MIT's PhotoGuard aims to protect original images from AI manipulation and deepfakes

AI-generated images are becoming more and more realistic, and so far there is no reliable way to detect them. Researchers at MIT can't change that, but they can at least protect existing images from AI manipulation.

Instead of trying to detect manipulation, the researchers want to make the original images more resistant, so that manipulation is either impossible or very hard.

The system, called "PhotoGuard", adds minimal pixel perturbations to an original image. These changes are invisible to humans but are picked up by AI models.

The perturbations are intended to make image manipulation much more difficult. The system was developed by the Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology (MIT).

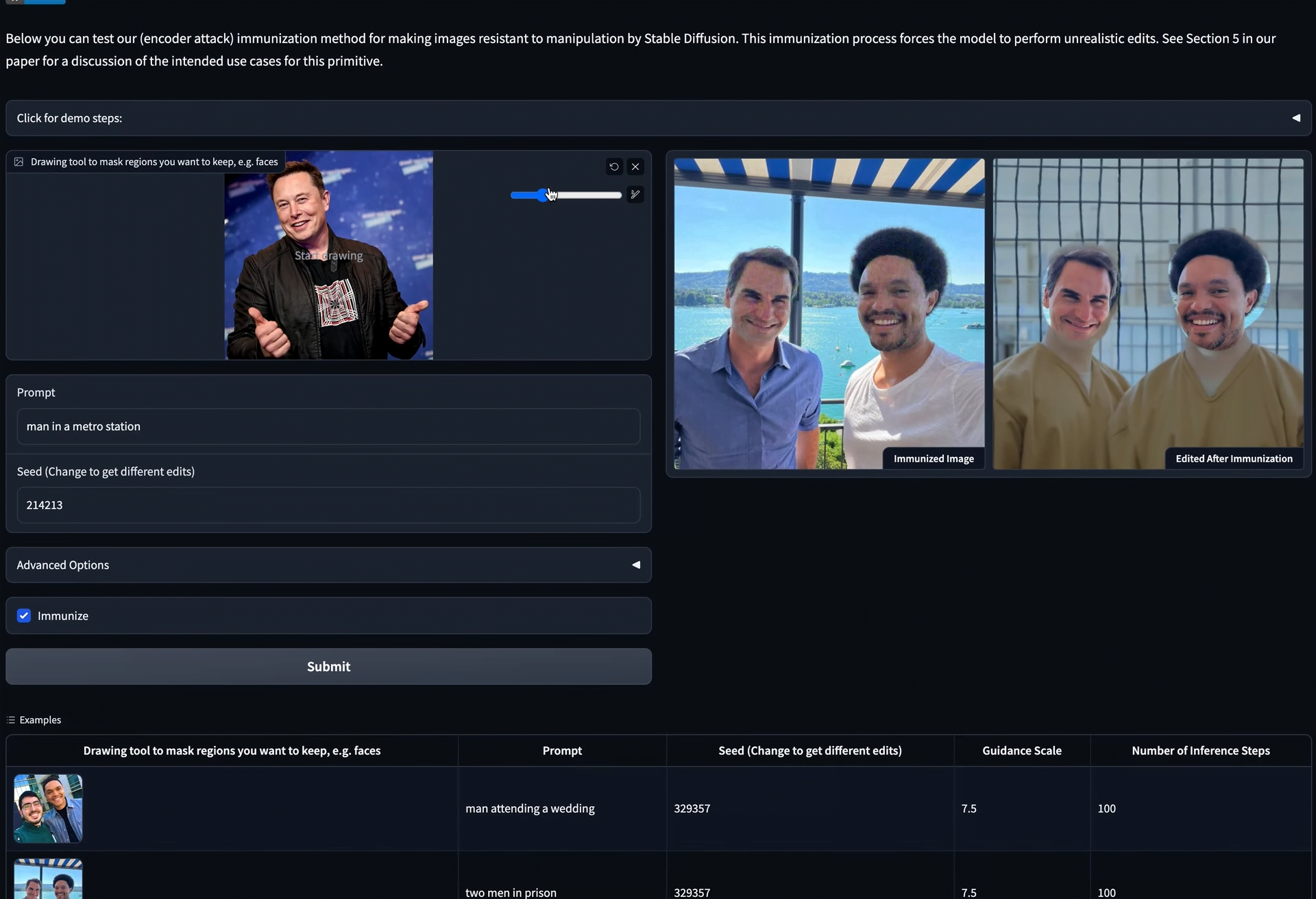

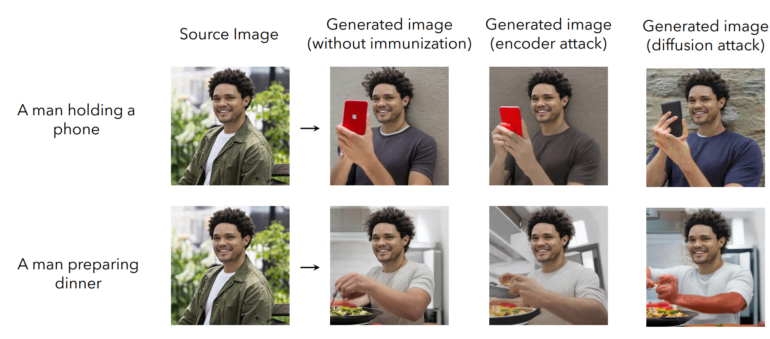

PhotoGuard uses two methods to "immunize" an image against AI manipulation. The first method, the so-called "encoder" attack, targets the AI model's abstraction of the image in latent space. It alters the image so that this latent representation shifts, the model no longer clearly recognizes the image as such, and its edits come out flawed. The change is analogous to a grammatically incorrect sentence whose meaning is still clear to humans but which can confuse a language model.
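As a rough illustration of the encoder attack idea, and not the researchers' actual code, the sketch below runs projected gradient descent against a toy linear "encoder". Everything in it is an assumption made for the example: the matrix `W` stands in for a real image encoder, the function name `encoder_attack` and the values for `eps`, `step_size`, and `iters` are hypothetical, and the uniform gray target is just one possible choice.

```python
import numpy as np

def encoder_attack(image, target, encode, encode_grad,
                   eps=0.06, step_size=0.002, iters=200):
    """Find a small perturbation that moves encode(image) toward encode(target).

    The perturbation is kept inside an L-infinity ball of radius eps so the
    immunized image stays visually close to the original.
    """
    z_target = encode(target)
    delta = np.zeros_like(image)
    for _ in range(iters):
        residual = encode(image + delta) - z_target
        grad = encode_grad(residual)  # gradient of ||residual||^2 up to a factor 2
        delta = np.clip(delta - step_size * np.sign(grad), -eps, eps)
        # keep the perturbed image inside the valid pixel range [0, 1]
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta

# Toy setup (assumption): a random linear map plays the role of the encoder.
rng = np.random.default_rng(0)
W = rng.normal(size=(8, 64))
encode = lambda x: W @ x
encode_grad = lambda r: W.T @ r  # backpropagates a latent residual to pixels

image = rng.uniform(0.2, 0.8, size=64)  # flattened 8x8 "photo"
target = np.full(64, 0.5)               # uniform gray target image

immunized = encoder_attack(image, target, encode, encode_grad)
before = np.linalg.norm(encode(image) - encode(target))
after = np.linalg.norm(encode(immunized) - encode(target))
print(f"latent distance to target: {before:.3f} -> {after:.3f}")
print(f"largest pixel change: {np.max(np.abs(immunized - image)):.3f}")
```

With a real model, the hand-written gradient would be replaced by automatic differentiation through the actual encoder. The structure stays the same: small signed steps, then a projection back into the allowed perturbation budget.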

The second method, the diffusion attack, is more sophisticated: the researchers define a specific target image and compute minimal pixel changes to the original image so that, during inference, the model's output is steered toward that target. When the AI model then tries to modify the original image, it is automatically redirected to the target image defined by the researchers, and the result no longer makes sense.
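The difference from the encoder attack can be sketched in a few lines: here the loss is measured on the final output of the whole editing pipeline, so the gradient has to be carried back through every step. The example below is a toy under stated assumptions, not PhotoGuard's implementation. The "editor" is a hypothetical stand-in made of a linear encoder `W`, a few `tanh` update steps with matrix `A`, and a linear decoder `D`; all names and hyperparameters are invented for illustration.

```python
import numpy as np

def run_editor(x, W, A, D, steps):
    """Toy stand-in for an image editor: encode, iterate, decode."""
    z = W @ x
    latents = [z]
    for _ in range(steps):
        z = np.tanh(A @ z)
        latents.append(z)
    return D @ z, latents

def edit_error(x, target, W, A, D, steps):
    """Squared distance between the editor's output and the target image."""
    out, _ = run_editor(x, W, A, D, steps)
    return float(np.sum((out - target) ** 2))

def output_loss_grad(x, target, W, A, D, steps):
    """Gradient of edit_error w.r.t. x, backpropagated through every step."""
    out, latents = run_editor(x, W, A, D, steps)
    g = D.T @ (2.0 * (out - target))   # gradient w.r.t. the final latent
    for z in reversed(latents[1:]):    # each z equals tanh(A @ previous z)
        g = A.T @ (g * (1.0 - z ** 2))
    return W.T @ g

def diffusion_attack(image, target, W, A, D, steps=4,
                     eps=0.06, step_size=0.002, iters=200):
    """Perturb image within eps so the editor's output drifts toward target."""
    delta = np.zeros_like(image)
    best_delta = delta
    best_loss = edit_error(image, target, W, A, D, steps)
    for _ in range(iters):
        grad = output_loss_grad(image + delta, target, W, A, D, steps)
        delta = np.clip(delta - step_size * np.sign(grad), -eps, eps)
        delta = np.clip(image + delta, 0.0, 1.0) - image
        current = edit_error(image + delta, target, W, A, D, steps)
        if current < best_loss:        # keep the best perturbation seen so far
            best_delta, best_loss = delta, current
    return image + best_delta

# Toy setup (assumption): random matrices play the role of the model.
rng = np.random.default_rng(0)
W = rng.normal(size=(8, 64)) / 8.0
A = rng.normal(size=(8, 8)) * 0.5
D = rng.normal(size=(64, 8)) / 8.0

image = rng.uniform(0.2, 0.8, size=64)
target = np.full(64, 0.5)              # uniform gray target image

immunized = diffusion_attack(image, target, W, A, D)
loss_before = edit_error(image, target, W, A, D, 4)
loss_after = edit_error(immunized, target, W, A, D, 4)
print(f"distance of edited output to target: {loss_before:.3f} -> {loss_after:.3f}")
```

Backpropagating through every step is what makes this variant more expensive than the encoder attack: with a real diffusion model, each gradient computation has to pass through the full sampling loop rather than a single encoder call.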

Integrating manipulation protection directly into models

The researchers suggest that PhotoGuard or similar protections could be offered directly by model developers. For example, developers could offer an API service that immunizes images against the manipulation capabilities of their particular model. This immunization would also need to be compatible with future models and could be built in as a backdoor during model training.

Broad protection against AI manipulation will require a collaborative approach between developers, social media platforms, and policymakers, who could, for example, mandate that model developers also provide protection, the researchers say.

They also point out that PhotoGuard does not provide complete protection. Attackers could try to manipulate the protected image by, for example, cropping it, adding noise, or rotating it. In general, however, the researchers see potential for developing robust modifications that can withstand such manipulation.
