Moonshot AI’s Kimi K2 Thinking sets new agentic reasoning records in open-source LLMs

Update –

- CNBC, citing a source familiar with the matter, reported that training the Kimi K2 model cost around $4.6 million.

- Simon Willison noted that the model’s MIT license contains a slight modification: companies using it commercially must prominently display the Kimi K2 name if they generate more than $20 million in monthly revenue or have more than 100 million monthly active users.

This clause may reflect concerns about U.S. companies adopting Chinese open-source models, which are often cheaper, without disclosing that they use them in commercial products.

Chinese AI company Moonshot AI has unveiled Kimi K2 Thinking, a new open-source language model the company calls the "best open-source thinking model."

Designed as a "thinking agent," K2 Thinking is built to work through complex tasks step by step, drawing on a range of tools. It relies on "test-time scaling," a technique that increases both the number of reasoning tokens and the number of tool calls at inference time to boost performance. According to Moonshot AI, the model can make 200 to 300 consecutive tool calls without human intervention while staying logically consistent across hundreds of steps on challenging problems.
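
The pattern described above, with reasoning interleaved with tool calls until the model answers or hits a step budget, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Moonshot's implementation: the `Step` format, `run_agent`, `toy_model` and the `add_one` tool are all hypothetical, and the default cap of 300 calls only mirrors the upper figure Moonshot cites.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One model turn (hypothetical format): either a tool call or a final answer."""
    thought: str
    tool: Optional[str] = None
    tool_input: str = ""
    answer: Optional[str] = None


def run_agent(model: Callable[[list], Step], tools: dict, task: str,
              max_tool_calls: int = 300) -> tuple:
    """Interleave reasoning and tool calls until the model answers or the budget is spent."""
    history = [("task", task)]
    calls = 0
    while True:
        step = model(history)                       # reason over everything seen so far
        history.append(("thought", step.thought))
        if step.answer is not None:                 # the model decided it is done
            return step.answer, calls
        if calls >= max_tool_calls:                 # budget spent: stop instead of calling again
            return None, calls
        result = tools[step.tool](step.tool_input)  # execute the requested (hypothetical) tool
        calls += 1
        history.append(("observation", result))     # feed the result back into the context


# Toy stand-ins for a real model and tool: keep incrementing until the value reaches 3.
def toy_model(history: list) -> Step:
    observations = [v for kind, v in history if kind == "observation"]
    value = int(observations[-1]) if observations else 0
    if value >= 3:
        return Step(thought="target reached", answer=str(value))
    return Step(thought=f"value is {value}, increment", tool="add_one", tool_input=str(value))


answer, n_calls = run_agent(toy_model, {"add_one": lambda s: str(int(s) + 1)}, "count to 3")
print(answer, n_calls)  # 3 3
```

In this toy, raising `max_tool_calls` is the tool-call half of test-time scaling: the model is allowed more steps before it is forced to stop, at the cost of more compute per task.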

K2 Thinking is massive, with one trillion parameters in total. Thanks to its mixture-of-experts architecture, only 32 billion parameters are active for any given token. The model has a context window of 256,000 tokens.
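
The "only 32 billion active" figure works out to about 3.2 percent of the weights per token. The sketch below pairs that calculation with a toy top-k router to show why most of a mixture-of-experts model sits idle for any single token. The eight scalar "experts", the choice of k = 2, and softmax gating over the selected experts are illustrative assumptions, not Kimi K2's actual configuration.

```python
import math

# Share of weights used per token, from the figures in the article.
total_params = 1e12
active_params = 32e9
print(f"{active_params / total_params:.1%}")  # 3.2%


def route(scores: list, k: int) -> dict:
    """Toy top-k router: keep the k highest-scoring experts, softmax over just those."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    exps = {i: math.exp(scores[i]) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x: float, experts: list, scores: list, k: int) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts are never evaluated."""
    weights = route(scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Hypothetical setup: 8 scalar "experts", 2 active per token.
experts = [lambda x, m=m: m * x for m in range(8)]
scores = [0.1, 0.4, 2.0, 0.3, 1.5, 0.2, 0.0, 0.6]  # router logits for one token
print(sorted(route(scores, k=2)))  # [2, 4]
y = moe_forward(1.0, experts, scores, k=2)
```

In a real model each expert is a full feed-forward block, so skipping six of eight experts skips most of the layer's compute, which is the effect the total-versus-active parameter split describes.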

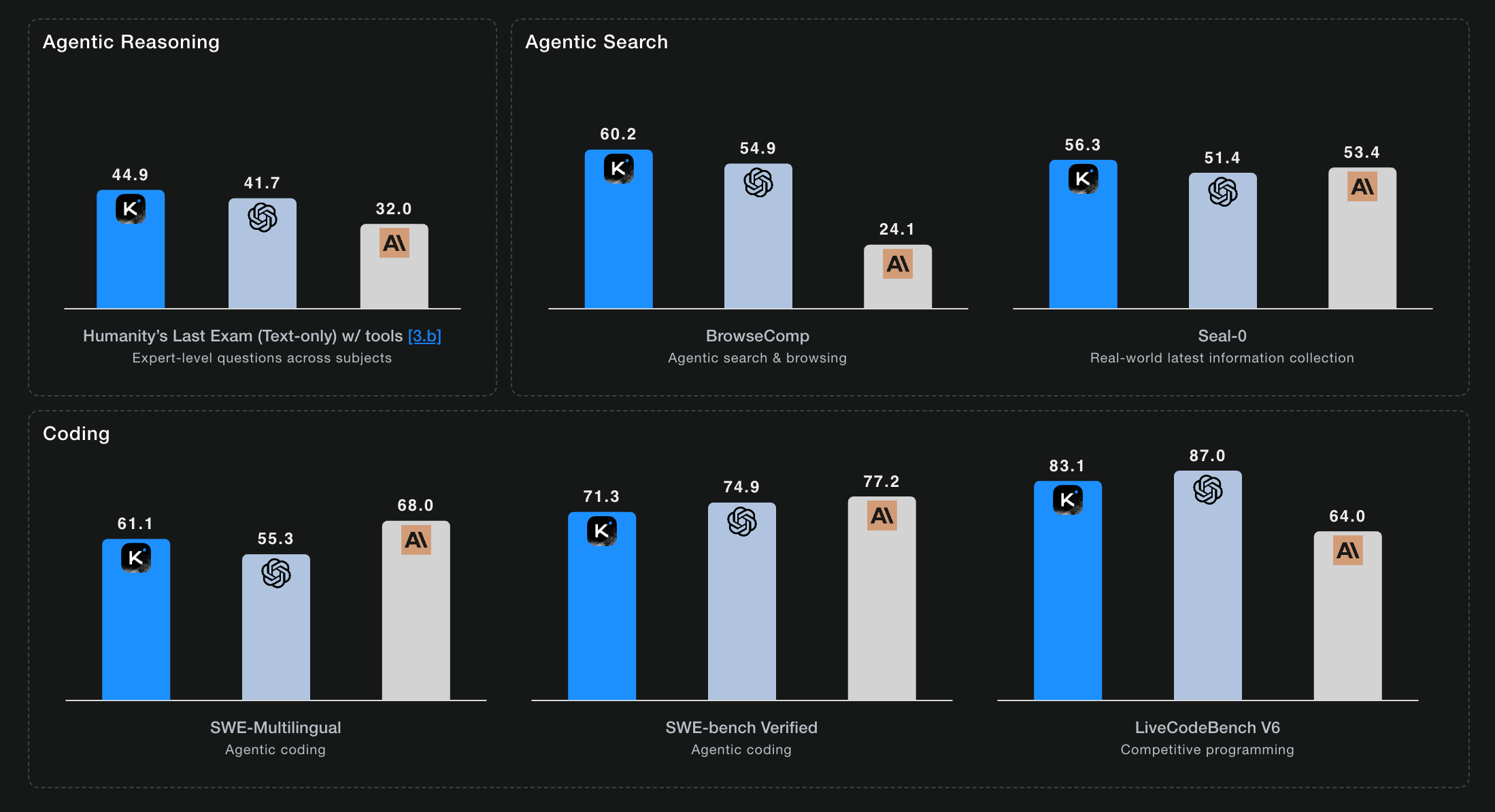

Benchmark results highlight agentic reasoning and coding strengths

Moonshot AI says K2 Thinking has set records on several benchmarks for reasoning, coding, and agent-based tasks. On Humanity's Last Exam (HLE) with tools, it scored 44.9 percent, which the company says is a new high for that test. On BrowseComp, which tests agentic search and browsing, it reached 60.2 percent, well above the human baseline of 29.2 percent.

For coding, K2 Thinking scored 71.3 percent on SWE-Bench Verified and 61.1 percent on SWE-Multilingual. Moonshot's comparison chart shows these results putting K2 Thinking ahead of leading commercial models such as GPT-5 and Claude Sonnet 4.5, as well as Chinese rival DeepSeek-V3.2, on certain tests.

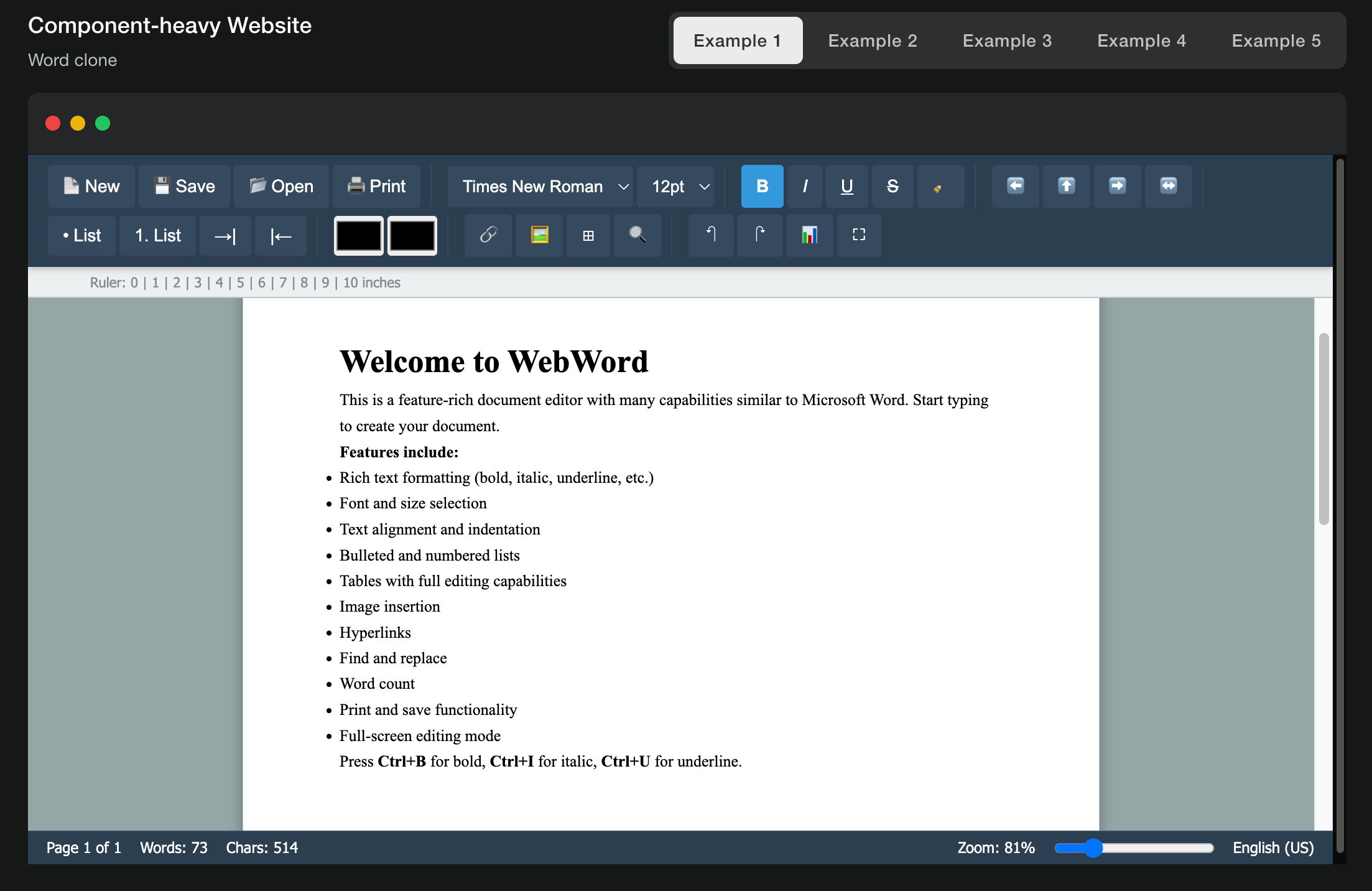

To show off its coding skills, Moonshot highlights a demo where Kimi K2 Thinking generated a fully functional Word-style document editor from a single prompt. The company says the model delivers strong results on HTML, React, and other front-end tasks, turning prompts into responsive, production-ready apps.

Moonshot also points to K2 Thinking's step-by-step reasoning. In one example, the model solved a PhD-level math problem using 23 nested rounds of reasoning and tool calls. It independently researched the relevant literature, ran calculations, and arrived at the correct answer, which Moonshot presents as evidence that the model can handle structured, long-horizon problem solving.

Agent-based search and multi-step research at scale

K2 Thinking can run dynamic cycles of agent-based search and browsing. The model loops through thinking, searching, browsing, thinking again, and programming, continually generating hypotheses, checking them against evidence, and assembling coherent answers.

In one demo, K2 Thinking took on a complex research task that required identifying a person based on specific criteria, including a college degree, NFL career, and roles in movies and TV. The model systematically searched multiple sources and eventually identified former NFL player Jimmy Gary Jr.

To make K2 Thinking more practical to run, Moonshot AI uses quantization-aware training, which compresses parts of the model to reduce memory requirements and roughly doubles text generation speed compared to the uncompressed version. The company says all published benchmark results were obtained with this optimized model.
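
The article does not detail Moonshot's recipe, but the core operation of quantization-aware training is generally "fake quantization": during training, weights are rounded to a small integer grid and mapped back to floats, so the model learns to tolerate the rounding error it will face after compression. The sketch below is a generic illustration only; the 4-bit width, the 16-bit baseline in the memory estimate, and the symmetric per-tensor scheme are assumptions for the example, not figures from Moonshot.

```python
def fake_quantize(weights: list, bits: int = 4) -> list:
    """Symmetric per-tensor quantize-then-dequantize (illustrative bit width).

    In quantization-aware training this runs in the forward pass, so the network
    learns weights that still work after rounding. The backward pass usually
    treats the rounding as identity (the "straight-through estimator"),
    which is not shown here.
    """
    qmax = 2 ** (bits - 1) - 1                           # 7 for signed 4-bit integers
    scale = max(abs(w) for w in weights) / qmax or 1.0  # guard against an all-zero tensor
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Storage for the weights alone (no activations or KV cache), in decimal GB."""
    return n_params * bits / 8 / 1e9


w = [0.82, -0.41, 0.07, -0.95, 0.33]
print(fake_quantize(w))  # each value snapped to a multiple of max|w| / 7
print(weight_memory_gb(1e12, 16), weight_memory_gb(1e12, 4))  # 2000.0 500.0
```

The memory arithmetic shows why lower-precision weights matter at the trillion-parameter scale; any speedup in text generation depends on hardware and kernels and is not modeled here.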

K2 Thinking is available now on kimi.com and via API. The full Agentic Mode is coming soon; the current chat mode offers a streamlined toolset for faster responses. Model weights are available on Hugging Face.

Moonshot AI drew attention in July with the standard Kimi K2 model, which held its own against top systems such as Claude Sonnet 4 and GPT-4.1. That was especially notable because Kimi K2 had no dedicated reasoning training and was instead tuned for agentic tasks and tool use. The trillion-parameter mixture-of-experts model had already posted strong results on math, science, and multilingual benchmarks.
