Moonshot AI's open-source Kimi-VL tackles text, images and video with just 2.8 billion parameters

A new open-source AI model from Chinese startup Moonshot AI processes images, text, and videos with surprising efficiency. Kimi-VL stands out for its ability to handle long documents, complex reasoning, and user interface understanding.

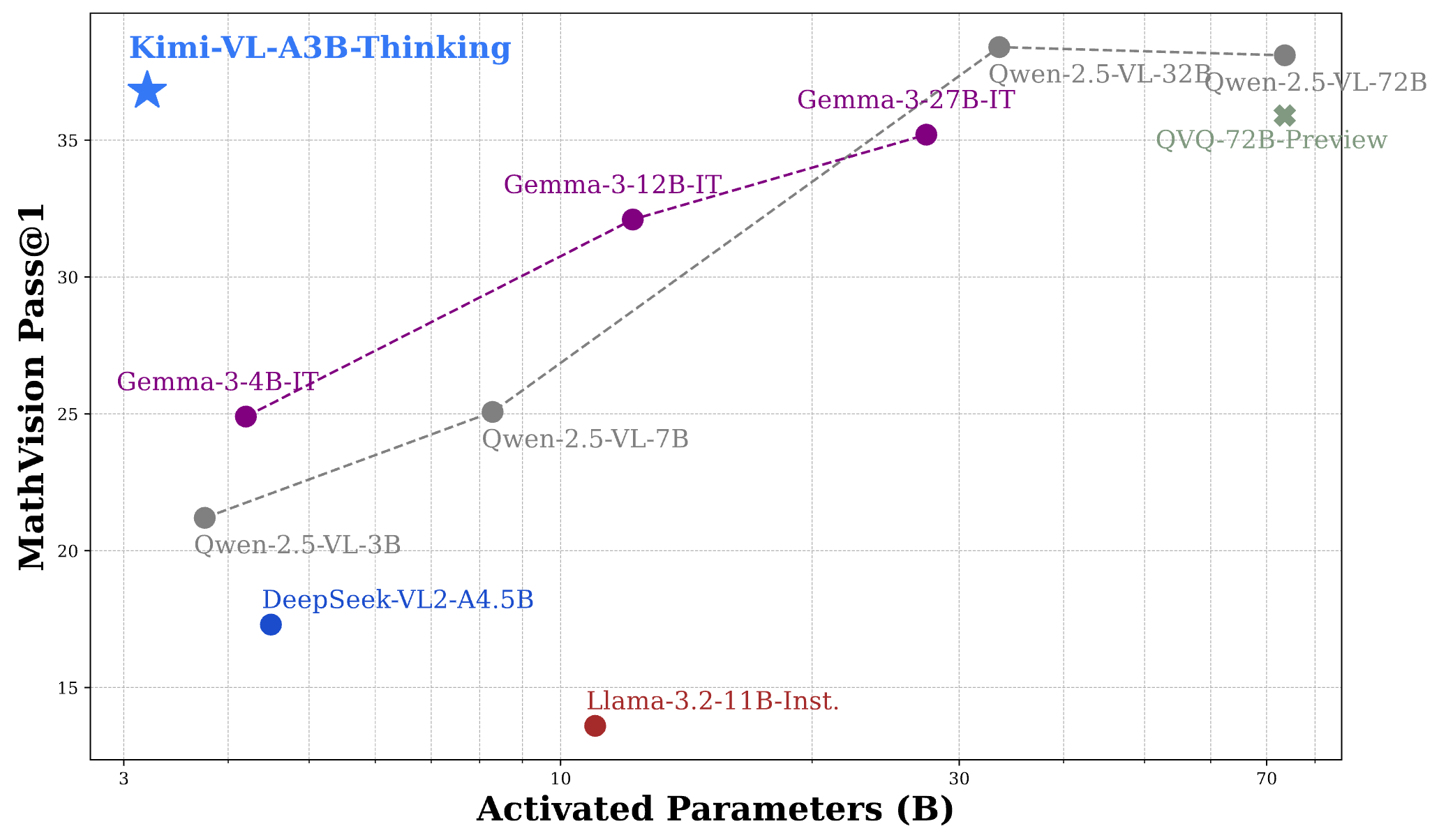

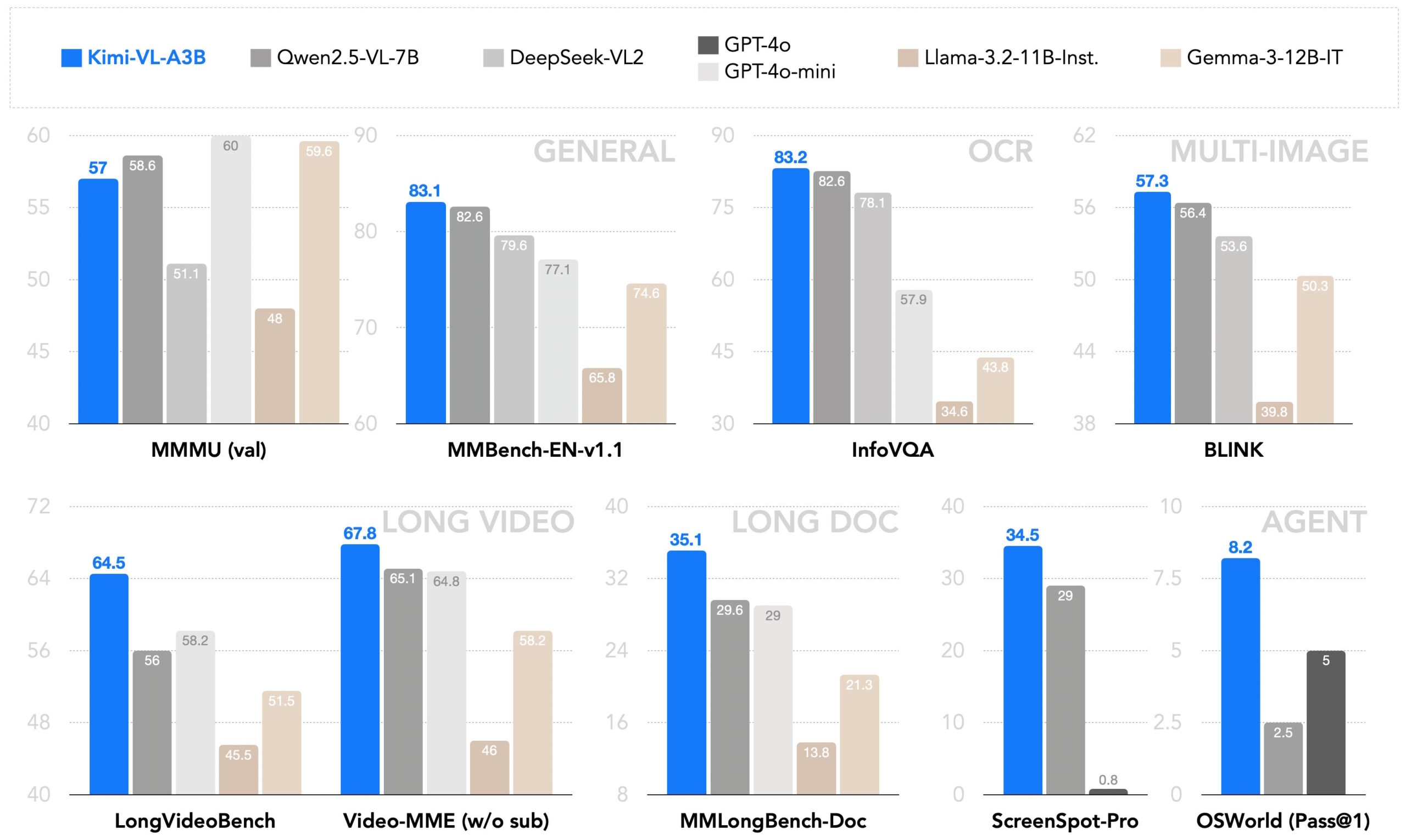

According to Moonshot AI, Kimi-VL uses a mixture-of-experts architecture, which activates only a subset of the model's parameters for each input. With just 2.8 billion active parameters, far fewer than many large models, Kimi-VL delivers results comparable to much bigger systems across various benchmarks.
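
To make the idea of "active" parameters concrete, here is a minimal sketch of top-k expert routing, the general technique behind mixture-of-experts models. It is an illustration only, not Moonshot AI's implementation: the function names, the eight toy experts, the gate scores, and the choice of k=2 are all assumptions made for the example.

```python
import math

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    m = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k=2):
    """Combine the outputs of only the k selected experts; the rest never run."""
    routes = top_k_route(gate_logits, k)
    output = sum(w * experts[i](x) for i, w in routes)
    return output, [i for i, _ in routes]

# Hypothetical toy setup: 8 "experts", each of which just scales its input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical gate scores; in a real model a learned router produces these.
gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

output, used = moe_forward(10.0, experts, gate_logits, k=2)
print(used)    # indices of the experts that actually ran
print(output)  # weighted mix of those two experts' outputs
```

Only two of the eight toy experts are evaluated here, which is the sense in which a mixture-of-experts model can hold many parameters in total while using a small fraction of them for any given input.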

The model boasts a maximum context window of 128,000 tokens, enough to process an entire book or lengthy video transcript. Moonshot AI reports that Kimi-VL consistently scores well on tests like LongVideoBench and MMLongBench-Doc.

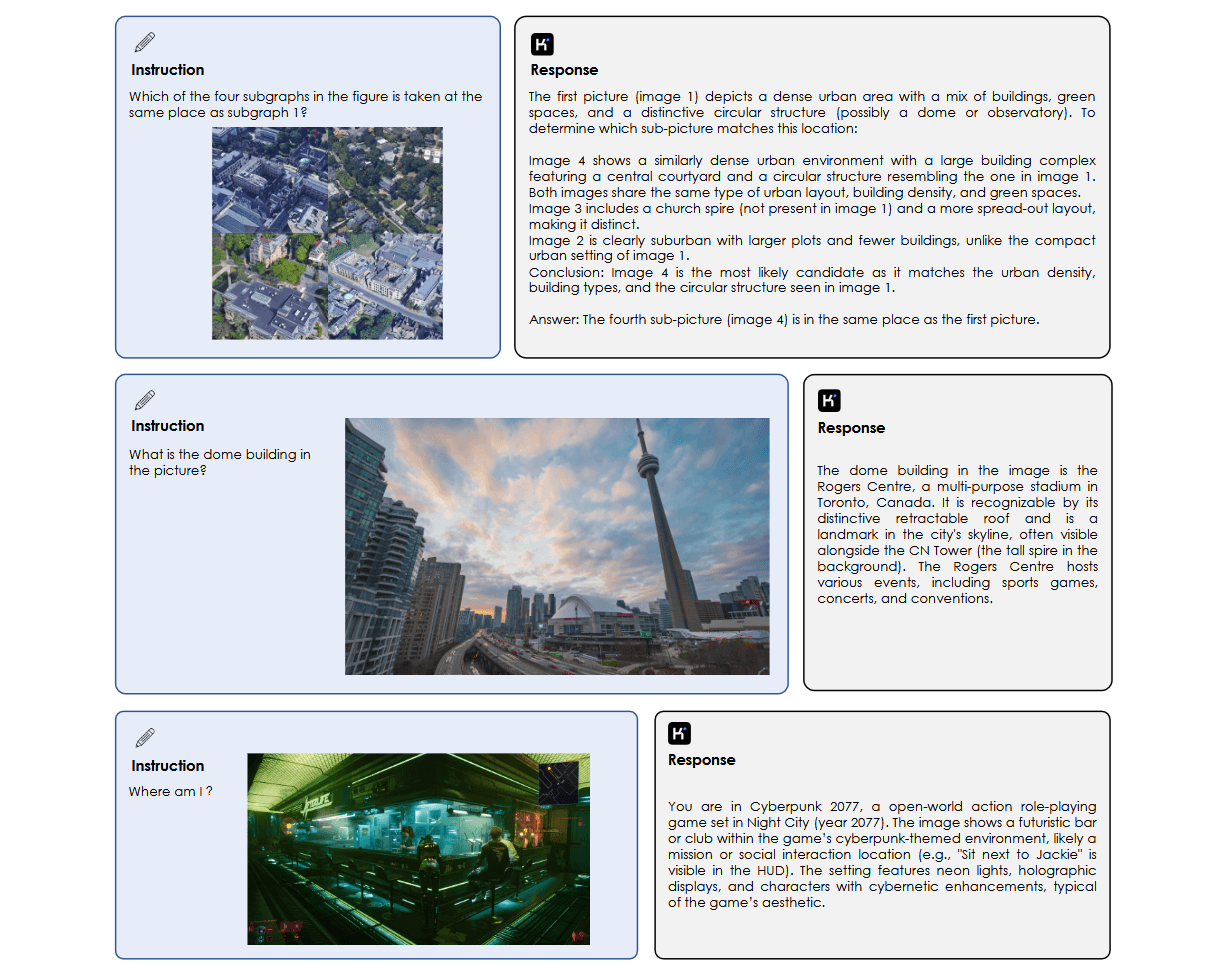

Kimi-VL's image processing capabilities are notable. Unlike some systems, it can analyze complete screenshots or complex graphics without breaking them into smaller pieces. The model also handles math problems presented as images and can read handwritten notes. In one test, it analyzed a handwritten manuscript, identified references to Albert Einstein, and explained their relevance.

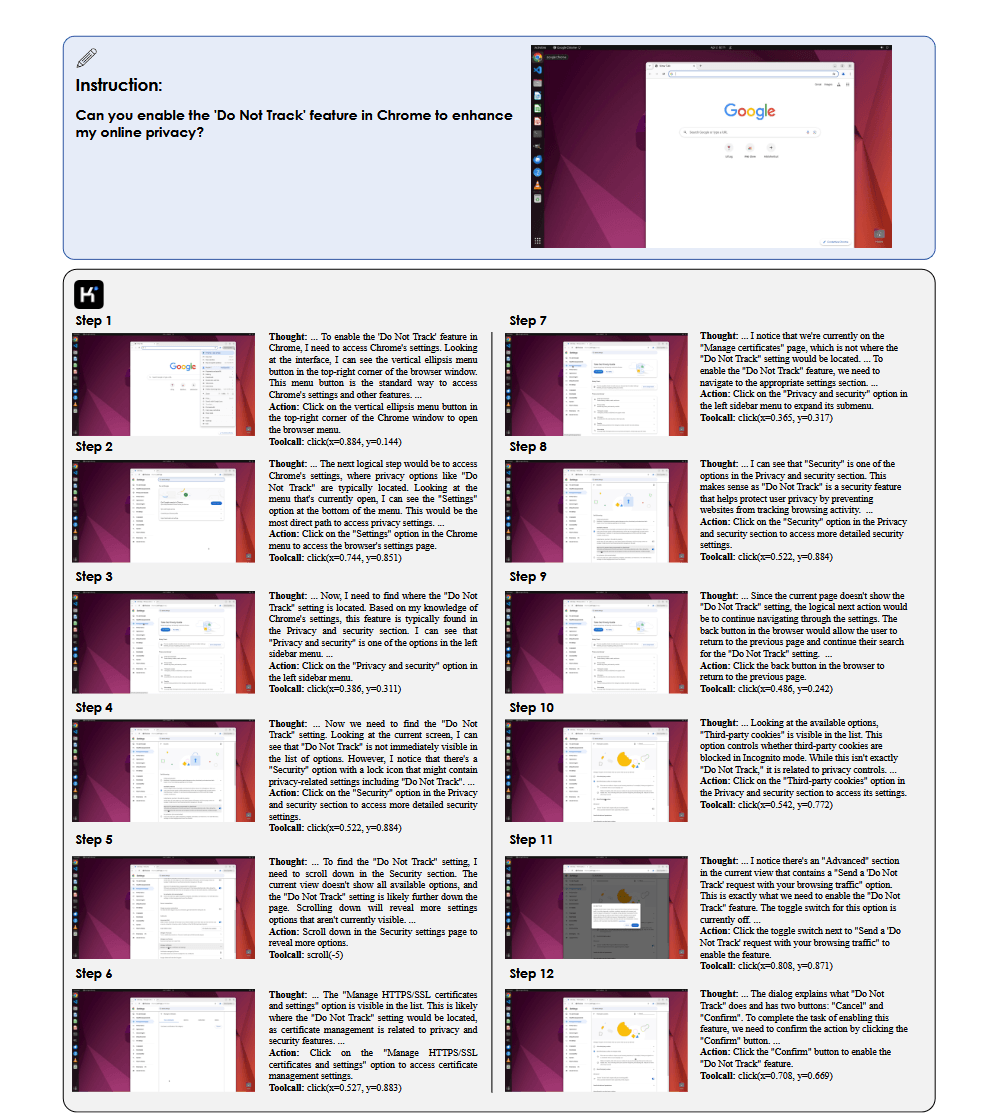

The system also functions as a software assistant, interpreting graphical user interfaces and automating digital tasks. Moonshot AI claims that in tests where the model navigated browser menus or changed settings, it outperformed many other systems, including GPT-4o.

Compact design, competitive results

Compared to other open-source models like Qwen2.5-VL-7B and Gemma-3-12B-IT, Kimi-VL appears more efficient. According to Moonshot AI, it leads in 19 out of 24 benchmarks, despite running with far fewer active parameters. On MMBench-EN and AI2D, it reportedly matches or beats scores usually seen from larger, commercial models.

The company attributes much of this performance to its training approach. Beyond standard supervised fine-tuning, Kimi-VL uses reinforcement learning. A specialized version called Kimi-VL-Thinking was trained to work through longer chains of reasoning, boosting performance on tasks that require more complex thought, such as mathematical reasoning.

Kimi-VL isn't without constraints. Its current size limits its performance on highly language-intensive or niche tasks, and it still faces technical challenges with very long contexts, even with the expanded context window.

Moonshot AI says it plans to develop larger model versions, incorporate more training data, and improve fine-tuning. The company's stated long-term goal is to create a "powerful yet resource-efficient system" suitable for real-world use in research and industry.

Earlier this year, Moonshot AI released Kimi k1.5, a multimodal model for complex reasoning that the company claims holds its own against GPT-4o in benchmarks. Kimi k1.5 is available on the kimi.ai web interface. A demo of Kimi-VL can be found on Hugging Face.
