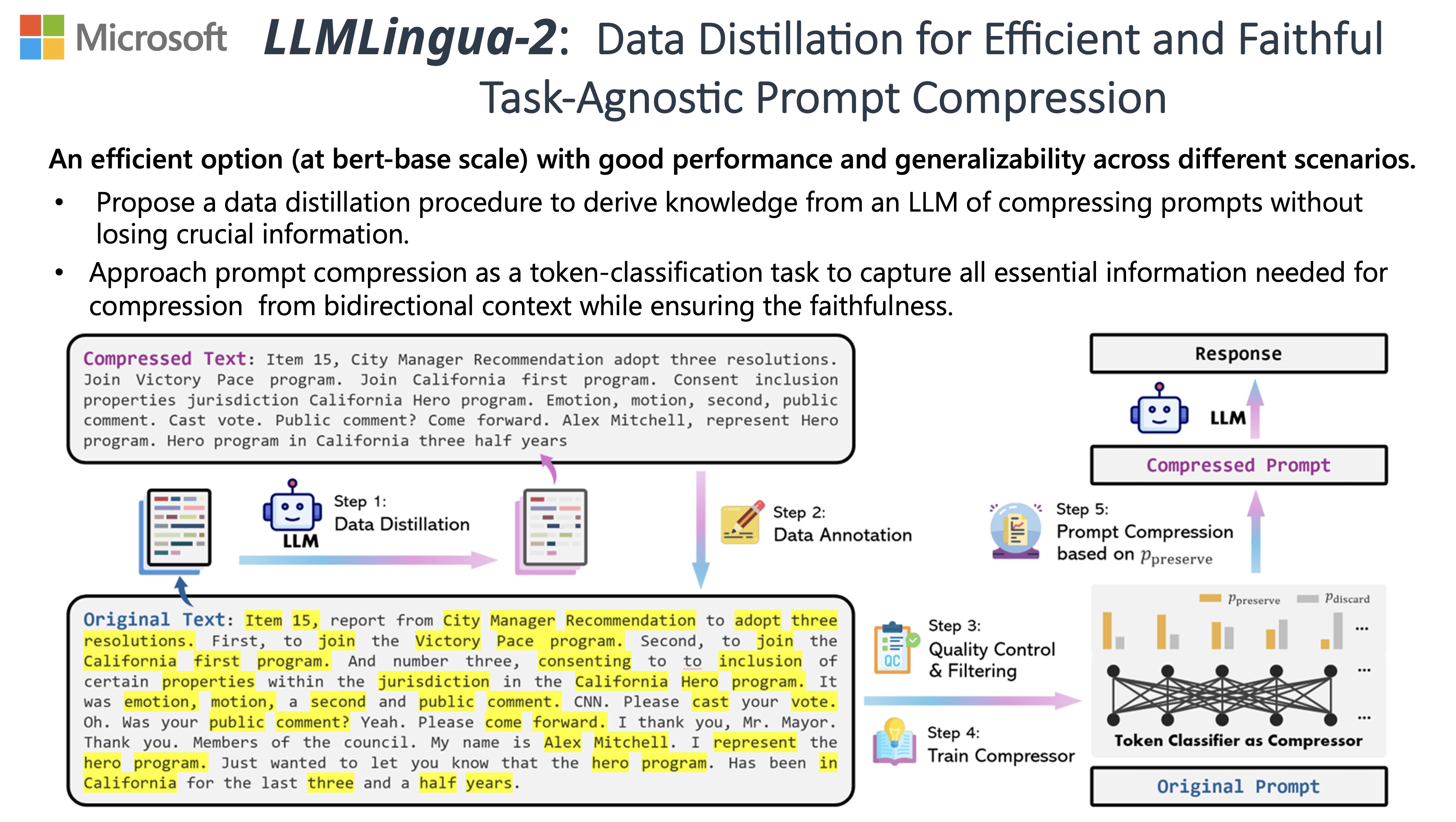

Microsoft Research has released LLMLingua-2, a model for task-agnostic compression of prompts. It enables shortening prompts to as little as 20 percent of their original length, reducing costs and latency.

According to Microsoft Research, LLMLingua-2 intelligently compresses long prompts by removing unnecessary words or tokens while preserving essential information. This can reduce prompts to as little as 20 percent of their original length, resulting in lower costs and latency. "Natural language is redundant, amount of information varies," the research team writes.

According to Microsoft Research, LLMLingua 2 is 3 to 6 times faster than its predecessor LLMLingua and similar methods. LLMLingua 2 was trained using examples from MeetingBank, which contains transcripts of meetings and their summaries.

To compress a text, the original is fed into the trained model. The model scores each word, assigning points for retention or removal while considering the surrounding context. The words with the highest retention values are then selected to create the shortened prompt.

The Microsoft Research team evaluated LLMLingua-2 on several datasets, including MeetingBank, LongBench, ZeroScrolls, GSM8K, and BBH. Despite its small size, the model showed significant performance improvements over strong baselines and demonstrated robust generalization across different LLMs.

System Prompt:

You are an excellent linguist and very good at compressing passages into short expressions by removing unimportant words, while retaining as much information as possible.

User Prompt:

Compress the given text to short expressions, and such that you (GPT-4) can reconstruct it as close as possible to the original. Unlike the usual text compression, I need you to comply with the 5 conditions below:

1. you can ONLY remove unimportant words.

2. do not reorder the original words.

3. do not change the original words.

4. do not use abbreviations or emojis.

5. do not add new words or symbols.

Compress the origin aggressively by removing words only. Compress the origin as short as you can, while retaining as much information as possible. If you understand, please compress the following text: {text to compress}

The compressed text is: [...]

Microsoft's compression prompt for GPT-4

For various language tasks such as question answering, summarization, and logical reasoning, it consistently outperformed established frameworks such as the original LLMLingua and selective context strategies. Remarkably, the same compression worked effectively for different LLMs (from GPT-3.5 to Mistral-7B) and languages (from English to Chinese).

LLMLingua-2 can be implemented with just two lines of code. The model has also been integrated into the widely used RAG frameworks LangChain and LlamaIndex.

Microsoft provides a demo, practical application examples, and a script that illustrates the benefits and cost savings of prompt compression. The company sees this as a promising approach to achieve better generalizability and efficiency with compressed prompts.