Researchers at New York University have developed an AI system that might be able to learn language like a toddler. The AI used video recordings from a child's perspective to understand basic aspects of language development.

Today's AI systems have impressive capabilities, but they learn inefficiently: they need millions of examples and consume massive amounts of computing power. They probably also do not develop the kind of real understanding that humans do.

An AI that could learn like a child would be able to understand meaning, respond to new situations, and learn from new experiences. If AI could learn like humans, we would see a new generation of artificial intelligence that is faster, more efficient, and more versatile.

AI learns to associate visual cues with words

In a study published in the journal Science, researchers at New York University examined how toddlers associate words with specific objects or visual concepts, and whether an AI system can learn similarly.

The research team used video recordings from the perspective of a single child, made between the ages of 6 and 25 months, to train a "relatively generic neural network".

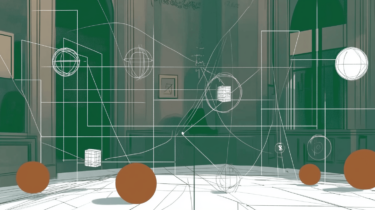

The AI, called Child's View for Contrastive Learning (CVCL), processed 61 hours of video together with the speech that accompanied what the child was seeing. The speech data includes, for example, statements made by the child's parents about an object visible in the video.

Using this data, the system learned which words and visual scenes tend to occur together, linking the two sensory modalities so that it could connect words to their meaning in the child's visual environment.
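The idea behind contrastive learning can be illustrated with a small sketch. This is not the researchers' code: the function names, the toy two-dimensional embeddings, and the temperature value of 0.07 are all assumptions made for illustration. The sketch computes a symmetric contrastive loss, which is low when each video frame embedding is most similar to the utterance that actually accompanied it and high when the pairs are mixed up.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def log_softmax(row):
    # Numerically stable log-softmax over one row of scores.
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]

def contrastive_loss(frame_embs, utterance_embs, temperature=0.07):
    """Symmetric contrastive loss; frame i and utterance i are a true pair.

    The temperature is an illustrative assumption, not a value from the study.
    """
    frames = [normalize(f) for f in frame_embs]
    utts = [normalize(u) for u in utterance_embs]
    n = len(frames)
    # Similarity of every frame to every utterance in the batch.
    sims = [[dot(f, u) / temperature for u in utts] for f in frames]
    # Frame -> utterance direction: each row should peak on the diagonal.
    loss_f2u = -sum(log_softmax(sims[i])[i] for i in range(n)) / n
    # Utterance -> frame direction: each column should peak on the diagonal.
    cols = [[sims[i][j] for i in range(n)] for j in range(n)]
    loss_u2f = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (loss_f2u + loss_u2f) / 2

# Toy data: two frames and the utterances heard alongside them.
frames = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]
shuffled = [matched[1], matched[0]]  # utterances paired with the wrong frames

aligned_loss = contrastive_loss(frames, matched)
misaligned_loss = contrastive_loss(frames, shuffled)
print(aligned_loss < misaligned_loss)
```

During training, a model of this kind would adjust its image and text encoders to push this loss down, so that co-occurring frames and utterances end up close together in a shared space.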

The AI was able to learn many word-object associations that occurred in the child's everyday experience, with accuracy comparable to that of an AI trained on 400 million captioned images from the Internet. It was also able to generalize to new visual objects and align its visual and linguistic concepts.
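One common way to test word-object associations like these is a forced-choice trial: the model is given a word and several candidate images and must pick the image that matches. The sketch below illustrates that idea with toy embeddings. The function names and data are hypothetical, and the study's actual evaluation protocol may differ in its details.

```python
import math

def cosine(u, v):
    # Cosine similarity between two embedding vectors.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def pick_referent(word_emb, candidate_image_embs):
    # Return the index of the candidate image most similar to the word.
    scores = [cosine(word_emb, img) for img in candidate_image_embs]
    return max(range(len(scores)), key=scores.__getitem__)

def accuracy(trials):
    # Each trial is (word embedding, candidate image embeddings, target index).
    correct = sum(
        1 for word, images, target in trials
        if pick_referent(word, images) == target
    )
    return correct / len(trials)

# Hypothetical toy trials with four candidate images each.
trials = [
    ([1.0, 0.0], [[0.0, 1.0], [0.9, 0.1], [-1.0, 0.0], [0.2, 1.0]], 1),
    ([0.0, 1.0], [[0.1, 0.9], [1.0, 0.0], [0.7, 0.7], [-0.5, 0.0]], 0),
]
print(accuracy(trials))
```

Because the model only has to compare embeddings, the same procedure can be applied to images it never saw during training, which is what makes a test of generalization to new visual objects possible.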

The results show that basic aspects of word meaning can be learned from a child's experience, the researchers said. Whether this leads to actual understanding, and how easily and to what extent generalization is possible, remains to be seen.

"Our model acquires many word-referent mappings present in the child’s everyday experience, enables zero-shot generalization to new visual referents, and aligns its visual and linguistic conceptual systems. These results show how critical aspects of grounded word meaning are learnable through joint representation and associative learning from one child’s input."

From the paper

The researchers now want to find out what is needed to better adapt the model's learning process to children's early language acquisition. The model might need more data, or it could need to pay attention to a parent's gaze, or perhaps develop a sense of the solidity of objects, something that children intuitively understand.

One limitation of the study is that the AI was trained using only one child's experience. In addition, the AI was only able to learn simple object names and match them to images, and struggled with more complex words and concepts. It is also unclear how the AI could learn abstract words and verbs, since it relies on visual information that does not exist for these words.

Yann LeCun, head of AI research at Meta, argues that superhuman AI must first learn to learn more like humans, and that research must understand how children learn languages and understand the world with far less energy and data consumption than today's AI systems.

He is trying to create systems that can learn how the world works, much like baby animals do. These systems should be able to observe and learn from their environment, similar to what the researchers above have shown. Meta is collecting massive amounts of first-person video data for this purpose. LeCun is also working on a new brain-like AI architecture to overcome the limitations of today's systems and ground them more firmly in the real world.