Open-source Moonshine speech recognition model is up to five times faster than OpenAI's Whisper

U.S. startup Useful Sensors has developed Moonshine, an open-source speech recognition model that transcribes audio faster than OpenAI's Whisper while using fewer computing resources.

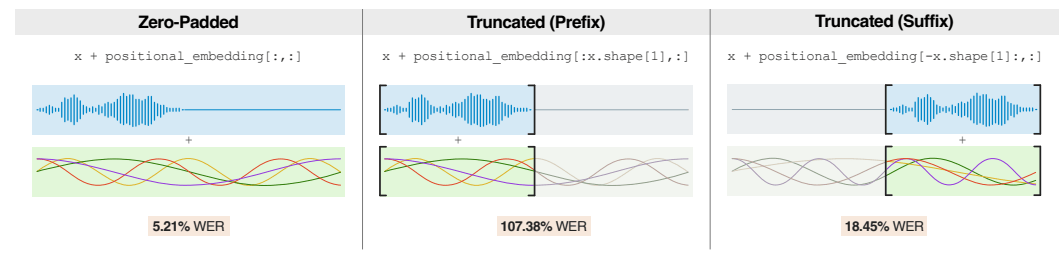

The company says it designed Moonshine specifically for real-time applications on hardware with limited resources. Moonshine's main advantage is its flexible architecture. Whisper pads every input to a fixed 30-second window regardless of the clip's actual length. Moonshine instead scales its processing time with the actual audio duration, which makes it particularly efficient for short clips.
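To make the padding overhead concrete, the following toy sketch counts how many seconds of audio each approach feeds to its encoder. It assumes, as a simplification, that encoder work grows with the length of audio processed, and it models long Whisper inputs as back-to-back 30-second chunks. The function names are hypothetical, and the ratio is a count of padded audio, not a prediction of real-world speedup.

```python
import math

WHISPER_WINDOW_S = 30.0  # Whisper pads (or splits) input into 30-second windows


def whisper_processed_seconds(duration_s: float) -> float:
    """Seconds of audio the encoder sees under fixed 30 s windows (toy model)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    chunks = max(1, math.ceil(duration_s / WHISPER_WINDOW_S))
    return chunks * WHISPER_WINDOW_S


def moonshine_processed_seconds(duration_s: float) -> float:
    """Variable-length model: the encoder sees only the actual audio (toy model)."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return duration_s


def relative_work(duration_s: float) -> float:
    """How many times more audio the fixed-window approach processes."""
    return whisper_processed_seconds(duration_s) / moonshine_processed_seconds(duration_s)


if __name__ == "__main__":
    for clip in (2.0, 10.0, 30.0):
        print(f"{clip:>4} s clip -> {relative_work(clip):.1f}x padded audio")
```

Under this model a 2-second clip forces the fixed-window approach to process 15 times as much (mostly silent) audio, while a full 30-second clip shows no difference, which is why the gap matters most for short utterances.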

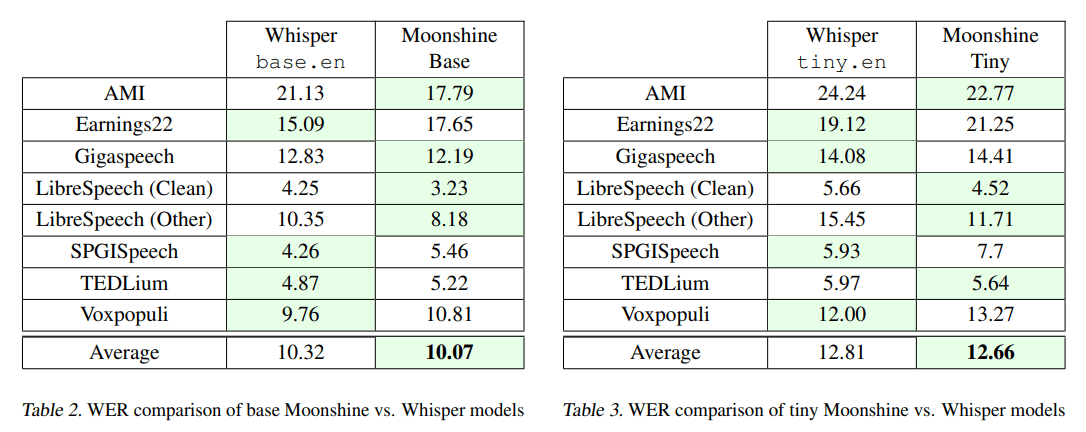

The model comes in two sizes. The smaller Tiny version features 27.1 million parameters, while the larger Base version uses 61.5 million parameters. For comparison, OpenAI's equivalent models are larger: Whisper tiny.en uses 37.8 million parameters, and base.en 72.6 million parameters.

Testing shows the Tiny model matches its Whisper counterpart's accuracy while consuming less computing power. Both Moonshine versions maintained lower word error rates than Whisper during tests, even with varying audio levels and background noise.
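Word error rate (WER), the metric behind these comparisons, is conventionally defined as (substitutions + deletions + insertions) divided by the number of words in the reference transcript, with the edit counts taken from a word-level Levenshtein alignment. The sketch below implements that standard definition; the function name is illustrative, and it skips the text normalization (casing, punctuation) that published benchmarks usually apply first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N via word-level edit distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = minimum edits to turn the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra hypothesis word
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    # One deletion ("the") and one substitution ("light" -> "lights"): 2 / 5
    print(word_error_rate("turn on the kitchen light", "turn on kitchen lights"))
```

Running the example prints `0.4`: two errors against a five-word reference. A lower WER means fewer transcription mistakes.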

The researchers identified one area for improvement: clips shorter than one second, which made up only a small portion of the training data. Adding more short segments to the training set could improve the model's performance on such clips.

Offline capabilities open new doors

By operating efficiently without an internet connection, Moonshine enables applications that weren't feasible before due to hardware constraints. While Whisper runs on standard computers, it demands too much power for smartphones and small devices like Raspberry Pi computers. Useful Sensors uses Moonshine for Torre, its English-Spanish translator.

The code for Moonshine is available on GitHub. Users should note that AI transcription systems, like LLMs, can hallucinate. Researchers at Cornell University found that Whisper fabricated content about 1.4 percent of the time, with higher rates for people with speech disorders such as aphasia. Other researchers and developers report much higher hallucination rates.

- Get the latest AI news from The Decoder.