OpenAI updates Codex model, adds trusted access program for cyber defense

Key Points

- OpenAI released GPT-5.2-Codex, an AI model designed to handle complex tasks and detect vulnerabilities. Verified security experts will get exclusive access to a version with fewer restrictions.

- Improved context compression and optimized image processing allow for more efficient analysis and more accurate interpretation of technical diagrams.

- While benchmarks show only minor performance gains over its predecessor, the model is specifically positioned for cybersecurity applications.

The new AI model GPT-5.2-Codex is built to solve complex tasks as an autonomous software agent. Because the technology is also effective at finding vulnerabilities, OpenAI is launching an exclusive access program where verified experts get a version with relaxed security filters.

Technically, OpenAI relies on advanced context compression, or "compaction." This method helps the model process long conversation histories and extensive code analyses more efficiently. The system is designed to maintain an overview even in complex projects, building directly on the capabilities of its predecessor, GPT-5.1-Codex-Max, which was already designed to work on tasks for longer than a day.
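To illustrate the general idea behind compaction, here is a minimal sketch. It is not OpenAI's method, which the company has not detailed here. The names `Message`, `count_tokens`, `summarize`, and `compact` are hypothetical, token counts are approximated by word counts, and the "summary" is a simple truncation standing in for what would presumably be a model-generated summary.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words as a crude stand-in for tokens.
    return len(text.split())


def summarize(messages: list[Message]) -> Message:
    # Placeholder summary: keep the first 8 words of each older message.
    # A real system would likely ask a model to write this summary.
    parts = [f"{m.role}: {' '.join(m.text.split()[:8])}" for m in messages]
    return Message("summary", " | ".join(parts))


def compact(history: list[Message], budget: int, keep_recent: int = 2) -> list[Message]:
    """Replace older messages with one summary when the history exceeds the budget."""
    total = sum(count_tokens(m.text) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return list(history)  # nothing to compress
    split = max(len(history) - keep_recent, 0)
    older, recent = history[:split], history[split:]
    # The most recent messages stay verbatim; everything older is condensed.
    return [summarize(older)] + recent
```

The design choice in this sketch is to keep the latest turns untouched, since they usually matter most for the next step, while older context is reduced to a short digest.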

OpenAI has also optimized image processing, allowing GPT-5.2-Codex to interpret technical diagrams or screenshots of user interfaces more precisely. According to the company, controlling native Windows environments now works more reliably than it did with the previous model.

Benchmarks show only slight gains

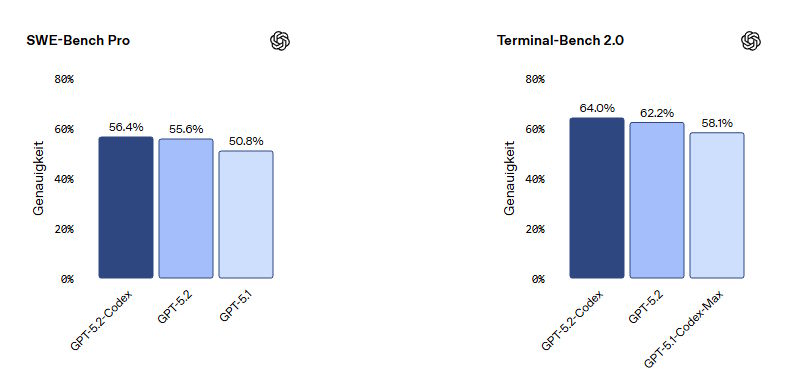

In standardized tests, the new model shows only slight improvements over the standard version. In SWE-Bench Pro, which tests whether a model can resolve real issues in GitHub repositories, GPT-5.2-Codex achieves a solution rate of 56.4 percent, compared to the standard version's 55.6 percent.

In Terminal-Bench 2.0, the gain is slightly larger, with accuracy rising to 64 percent. This test checks how well AI agents can operate command-line tools, set up servers, or compile code.
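To put the SWE-Bench Pro figures in perspective, the short calculation below works out the absolute and relative gain from the two reported scores. The helper name `gain` is hypothetical. Only the SWE-Bench Pro numbers are used, because no comparison score for Terminal-Bench 2.0 is given.

```python
def gain(new_pct: float, old_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new_pct - old_pct
    relative = absolute / old_pct * 100
    return round(absolute, 1), round(relative, 1)


# Reported SWE-Bench Pro solution rates: 56.4 percent vs. 55.6 percent.
swe_bench_pro = gain(56.4, 55.6)
print(swe_bench_pro)  # (0.8, 1.4)
```

In other words, the difference is 0.8 percentage points, or roughly 1.4 percent relative to the standard version's score.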

Dual-use risks for cybersecurity

A major focus of the release is cybersecurity. The increased ability to analyze code can be used for both defense and attack, and OpenAI cites a recent incident as proof. Security researcher Andrew MacPherson reportedly used an earlier version of the model to investigate a vulnerability in the React framework.

The AI discovered unexpected behaviors that, after further analysis, led to three previously unknown vulnerabilities capable of paralyzing services or exposing source code. According to OpenAI, the discovery demonstrates how autonomous AI systems can speed up the work of security researchers.

These capabilities carry risks. OpenAI now rates the model as approaching the "high" level for cybersecurity within its Preparedness Framework. In response, the company is introducing a trusted access program.

Aimed at certified security experts and organizations, the program gives participants access to models that are less restrictive than the public version. This allows experts to search for security vulnerabilities without being blocked by the AI's standard protection filters.

GPT-5.2-Codex is available now to paying ChatGPT users. Integration is handled via the command line, development environments, and the cloud, with an interface for third-party providers coming soon.
