OpenAI's GPT-4o outperforms human experts in moral reasoning, study finds

A recent study suggests that OpenAI's GPT-4o can offer moral explanations and advice that people consider superior to those of recognized ethics experts.

Researchers from the University of North Carolina at Chapel Hill and the Allen Institute for Artificial Intelligence explored whether large language models (LLMs) could be considered "moral experts." They conducted two studies comparing the moral reasoning of GPT models with that of humans.

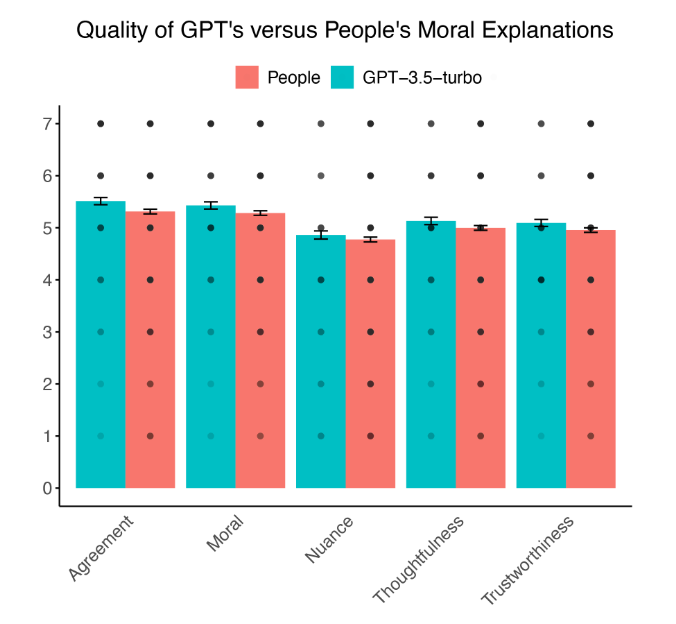

In the first study, 501 U.S. adults rated moral explanations written either by GPT-3.5-turbo or by other human participants. They rated GPT's explanations as more morally correct, trustworthy, and thoughtful than those written by humans.

Evaluators also agreed more often with the AI's assessments than with those of other humans. The differences were small, but the key finding is that, in the eyes of evaluators, the AI's moral reasoning matched or even slightly surpassed that of humans.

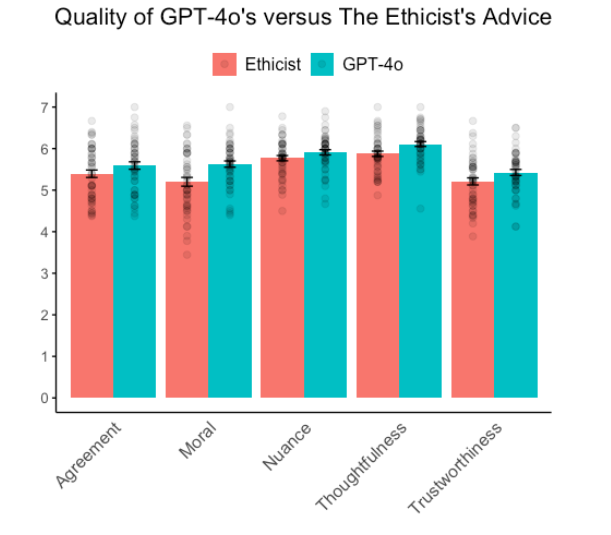

The second study compared advice from GPT-4o, OpenAI's newest model at the time, with advice from the renowned ethicist Kwame Anthony Appiah, who writes The New York Times' "The Ethicist" column. Nine hundred participants rated the quality of the advice given for 50 ethical dilemmas.

GPT-4o outperformed the human expert on almost every measure. People rated the AI-generated advice as more morally correct, trustworthy, thoughtful, and accurate. Only when it came to perceived nuance was there no significant difference between the AI and the human expert.

The researchers argue that these results show AI can pass a "Comparative Moral Turing Test" (cMTT). Interestingly, participants in both studies were often able to identify AI-generated content, which suggests the model would still fail the classic Turing test of passing as human in conversation. Other studies, however, suggest that GPT-4 can pass the Turing test.

Text analysis revealed that GPT-4o used more moral and positive language in its advice than the human expert. This could partly explain the higher ratings for the AI's advice, but it did not fully account for them.

The authors note that the study was limited to U.S. participants and that further research is needed to investigate cultural differences in how people perceive AI-generated moral reasoning. In addition, participants were not told that some of the advice came from an AI, which may have influenced the ratings.

Overall, the study shows that modern AI systems can provide moral reasoning and advice that people rate as comparable to or better than that of human experts. According to the researchers, this has implications for integrating AI into areas that require complex ethical decisions, such as therapy, legal advice, and personal care.
