OPT-IML: Meta releases open-source language model optimized for tasks

With OPT-IML, Meta provides an open-source language model the size of GPT-3 that is optimized for language tasks. It is available for research purposes only.

The "Open Pre-trained Transformer Instruction Meta-Learning" (OPT-IML) model is based on Meta's OPT language model, which was announced in early May 2022 and released in late May. Like OpenAI's GPT-3, the largest model has 175 billion parameters, but its training is said to have been significantly more efficient, producing only one-seventh of GPT-3's CO₂ footprint.

Fine-tuned on language tasks for language tasks

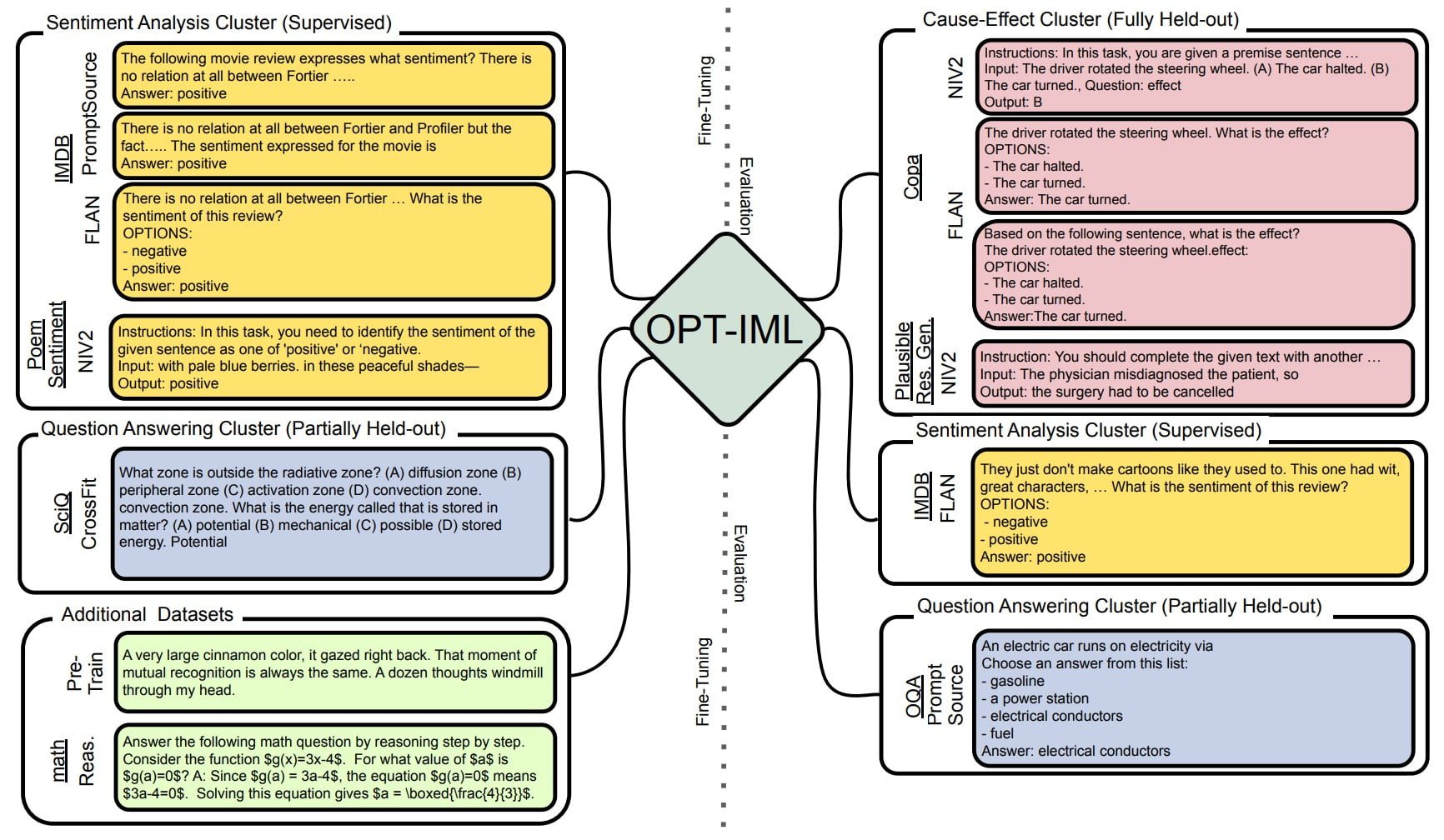

According to Meta, the newly released IML version is fine-tuned to perform better on natural language tasks than the base OPT model. Typical language tasks include answering questions, summarizing text, and translating. For fine-tuning, the researchers used about 2000 natural language tasks drawn from eight existing NLP benchmarks and consolidated into a single benchmark, OPT-IML Bench, which the researchers also provide.

Meta offers OPT-IML in two versions: OPT-IML itself was trained on 1500 tasks, with the remaining 500 tasks withheld for evaluation, while OPT-IML-Max was trained on all 2000 available tasks.

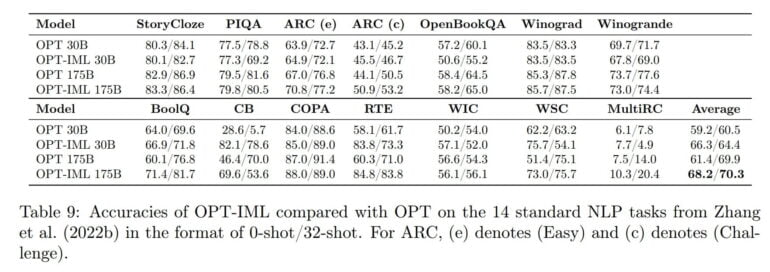

On average, OPT-IML improves over OPT with approximately 6-7% on 0-shot accuracy at both 30B and 175B model scales. For 32-shot accuracy, we see significant improvements on the 30B model, and milder improvements on 175B. While the improvements are significant for certain tasks such as RTE, WSC, BoolQ, ARC, CB, and WiC, our instruction-tuning does not improve performance for other tasks such as StoryCloze, PIQA, Winograd, and Winogrande.

From the paper

In their paper, the researchers also present strategic evaluation splits for their benchmark to evaluate three different types of model generalization abilities: 1) fully supervised performance, 2) performance on unseen tasks from seen task categories, and 3) performance on tasks from completely held-out categories. Using this evaluation suite, they present tradeoffs and recommended practices for many aspects of instruction-tuning.
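The article does not spell out how these splits are built, so the following is only a minimal Python sketch of the general idea, assuming each task carries a single category label. The `Task` class, the `make_splits` function, and all task and category names are hypothetical illustrations, not the researchers' code or OPT-IML Bench's actual taxonomy. Tasks from held-out categories form the third setting, individually held-out tasks whose category still appears in training form the second, and the remaining training tasks are evaluated on their own held-out examples for the first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    # Hypothetical task record: a name plus one category label.
    name: str
    category: str


def make_splits(tasks, held_out_categories, held_out_task_names):
    """Partition tasks into a training pool and three evaluation settings (sketch)."""
    held_out_categories = set(held_out_categories)
    held_out_task_names = set(held_out_task_names)
    train, unseen_in_seen_cat, held_out_cat = [], [], []
    for task in tasks:
        if task.category in held_out_categories:
            # Setting 3: the whole category is never seen during fine-tuning.
            held_out_cat.append(task)
        elif task.name in held_out_task_names:
            # Setting 2 candidate: this task is held out, its category may not be.
            unseen_in_seen_cat.append(task)
        else:
            train.append(task)

    # Setting 2 only makes sense if the category really is seen in training.
    seen_categories = {t.category for t in train}
    for task in unseen_in_seen_cat:
        if task.category not in seen_categories:
            raise ValueError(f"category of {task.name!r} has no training tasks")

    return {
        "train": train,
        # Setting 1: evaluate on held-out examples of the training tasks themselves.
        "fully_supervised": list(train),
        "unseen_tasks_seen_categories": unseen_in_seen_cat,
        "held_out_categories": held_out_cat,
    }


if __name__ == "__main__":
    tasks = [
        Task("boolq", "qa"),
        Task("arc", "qa"),
        Task("xsum", "summarization"),
        Task("wmt_en_de", "translation"),
        Task("sst2", "sentiment"),
    ]
    splits = make_splits(tasks, {"sentiment"}, {"arc"})
    print({k: [t.name for t in v] for k, v in splits.items()})
```

In this toy run, "arc" tests generalization to an unseen task in a familiar category (question answering), while "sst2" tests a category the model never saw at all. The same partition also mirrors the difference between the two released versions: OPT-IML holds tasks out this way, whereas OPT-IML-Max trains on everything.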

No commercial use

Meta is releasing both versions of the 30-billion-parameter model as direct downloads on GitHub. The OPT-IML-175B model is planned to be available on request soon; the request form will also be published on GitHub.

Unlike GPT-3, for example, which is offered via API, OPT-IML may not be used for commercial purposes. The OPT license it is provided under permits non-commercial research use only, is bound to the recipient, and does not allow redistribution.
