With RawNeRF, Google scientists introduce a new tool for image synthesis that can create well-lit 3D scenes from dark 2D photos.

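In the summer of 2020, a research team at Google first introduced "Neural Radiance Fields" (NeRF). Put simply, a neural network learns how opaque each point in a scene is and what color of light it emits in each viewing direction. An image is then rendered by tracing rays from the camera through the scene and accumulating these values along each ray. This allows the system to automatically create a 3D scene from multiple photos of the same scene taken from different viewpoints. Think of it as a kind of automated photogrammetry that reduces manual effort and the number of photos needed while producing flexible, customizable, high-quality 3D scenes.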
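The rendering step described above can be sketched in a few lines. In the original NeRF formulation, the network predicts a density and a color at sample points along each camera ray, and the pixel color is a transmittance-weighted sum of those colors. The sketch below assumes the densities and colors have already been predicted, uses grayscale values to stay short, and `render_ray` is a hypothetical helper for illustration, not code from Google's repository.

```python
import math
from typing import List, Tuple


def render_ray(sigmas: List[float], colors: List[float],
               deltas: List[float]) -> Tuple[float, List[float]]:
    """Composite samples along one ray with NeRF-style volume rendering.

    sigmas: predicted density at each sample, ordered front to back
    colors: predicted grayscale color at each sample
    deltas: distance between consecutive samples
    Returns the rendered pixel value and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light that still reaches the camera
    pixel = 0.0
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # this sample's contribution
        pixel += weight * color
        weights.append(weight)
        transmittance *= 1.0 - alpha            # light left after this segment
    return pixel, weights


# A ray that crosses empty space and then hits a dense, bright surface.
pixel, weights = render_ray(sigmas=[0.0, 0.0, 50.0, 50.0],
                            colors=[0.2, 0.2, 0.9, 0.1],
                            deltas=[0.1, 0.1, 0.1, 0.1])
print(round(pixel, 3))  # prints 0.895
```

The empty samples get zero weight, and the first dense sample hides almost everything behind it, which is how a NeRF "finds" surfaces without storing them explicitly.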

Over the past two years, Google teams have regularly demonstrated new use cases for NeRFs, such as Google Maps' Immersive View or rendering Street View in 3D from photographs and depth data.

RawNeRF processes RAW images

With RawNeRF, AI researcher Ben Mildenhall and his colleagues now present a NeRF that can be trained on raw camera data. Unlike processed JPEGs, this unprocessed sensor data preserves the full dynamic range captured by the camera.

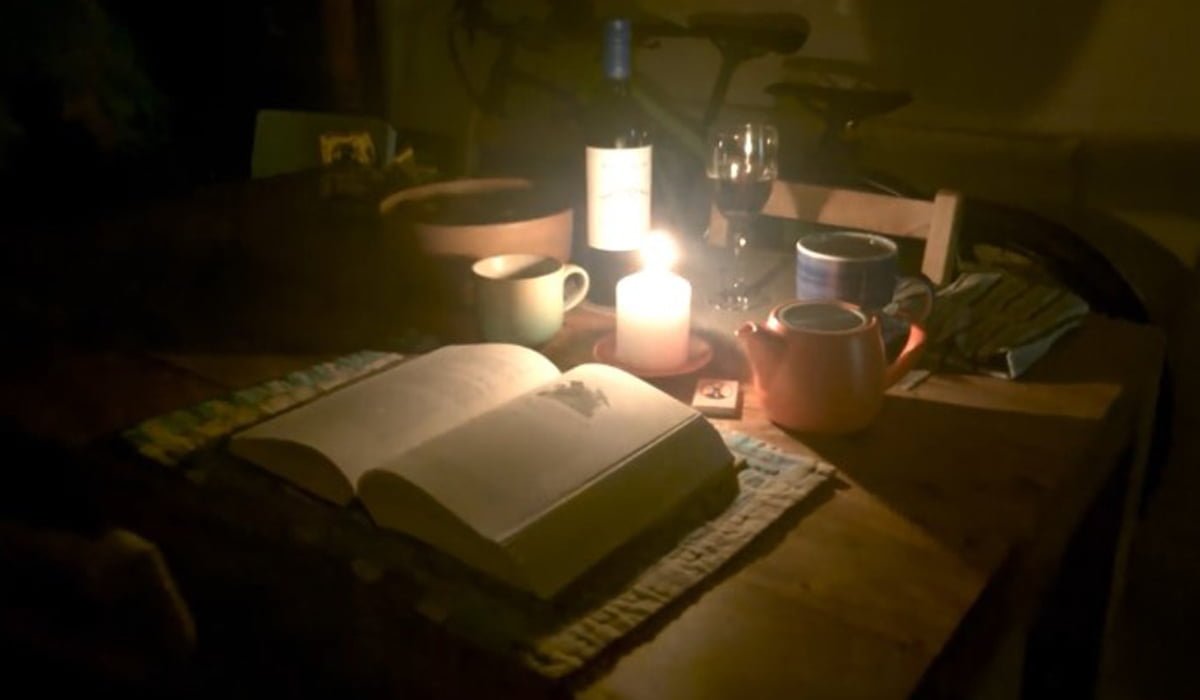

According to the research team, training on raw data allows RawNeRF to "reconstruct scenes from extremely noisy images captured in near-darkness." In addition, the camera viewpoint, focus, exposure, and tone mapping can be changed after the fact.
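Because the scene is reconstructed in linear, high-dynamic-range values, exposure and tone mapping become ordinary post-processing steps on the rendered output. The sketch below covers only those two steps (not viewpoint or focus) and assumes exposure is a multiplication by 2^EV followed by clipping and the standard sRGB transfer curve. RawNeRF's own post-processing may use a different tone-mapping operator, and `apply_exposure` and `srgb_encode` are hypothetical names.

```python
def srgb_encode(linear: float) -> float:
    """Standard sRGB transfer curve for a linear value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear
    return 1.055 * linear ** (1.0 / 2.4) - 0.055


def apply_exposure(linear_hdr: float, ev: float) -> float:
    """Scale a linear HDR value by 2**ev, clip to [0, 1], then tone-map."""
    exposed = linear_hdr * 2.0 ** ev
    clipped = min(max(exposed, 0.0), 1.0)  # information is lost only here
    return srgb_encode(clipped)


# The same rendered HDR value, "developed" at three different exposures.
dark_pixel = 0.01  # made-up linear radiance from a dimly lit region
for ev in (0, 3, 7):
    print(ev, round(apply_exposure(dark_pixel, ev), 3))
```

In this sketch, clipping happens only after the exposure has been chosen, which is the practical benefit of keeping the reconstruction in linear HDR rather than starting from already-clipped 8-bit photos.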

"Training directly on raw data effectively turns RawNeRF into a multi-image denoiser capable of combining information from tens or hundreds of input images," the team writes.
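The "multi-image denoiser" intuition can be illustrated with a toy model: if the same static point appears in N images with independent, zero-mean noise, the least-squares estimate of its value is the average, and the noise in that estimate shrinks roughly as 1/√N. This is a simplified stand-in for fitting one NeRF to many noisy views, not RawNeRF's training procedure, and the pixel value and noise level below are made-up numbers.

```python
import random
import statistics


def estimate_spread(true_value: float, noise_std: float, n_images: int,
                    trials: int, rng: random.Random) -> float:
    """Std. dev. of the mean of n_images noisy observations of one point."""
    estimates = []
    for _ in range(trials):
        observations = [true_value + rng.gauss(0.0, noise_std)
                        for _ in range(n_images)]
        # For a static point and zero-mean noise, the least-squares
        # estimate is simply the average of all observations.
        estimates.append(statistics.fmean(observations))
    return statistics.pstdev(estimates)


rng = random.Random(0)
# Hypothetical near-dark pixel: a small signal buried in comparable noise.
single = estimate_spread(0.02, 0.02, n_images=1, trials=2000, rng=rng)
many = estimate_spread(0.02, 0.02, n_images=100, trials=2000, rng=rng)
print(f"1 image: {single:.4f}  100 images: {many:.4f}")
```

With 100 views the remaining noise is about a tenth of the single-image noise, which is why combining "tens or hundreds of input images" can recover detail that no individual dark frame shows.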

If you're still at CVPR and have the stamina to make it through another poster session, check out RawNeRF tomorrow morning! We exploit the fact that NeRF is surprisingly robust to image noise to reconstruct scenes directly from raw HDR sensor data.

- Ben Mildenhall (@BenMildenhall) June 24, 2022

You can download RawNeRF from GitHub. The same repository also contains Mip-NeRF 360, which renders photorealistic 3D scenes from 360-degree captures, and Ref-NeRF, which improves the rendering of reflective surfaces.