PaLM-SayCan: Google combines a large language model with an everyday robot

In the PaLM-SayCan project, Google is combining current robotics technology with advances in large language models.

Advances in large-scale AI language models have so far mainly reached our digital lives: in text translation, in text and image generation, or behind the scenes, when tech platforms use language AI to moderate content.

In the PaLM-SayCan project, various Google divisions are now combining the company's most advanced large language model to date with an everyday robot that could one day help in the home: an assistant for the real world. But that will take a while yet.

Big language model meets everyday robot

Google unveiled the giant AI language model PaLM in early April, crediting it with "breakthrough capabilities" in language understanding and, specifically, reasoning. PaLM stands for "Pathways Language Model", which makes it a building block of Google's broader Pathways strategy for next-generation AI that can efficiently handle thousands or even millions of tasks.

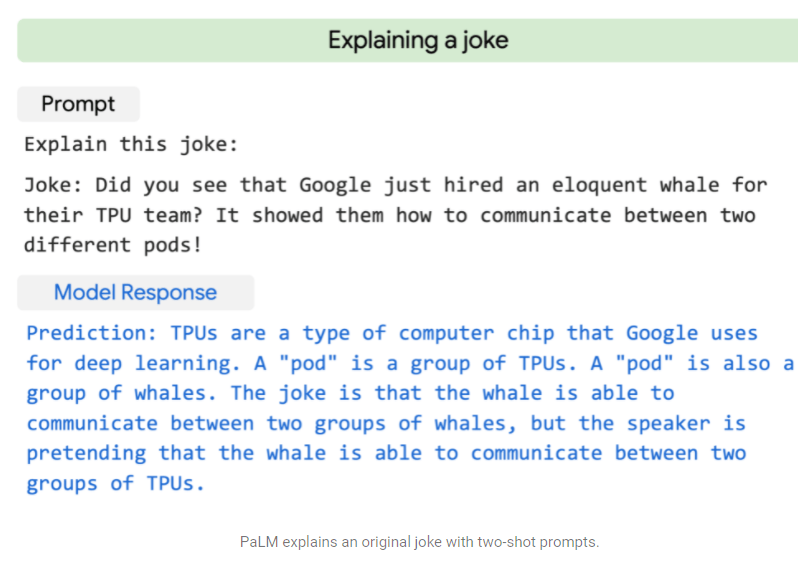

According to Google, PaLM can reason about cause and effect, so it can solve simple text problems and even explain easy jokes. Google achieved this new level of performance primarily through particularly extensive training: with 540 billion parameters, the model is one of the largest of its kind. According to the researchers, the larger the model, the wider the range of language tasks it can handle.

Google has been researching robots in combination with AI more intensively since 2019. At the end of 2021, the company unveiled the household robot that is now being used in the PaLM-SayCan project. It roams Google's offices and can, for example, sort trash, wipe down tables, move chairs and fetch items. It orients itself with the help of computer vision and a radar system.

PaLM can break down and prioritize tasks

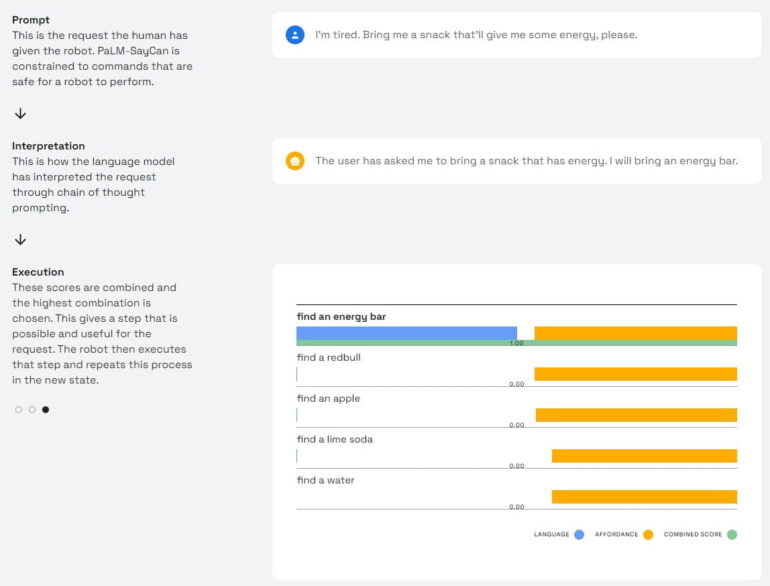

To combine language AI with the everyday robot, the Google research team also uses PaLM's "chain of thought prompting", in which the model spells out its reasoning step by step. The model interprets an instruction and rates how useful each of the robot's available skills would be as the next step toward completing the task. At the same time, learned value functions estimate how likely the robot is to carry out each skill successfully in its current surroundings. The robot executes the skill with the highest combined rating and then repeats the process until the task is done.
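The selection step can be sketched in a few lines of Python. In the published SayCan approach, the language model's rating of each skill ("Say") is multiplied by a value-function estimate of whether the robot can actually perform that skill right now ("Can"). This is a simplified sketch under that assumption: the `Skill` class, the `select_skill` helper and all scores are hypothetical stand-ins, since the real system computes them with PaLM and learned value functions rather than fixed dictionaries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Skill:
    """One of the robot's low-level skills, described in natural language (hypothetical)."""
    description: str


def select_skill(
    skills: list[Skill],
    llm_score: dict[str, float],
    affordance: dict[str, float],
) -> tuple[Skill, float]:
    """Return the skill with the highest combined score and that score.

    llm_score:  stand-in for how useful the language model rates each skill
                for the instruction ("Say").
    affordance: stand-in for the value-function estimate that the skill
                succeeds from the robot's current state ("Can").
    """
    def combined(skill: Skill) -> float:
        # Multiply the two ratings: a skill must be both useful and feasible.
        return llm_score[skill.description] * affordance[skill.description]

    best = max(skills, key=combined)
    return best, combined(best)
```

For example, with the made-up scores below the language model prefers the energy bar, but if the robot cannot find one nearby (low affordance), the combined score steers it to the apple instead:

```python
skills = [Skill("find an energy bar"), Skill("find an apple"), Skill("find a lemonade")]
llm = {"find an energy bar": 0.6, "find an apple": 0.3, "find a lemonade": 0.1}
can = {"find an energy bar": 0.1, "find an apple": 0.9, "find a lemonade": 0.8}
best, score = select_skill(skills, llm, can)  # best.description == "find an apple"
```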

In everyday life, instructions to the robot could be phrased more casually, making conversations more natural. For example, if you ask for an energizing snack, the robot's first choice is an energy bar, but it also has an apple, an energy drink containing aminosulfonic acid (taurine), or a lemonade as alternatives.

A language model-driven Google robot that one day helps with everyday tasks in our homes is a possible future. According to the research team, however, there are still many mechanical and intelligence problems to be solved before then.

The smart PaLM robot will therefore remain a test project in the Google office for the time being. However, the combination of large-scale language models and robotics has "enormous potential" for future robots tailored to human needs, the project team writes.

Google shows more demo scenarios on the official website for PaLM-SayCan.
