Scientists at MIT and other institutions have discovered that large language models often use a simple linear function to retrieve stored knowledge. This finding could help researchers locate and correct false information stored in models.

A linear function is an equation with no exponents: its output changes in direct proportion to its input. In its simplest form, it describes a straight-line relationship between just two variables.

By identifying such relatively simple linear functions for retrieving particular facts, the scientists were able to test a language model's knowledge of specific topics and find out where that knowledge is stored in the model. The researchers also found that the model used the same decoding function to retrieve similar types of facts.

"Even though these models are really complicated, nonlinear functions that are trained on lots of data and are very hard to understand, there are sometimes really simple mechanisms working inside them. This is one instance of that," says Evan Hernandez, PhD student in Electrical Engineering and Computer Science (EECS) and co-author of the study.

60% success rate in retrieving information using simple functions

The researchers first developed a method for estimating the functions and then computed 47 specific functions for different textual relations, such as "capital of a country". For example, for the subject Germany, the function should retrieve the fact Berlin.
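
To make the idea of estimating such a function concrete, here is a minimal sketch in Python. It approximates a nonlinear computation by a linear function of the form W s + b using a first-order (Jacobian-based) approximation around one example input. The function `toy_model`, the two-dimensional vectors, and all helper names are hypothetical stand-ins chosen for illustration; the article does not describe the researchers' actual estimation procedure in detail.

```python
import math

def toy_model(s):
    """Hypothetical stand-in for a model's nonlinear computation on a subject vector."""
    x, y = s
    return [math.tanh(x) + 0.5 * y, x * y]

def jacobian(f, s, eps=1e-6):
    """Finite-difference Jacobian of f at s (rows: outputs, columns: inputs)."""
    base = f(s)
    J = [[0.0] * len(s) for _ in base]
    for j in range(len(s)):
        bumped = list(s)
        bumped[j] += eps
        out = f(bumped)
        for i in range(len(base)):
            J[i][j] = (out[i] - base[i]) / eps
    return J

def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def fit_affine(f, s0):
    """Return (W, b) so that W s + b matches f to first order around s0."""
    W = jacobian(f, s0)
    b = [fi - wi for fi, wi in zip(f(s0), matvec(W, s0))]
    return W, b

def apply_affine(W, b, s):
    """Evaluate the linear function W s + b."""
    return [wx + bi for wx, bi in zip(matvec(W, s), b)]

s0 = [0.3, -0.2]                 # made-up "subject" vector used for the estimate
W, b = fit_affine(toy_model, s0)

nearby = [0.35, -0.25]           # a different, nearby input
approx = apply_affine(W, b, nearby)
exact = toy_model(nearby)
print("linear approximation:", approx)
print("nonlinear model:     ", exact)
```

In this toy setting the linear function reproduces the nonlinear one closely for inputs near `s0`, which is the sense in which a complicated computation can sometimes be summarized by a much simpler one.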

They tested each function by swapping in different subjects (Germany, Norway, England, ...) to see whether it retrieved the correct information. It did so about 60 percent of the time.
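
The test described above can be sketched as follows. All vectors, the matrix `W`, and the offset `b` are made-up toy values, not data from the study; one case is deliberately constructed to fail so that the way a success rate is computed becomes visible. Decoding by nearest vector is also an assumption made for this sketch.

```python
# Toy illustration only: every number below is invented, not taken from a real model.
SUBJECTS = {
    "Germany": [1.0, 0.0],
    "Norway": [0.0, 1.0],
    "England": [1.0, 0.2],
}
OBJECTS = {
    "Berlin": [2.0, 0.0],
    "Oslo": [0.0, 2.0],
    "London": [0.5, 0.5],
}
EXPECTED = {"Germany": "Berlin", "Norway": "Oslo", "England": "London"}

# Hypothetical linear function for the relation "capital of": o = W s + b.
W = [[2.0, 0.0], [0.0, 2.0]]
b = [0.0, 0.0]

def apply_affine(W, b, s):
    """Evaluate the linear function W s + b."""
    return [sum(w * x for w, x in zip(row, s)) + bi for row, bi in zip(W, b)]

def nearest_object(vec, objects):
    """Return the name of the object whose vector is closest to vec."""
    def sq_dist(name):
        return sum((a - c) ** 2 for a, c in zip(vec, objects[name]))
    return min(objects, key=sq_dist)

# Swap in each subject and record what the function retrieves.
predictions = {
    subject: nearest_object(apply_affine(W, b, vec), OBJECTS)
    for subject, vec in SUBJECTS.items()
}

hits = sum(predictions[s] == EXPECTED[s] for s in SUBJECTS)
rate = hits / len(SUBJECTS)
print(predictions)
print(f"success rate: {rate:.0%}")
```

Here the function retrieves the right answer for two of the three toy subjects. The figure of about 60 percent reported by the researchers is the same kind of ratio, computed over their real test cases.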

However, Hernandez says that for some facts the team could not find a linear function, even though the model knows those facts and predicts text consistent with them. This suggests that the model is doing "something more intricate" to store this information. What that might be is a task for future research.

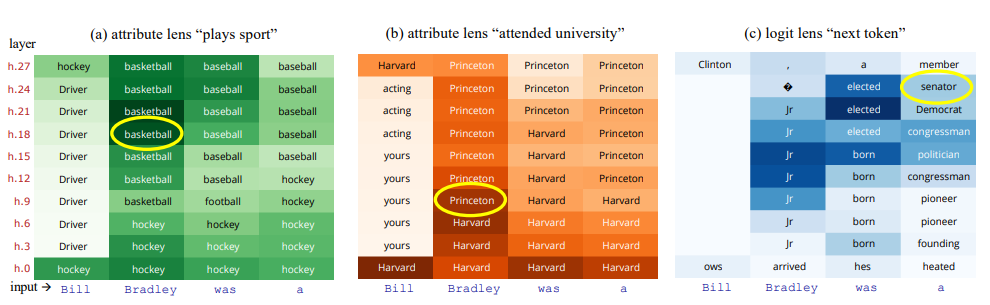

Visualization of stored knowledge through "attribute lenses"

The researchers also used these functions to figure out what a model might believe to be true about different topics. They used this method to create an "attribute lens" that visualizes where specific information about a given relationship is stored in the many layers of the transformer.
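
As a rough illustration of the idea behind such a lens, the sketch below applies one linear function to a hidden state from each layer and records which attribute it decodes at that depth. The per-layer hidden states, the function itself, and the use of a single function for all layers are simplifying assumptions made here, not details confirmed by the article.

```python
# Toy illustration only: hidden states and the linear function are invented.
OBJECTS = {
    "Berlin": [2.0, 0.0],
    "Oslo": [0.0, 2.0],
}

# Hypothetical linear function for the relation "capital of": o = W h + b.
W = [[2.0, 0.0], [0.0, 2.0]]
b = [0.0, 0.0]

# Hypothetical hidden states for the subject "Germany" at layers 0 to 3.
layer_states = [
    [0.1, 0.9],
    [0.4, 0.6],
    [0.8, 0.2],
    [1.0, 0.0],
]

def apply_affine(W, b, h):
    """Evaluate the linear function W h + b."""
    return [sum(w * x for w, x in zip(row, h)) + bi for row, bi in zip(W, b)]

def nearest_object(vec, objects):
    """Return the name of the object whose vector is closest to vec."""
    def sq_dist(name):
        return sum((a - c) ** 2 for a, c in zip(vec, objects[name]))
    return min(objects, key=sq_dist)

# The "lens": what attribute can be read out of each layer's representation?
lens = [nearest_object(apply_affine(W, b, h), OBJECTS) for h in layer_states]

for layer, attribute in enumerate(lens):
    print(f"layer {layer}: {attribute}")

# First layer at which the expected attribute becomes readable.
first_correct = lens.index("Berlin")
print("attribute first readable at layer", first_correct)
```

Reading the output layer by layer shows at what depth the expected attribute first becomes decodable in this toy setup, which is the kind of information the visualization is meant to surface.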

This visualization tool could help scientists and developers to correct stored knowledge and prevent an AI chatbot from reproducing incorrect information.

"We can show that, even though the model may choose to focus on different information when it produces text, it does encode all that information," explains Hernandez.

For their experiments, the researchers used the language models GPT-J, Llama 13B, and GPT-2-XL, which are comparatively small LLMs. A next step would be to test whether the results hold for much larger models.