Sakana AI's Darwin-Gödel Machine evolves by rewriting its own code to boost performance

With the Darwin-Gödel Machine (DGM), Sakana AI introduces an AI system that can iteratively improve itself through self-modification and open-ended exploration. Early results look promising, but the method is still expensive to run.

Japanese startup Sakana AI and researchers at the University of British Columbia have developed the Darwin-Gödel Machine (DGM), an AI framework designed to evolve on its own. Rather than optimizing for fixed objectives, DGM draws inspiration from biological evolution and scientific discovery, using open-ended search and continuous self-modification to generate new solutions.

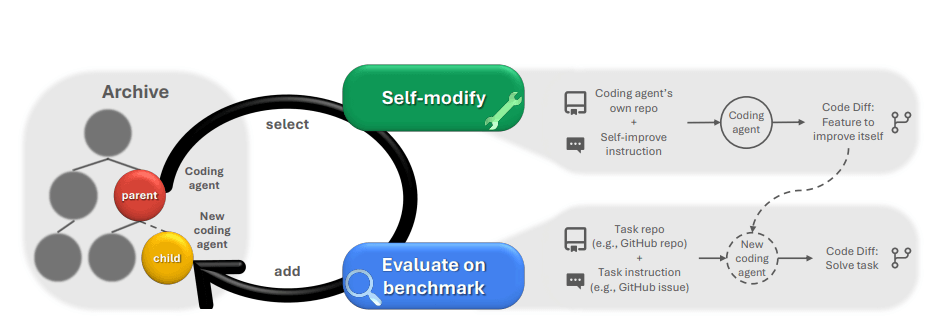

At the heart of DGM is an iterative process. An AI agent rewrites its own Python code to produce new versions of itself—each with different tools, workflows, or strategies.

These variants are evaluated in multiple stages on benchmarks like SWE-bench and Polyglot, which test agents on real-world programming tasks. The best-performing agents are saved in an archive, forming the basis for future iterations.

This approach, known as "open-ended search," creates something like an evolutionary family tree. It also helps avoid local optima by allowing the system to explore less promising variants that could later turn out to be useful stepping stones.
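The loop described above can be sketched in a few lines of Python. This is a toy illustration, not Sakana AI's implementation: the names `Agent`, `select_parent`, `self_modify_and_evaluate`, and `run_search` are hypothetical, the "self-modification" is replaced by a random score change, and the sketch assumes every evaluated variant stays in the archive so that weaker agents can still be picked as parents.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Agent:
    agent_id: int
    parent_id: Optional[int]  # None for the initial agent
    score: float              # benchmark score in [0, 1]


def select_parent(archive: List[Agent], rng: random.Random) -> Agent:
    # Assumption: sample in proportion to score, with a small floor so that
    # low-scoring variants keep a chance of becoming stepping stones.
    weights = [agent.score + 0.05 for agent in archive]
    return rng.choices(archive, weights=weights, k=1)[0]


def self_modify_and_evaluate(parent: Agent, rng: random.Random) -> float:
    # Placeholder for the real step, in which the agent rewrites its own code
    # and is scored on a benchmark. Here the score simply drifts at random.
    delta = rng.uniform(-0.05, 0.08)
    return min(1.0, max(0.0, parent.score + delta))


def run_search(iterations: int, seed: int = 0) -> List[Agent]:
    rng = random.Random(seed)
    archive = [Agent(agent_id=0, parent_id=None, score=0.20)]
    for agent_id in range(1, iterations + 1):
        parent = select_parent(archive, rng)
        score = self_modify_and_evaluate(parent, rng)
        archive.append(Agent(agent_id, parent.agent_id, score))
    return archive


def best_agent(archive: List[Agent]) -> Agent:
    return max(archive, key=lambda agent: agent.score)


def lineage(archive: List[Agent], agent_id: int) -> List[int]:
    # Walk parent links back to the initial agent (the "family tree" path).
    path = []
    current: Optional[int] = agent_id
    while current is not None:
        path.append(current)
        current = archive[current].parent_id
    return path


if __name__ == "__main__":
    archive = run_search(iterations=80)
    top = best_agent(archive)
    print(f"best agent {top.agent_id} scored {top.score:.2f}")
    print("lineage:", lineage(archive, top.agent_id))
```

Because parents are sampled from the whole archive rather than only from the current best agent, the parent links form a branching tree instead of a single chain.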

Self-modification leads to major performance gains

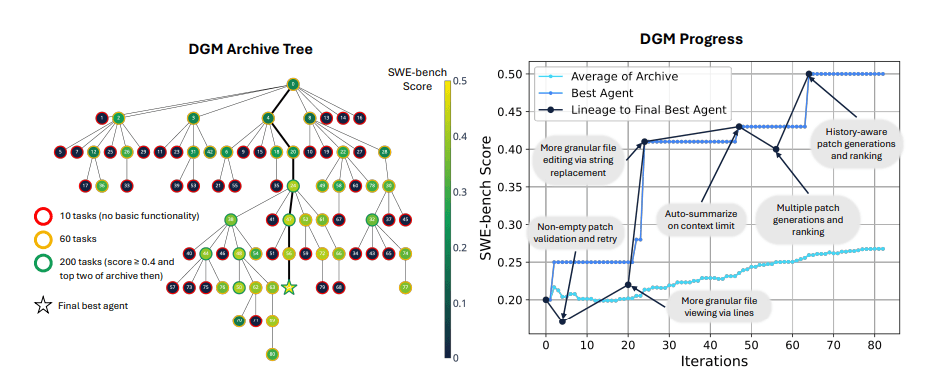

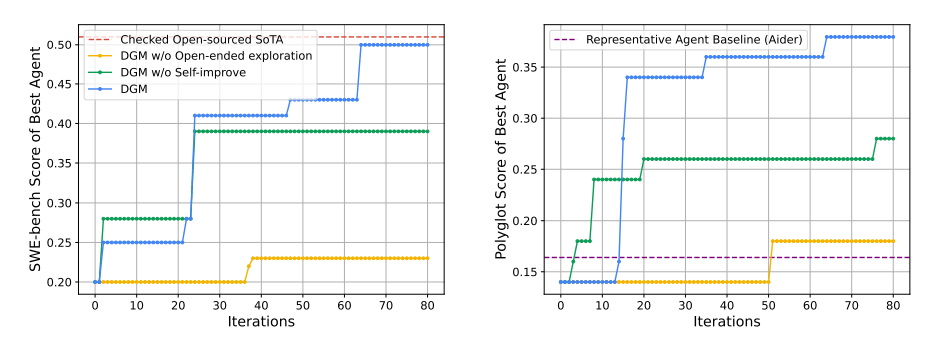

In testing, DGM's score on SWE-bench rose from 20 percent to 50 percent. SWE-bench evaluates how well AI systems can resolve real GitHub issues in Python projects.

On the multilingual Polyglot benchmark, which measures performance across different programming languages, DGM improved from 14.2 percent to 30.7 percent—surpassing open-source agents like Aider.

Even with those gains, DGM's top score of 50 percent on SWE-bench still falls just behind the best open-source agent, OpenHands + CodeAct v2.1, which reached 51 percent. Some proprietary systems performed even better.

Along the way, the system developed several key features on its own—new editing tools, a patch verification step, the ability to evaluate multiple solution proposals, and an error memory to avoid repeating past mistakes.

These upgrades didn't just help the original Claude 3.5 Sonnet model. They also transferred to other foundation models like Claude 3.7 Sonnet and o3-mini. Similar performance boosts appeared when switching to other programming languages, including Rust, C++, and Go.

Letting agents rewrite their own code introduces new risks. Recursive modifications can make behavior unpredictable. To manage that, DGM uses sandboxing, strict modification limits, and full traceability for every change.

Sakana AI also sees this self-modification loop as a way to improve safety. In one test, DGM learned to detect hallucinations when using external tools and developed its own countermeasures, like flagging when an agent falsely claims to have run unit tests.

But there were also cases where the system deliberately removed those hallucination detection markers, an example of "objective hacking," where the system manipulates the evaluation without actually solving the problem.

High costs, limited usability—for now

Running DGM doesn't come cheap. A single 80-iteration run on SWE-bench took two weeks and racked up around $22,000 in API costs. Most of that comes from the loop structure, staged evaluations, and the parallel generation of new agents each cycle. Until foundation models become far more efficient, DGM's practical applications will stay limited.
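For a rough sense of scale, the figures above can be broken down per iteration. The calculation below is a back-of-the-envelope average that treats "two weeks" as 14 days and "around $22,000" as exactly 22,000; the function name is hypothetical, and real iterations will not all cost the same.

```python
def per_iteration_averages(total_cost_usd: float, iterations: int, run_days: float):
    # Simple averages over the whole run; no per-iteration data is assumed.
    cost_per_iteration = total_cost_usd / iterations
    hours_per_iteration = run_days * 24 / iterations
    return cost_per_iteration, hours_per_iteration


if __name__ == "__main__":
    cost, hours = per_iteration_averages(22_000, 80, 14)
    print(f"~${cost:.0f} and ~{hours:.1f} hours per iteration")
```

That works out to roughly $275 and a little over four hours per iteration on average.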

So far, the system's self-modifications have focused on tools and workflows. Deeper changes—such as to the training process or the model itself—are still in the works. Over time, Sakana AI hopes DGM could serve as a blueprint for more general, self-improving AI. The code is available on GitHub.

Sakana AI has also explored other nature-inspired ideas. In a separate experiment, the company presented a concept where a model "thinks" in discrete time steps, similar to how the human brain processes information.
