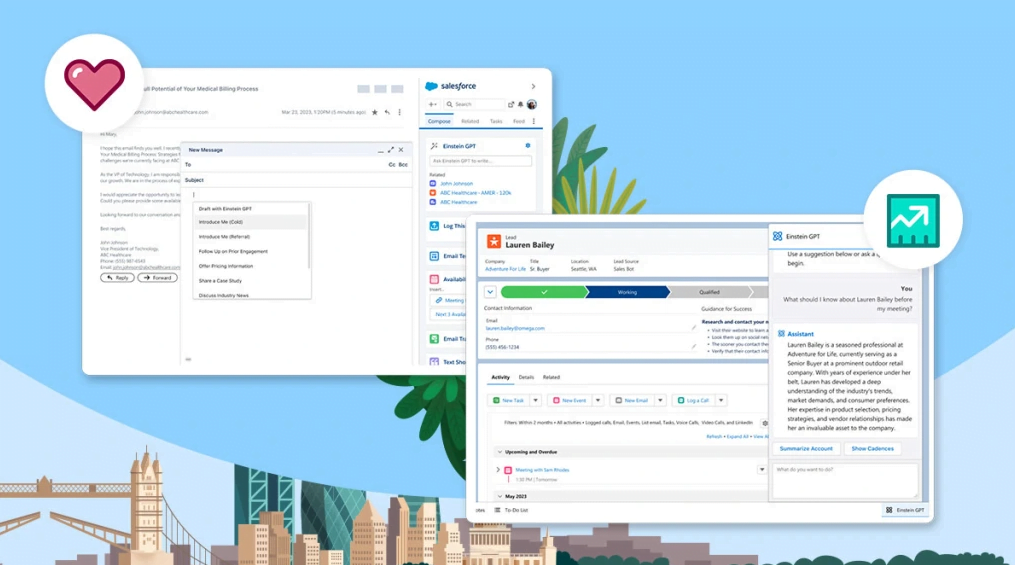

Salesforce is integrating a wide range of AI services into its products. This requires a new policy.

In the Artificial Intelligence Acceptable Use Policy, Salesforce clarifies that its AI systems cannot take responsibility for decisions that may have legal or other major implications.

Use of Salesforce's AI products complies with the policy only if the final decision is made by a human, the company says. The customer must consider additional information on top of the AI's recommendation.

Generative AI cannot replace licensed professionals

The policy also states that generative AI should not replace licensed advice, such as financial or legal counsel, and should not generate individualized medical advice, treatment, or diagnosis.

Supporting uses of AI, such as summarizing longer texts, would be permitted. Here too, a qualified professional must review and approve the generated content.

Salesforce also prohibits the use of its generative AI for discrimination, abuse, or deception, as well as the use of its systems for political campaigns and weapons development. The weapons restriction includes, for example, the marketing of weapons.

Customers demand AI transparency

Salesforce also released the latest edition of its international State of the Connected Customer study. It shows that many customers doubt companies will use AI ethically: only 57 percent trust them to do so.

According to the study, 68 percent of customers say advances in AI are challenging companies to be even more transparent in their communications. For example, 89 percent want to know whether they are talking to a human or an AI in customer support, and 80 percent believe it is important for AI-generated content to be reviewed and approved by humans.

The data was collected in a double-blind survey of 11,000 consumers and 3,300 business buyers in numerous countries from May 3 to July 14, 2023.

Salesforce offers specialized language models for marketing, commerce, service, and sales. The company is also an investor in Cohere, a startup focused on AI for business customers, and trains its own AI models, such as the code model CodeT5.