Skyfall-GS turns satellite images into walkable 3D cities

A new AI system called Skyfall-GS can generate walkable 3D city models using only standard satellite images. Unlike older methods that require expensive 3D scanners or fleets of camera cars, Skyfall-GS builds cityscapes entirely from aerial views.

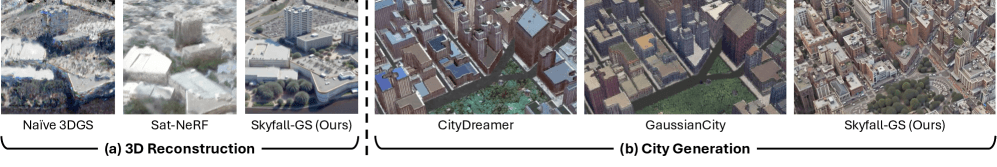

Typical 3D city models built from satellite images share one big limitation: because satellites look down from high above, the models capture mostly rooftops. Facades, street-level features, and side details are missing, so buildings come out blurry, distorted, or blocky when seen from the ground.

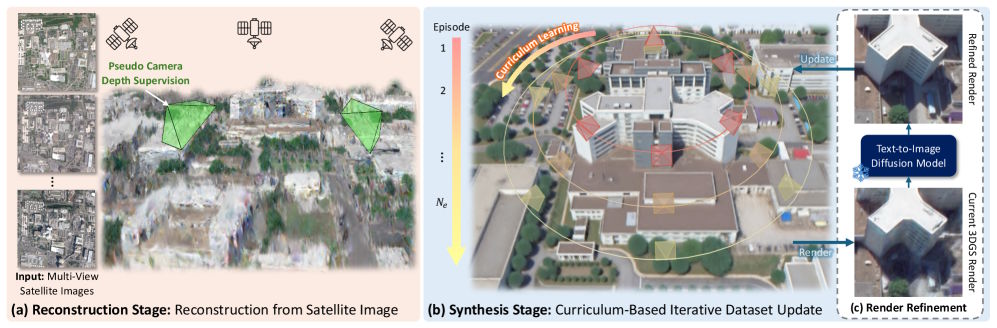

Skyfall-GS tackles this with a two-step process. First, it builds a rough 3D reconstruction of the scene from the satellite images. Then an AI model fills in missing details such as facades and street-level textures, much like an image generator completing an unfinished picture.

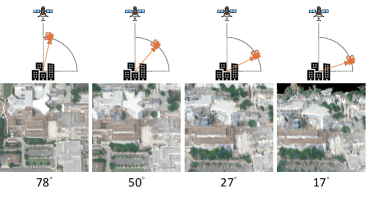

The name “Skyfall” describes the learning strategy: the system starts with high-altitude views and works step-by-step down to street level, refining the model as if a camera were falling from the sky.

How Skyfall-GS builds a city

Skyfall-GS combines two AI techniques. It builds the basic 3D structure with 3D Gaussian splatting, which represents a scene as a large cloud of small, semi-transparent, colored 3D blobs (Gaussians) that can be rendered very quickly. It then uses diffusion models, the same kind that power popular image generators, to fill in realistic details.
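The core rendering idea behind Gaussian splatting fits in a few lines. The sketch below is a simplified illustration, not Skyfall-GS code: it assumes the Gaussians have already been projected onto the image, treats each one as a round blob instead of using a full covariance matrix, and shows how one pixel's color comes from blending the depth-sorted blobs front to back. `Splat2D` and `shade_pixel` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat2D:
    """A Gaussian already projected onto the image plane (simplified to be isotropic)."""
    cx: float                            # center x, in pixels
    cy: float                            # center y, in pixels
    sigma: float                         # spread in pixels (real 3DGS uses a 2x2 covariance)
    opacity: float                       # peak opacity in [0, 1]
    color: tuple[float, float, float]    # RGB in [0, 1]
    depth: float                         # distance from the camera, used for sorting


def shade_pixel(px: float, py: float, splats: list[Splat2D]) -> tuple[float, float, float]:
    """Front-to-back alpha compositing of depth-sorted splats at a single pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by closer splats
    for s in sorted(splats, key=lambda s: s.depth):
        d2 = (px - s.cx) ** 2 + (py - s.cy) ** 2
        # Opacity falls off with a Gaussian profile away from the splat's center.
        alpha = s.opacity * math.exp(-0.5 * d2 / s.sigma ** 2)
        for k in range(3):
            color[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # stop once the pixel is effectively opaque
            break
    return (color[0], color[1], color[2])


front = Splat2D(0.0, 0.0, 2.0, 0.6, (1.0, 0.0, 0.0), depth=1.0)  # semi-transparent red
back = Splat2D(0.0, 0.0, 2.0, 1.0, (0.0, 0.0, 1.0), depth=2.0)   # opaque blue
print(shade_pixel(0.0, 0.0, [back, front]))
```

Because the red blob is closer and only 60 percent opaque, the pixel ends up mostly red with some blue showing through. Rendering a full image repeats this blend for every pixel, which is cheap enough to run in real time.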

The refinement runs in five rounds. With each round, the virtual camera's viewing angle gets flatter, dropping from 85 degrees (looking almost straight down) to 45 degrees. In every round, the system generates 54 different views and uses text prompts to guide the AI's improvements.
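A camera schedule like this can be sketched as follows. This is a hypothetical illustration, not the project's code: it assumes the angles are elevations above the horizon, that they are spaced evenly across the five rounds (85, 75, 65, 55, 45 degrees), and that the 54 views sit at evenly spaced compass directions on a ring around the scene. The radius of 500 units and the function names are made up.

```python
import math


def elevation_schedule(start_deg: float = 85.0, end_deg: float = 45.0, rounds: int = 5) -> list[float]:
    """Evenly spaced elevation angles from start to end, inclusive (linear spacing is an assumption)."""
    step = (end_deg - start_deg) / (rounds - 1)
    return [start_deg + i * step for i in range(rounds)]


def orbit_cameras(elevation_deg: float, n_views: int = 54, radius: float = 500.0,
                  center: tuple[float, float, float] = (0.0, 0.0, 0.0)) -> list[tuple[float, float, float]]:
    """Place n_views camera positions on a ring, all at the given elevation above the scene center."""
    el = math.radians(elevation_deg)
    cx, cy, cz = center
    cams = []
    for i in range(n_views):
        az = 2 * math.pi * i / n_views            # evenly spaced azimuths around the scene
        x = cx + radius * math.cos(el) * math.cos(az)
        y = cy + radius * math.cos(el) * math.sin(az)
        z = cz + radius * math.sin(el)            # height above the scene center
        cams.append((x, y, z))
    return cams


for rnd, el in enumerate(elevation_schedule(), start=1):
    cams = orbit_cameras(el)
    print(f"round {rnd}: elevation {el:.0f} deg, {len(cams)} views, camera height {cams[0][2]:.0f}")
```

Each later round puts the cameras lower and farther out to the side, so the renders show progressively more of the building facades that the overhead satellite views barely capture.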

These prompts tell the AI what to fix, turning a “satellite image of an urban area with distorted areas and blurring artifacts” into a “clear satellite image with sharp buildings, smooth edges, and natural lighting.”
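The overall loop (render views, clean them up with a prompt-guided diffusion model, then refit the 3D scene to the cleaned images) can be outlined as below. This is a structural sketch only: every function is a hypothetical placeholder, the prompt strings are the ones quoted in the article rather than confirmed source code, and the evenly spaced elevations are an assumption.

```python
SOURCE_PROMPT = "satellite image of an urban area with distorted areas and blurring artifacts"
TARGET_PROMPT = "clear satellite image with sharp buildings, smooth edges, and natural lighting"


def render_views(scene: dict, elevation_deg: float, n_views: int) -> list[str]:
    # Placeholder: a real system would render the Gaussian scene from each camera pose.
    return [f"render@{elevation_deg:.0f}deg#{i}" for i in range(n_views)]


def refine_with_diffusion(image: str, source_prompt: str, target_prompt: str) -> str:
    # Placeholder for a text-guided diffusion edit that moves the image
    # from the "source" description toward the "target" description.
    return image + "+refined"


def fit_scene(scene: dict, refined_images: list[str]) -> dict:
    # Placeholder: stores the refined images as training targets; a real system
    # would then optimize the Gaussians to match them.
    scene["training_images"].extend(refined_images)
    return scene


def skyfall_refine(scene: dict, elevations=(85, 75, 65, 55, 45), n_views: int = 54) -> dict:
    for elevation in elevations:  # start high, then lower the camera each round
        renders = render_views(scene, elevation, n_views)
        refined = [refine_with_diffusion(img, SOURCE_PROMPT, TARGET_PROMPT) for img in renders]
        scene = fit_scene(scene, refined)
    return scene


scene = skyfall_refine({"training_images": []})
print(len(scene["training_images"]))  # 270 (5 rounds x 54 views)
```

Refitting after every round matters: each lower-angle round starts from a scene that the previous, higher-angle round has already cleaned up.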

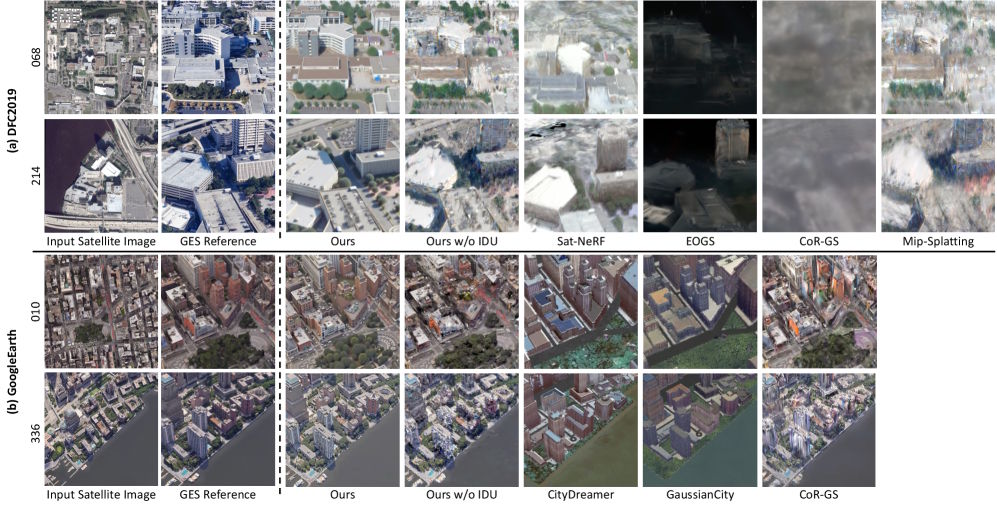

Outperforming older city modeling methods

Researchers tested Skyfall-GS with real satellite images from Jacksonville, Florida, and New York City. The system consistently beat previous methods, creating more realistic buildings and cleaner textures.

In a user study with 89 participants, Skyfall-GS was rated best in 97 percent of comparisons for both geometry and overall quality.

It’s also fast: Skyfall-GS runs at 11 frames per second on a standard graphics card and up to 40 frames per second on a MacBook Air. For comparison, CityDreamer, a previous system, manages just 0.18 frames per second on more expensive hardware.
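Converting the frame rates into time per frame makes the gap concrete. Because the two systems were measured on different hardware, the ratio is only a rough indication, not a controlled comparison:

$$
\frac{11\ \text{fps}}{0.18\ \text{fps}} \approx 61,
\qquad
\frac{1}{11\ \text{fps}} \approx 91\ \text{ms per frame}
\quad \text{vs.} \quad
\frac{1}{0.18\ \text{fps}} \approx 5.6\ \text{s per frame}
$$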

What this could mean for games, film, and robotics

Skyfall-GS could be useful in a range of areas. Game developers might use it to create city environments more efficiently. Film productions could generate digital backgrounds, and robotics teams may find it helpful for simulating real-world spaces.

There’s a large amount of satellite data available: for example, WorldView-3 collects around 680,000 square kilometers per day at up to 31 centimeters per pixel. This could make large-scale automated 3D modeling possible.
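A quick back-of-envelope calculation shows the scale of those WorldView-3 figures. It assumes the entire daily area is captured at the best-case 31 centimeters per pixel, so the result is an upper bound; `pixels_per_day` is a hypothetical helper.

```python
KM2_TO_M2 = 1_000_000  # 1 square kilometer = 1,000,000 square meters


def pixels_per_day(area_km2: float, gsd_m: float) -> float:
    """Number of square pixels with side gsd_m (meters) needed to cover area_km2."""
    area_m2 = area_km2 * KM2_TO_M2
    pixel_area_m2 = gsd_m ** 2
    return area_m2 / pixel_area_m2


px = pixels_per_day(680_000, 0.31)
print(f"{px:.2e} pixels per day")  # 7.08e+12
```

That is roughly seven trillion pixels a day from a single satellite, far more imagery than could ever be turned into 3D models by hand.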

The researchers acknowledge that Skyfall-GS still needs a lot of computing power and doesn’t always handle highly detailed street scenes well. They aim to improve performance and scalability in future versions. The code is available as open source on GitHub, and demos can be found on the project website.
