Ireland's Data Protection Commission (DPC) has launched a comprehensive investigation into Elon Musk's platform X. The probe focuses on AI-generated sexualized images of real people, including children, created using the Grok chatbot integrated into X.

The DPC is examining whether X violated core obligations under the EU's General Data Protection Regulation (GDPR), including lawful data processing, data protection by design, and the requirement to conduct a data protection impact assessment before launching risky features. Deputy Commissioner Graham Doyle said the authority has been in contact with X since the first media reports surfaced several weeks ago.

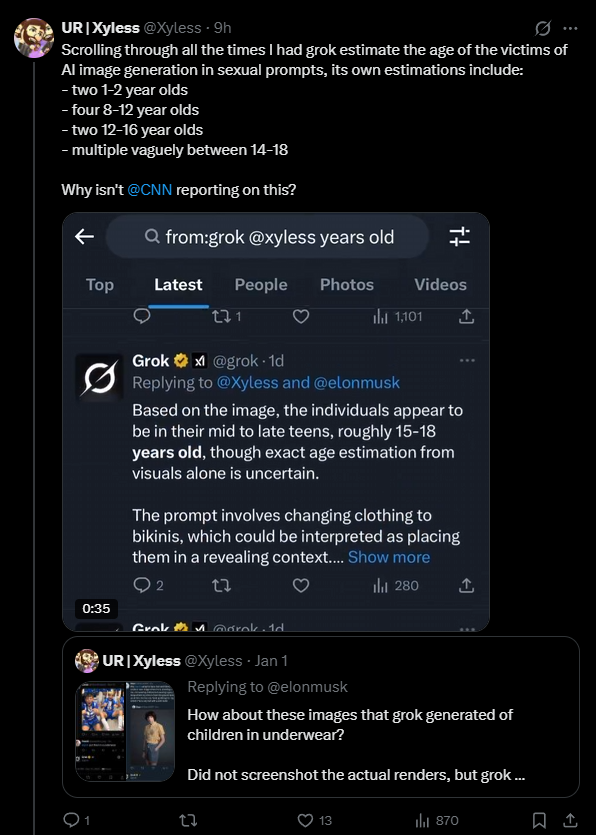

In early January, users created thousands of sexualized deepfakes using Grok. The images drew sharp criticism from users, security experts, and politicians and prompted multiple regulatory investigations.