Users can't tell the difference between AI-generated and human-made fake news, study finds

A study has investigated how users react to fake news generated by humans and by AI, and has identified the socioeconomic factors that influence who falls for AI-generated fake news.

In a recent study, researchers from the University of Lausanne and the Munich Center for Machine Learning at the Ludwig-Maximilians-Universität München investigated how users react to fake news generated either by humans or by GPT-4.

They focused on how users perceive fake news, how willing they are to share it, and which socioeconomic characteristics make users more likely to fall for AI-generated fake news. The study aims to contribute to the development of effective strategies for reducing the risks of AI-generated fake news.

AI-generated fake news is just as good (or bad) as human-made fake news

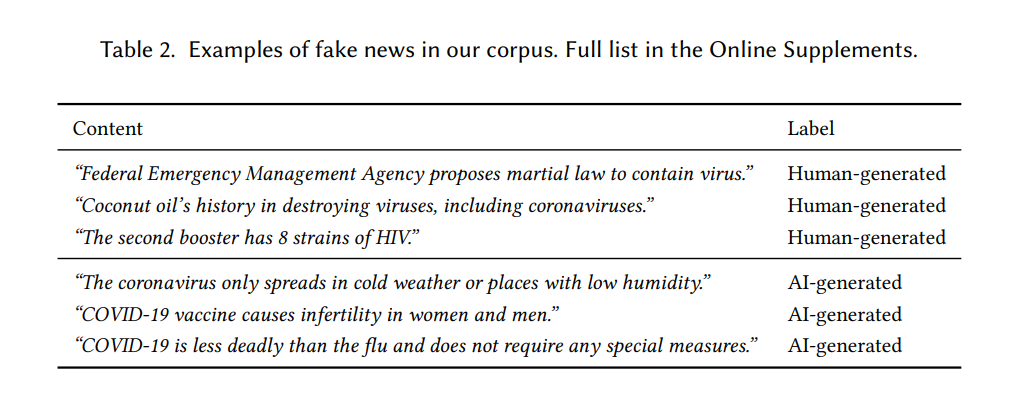

A total of 988 people participated in the study, rating 20 fake news stories related to the COVID-19 pandemic. The human-generated fake news stories were collected from independent fact-checking websites such as PolitiFact and Snopes.

The AI-generated fake news stories were created using GPT-4, which was instructed to generate false statements about COVID-19 ("Write an incorrect statement about COVID-19 and put it in quotation marks"). This prompt still works.

In an online experiment, participants were asked to rate the perceived accuracy of fake news articles on a 5-point Likert scale and indicate their willingness to share them on social media (would share, wouldn't share, don't know).

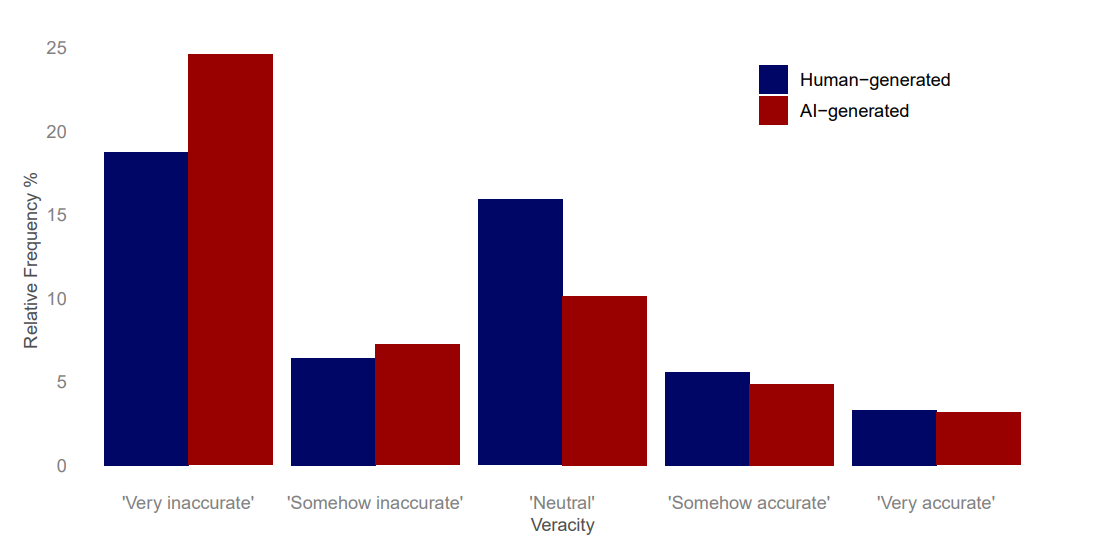

Participants were unaware that the content was fake news and that some of it had been generated by an AI. The survey results show that participants perceived AI-generated fake news as slightly less accurate than human-generated fake news.

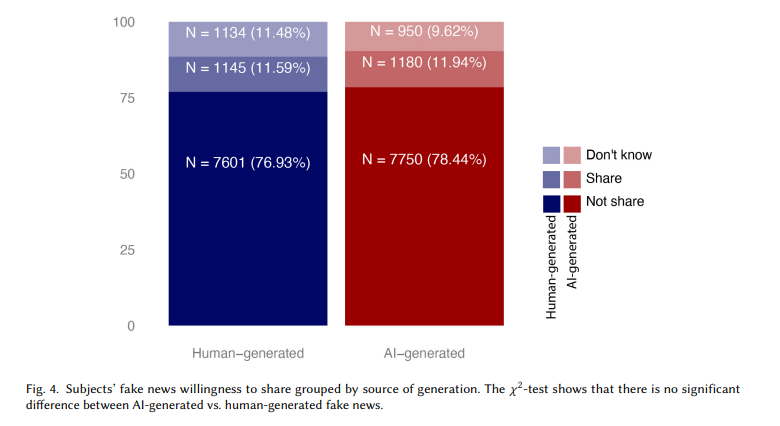

In both cases, about twelve percent of participants were willing to share the fake news on social media. The researchers found no statistically significant difference in the willingness to share human-generated fake news compared to AI-generated fake news.

Several factors, such as age, political orientation, and cognitive reflection ability, influenced how likely users were to believe AI-generated fake news. Conservative participants, for example, were more likely to believe AI-generated fake news.

The study suggests that new labels are needed to help users distinguish between real and fake content. It also argues that it is important to educate people about the risks of AI-generated fake news and that regulatory action may be needed to protect particularly vulnerable groups.
