xAI releases preview of Grok-1.5 Vision multimodal AI model with improved understanding of the physical world.

Elon Musk's AI startup xAI has released a preview of Grok-1.5 Vision, its first multimodal AI model, which the company says has a better understanding of the physical world than its competitors.

In addition to standard text capabilities, Grok-1.5V can process a wide range of visual information, including documents, diagrams, charts, screenshots, and photos. The model will soon be available to early testers and existing Grok users.

xAI claims that Grok-1.5V is competitive with today's leading multimodal models across several domains, from multidisciplinary reasoning to understanding documents, scientific diagrams, charts, screenshots, and photos.

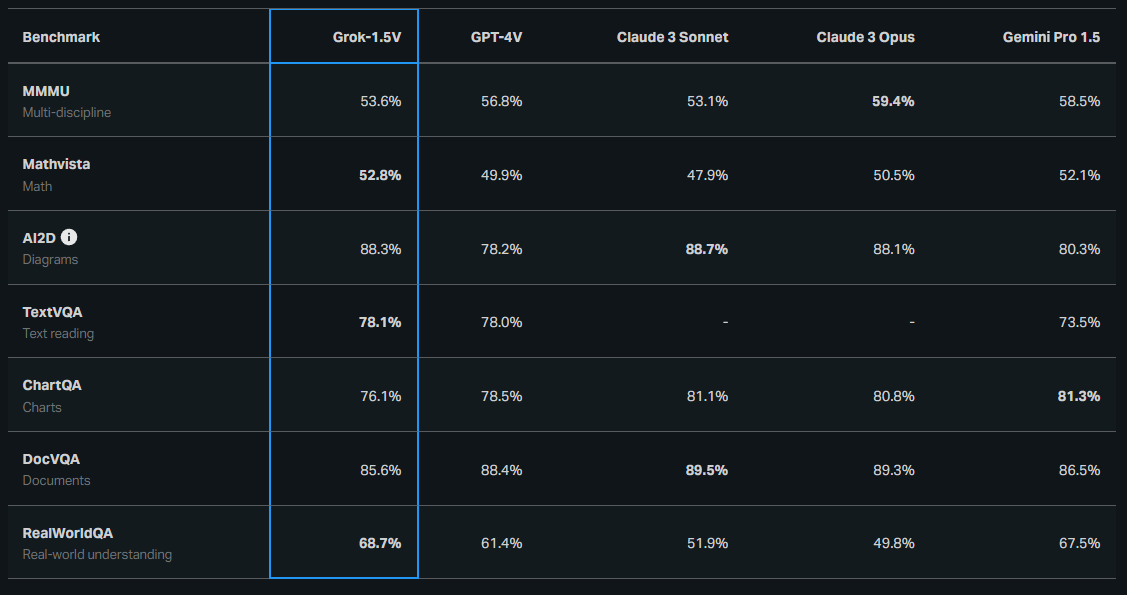

The company has published a table comparing Grok-1.5V's benchmark scores with those of competing models, including OpenAI's GPT-4V, Anthropic's Claude 3, and Google's Gemini Pro 1.5. By xAI's figures, Grok matched or beat its rivals on most benchmarks.

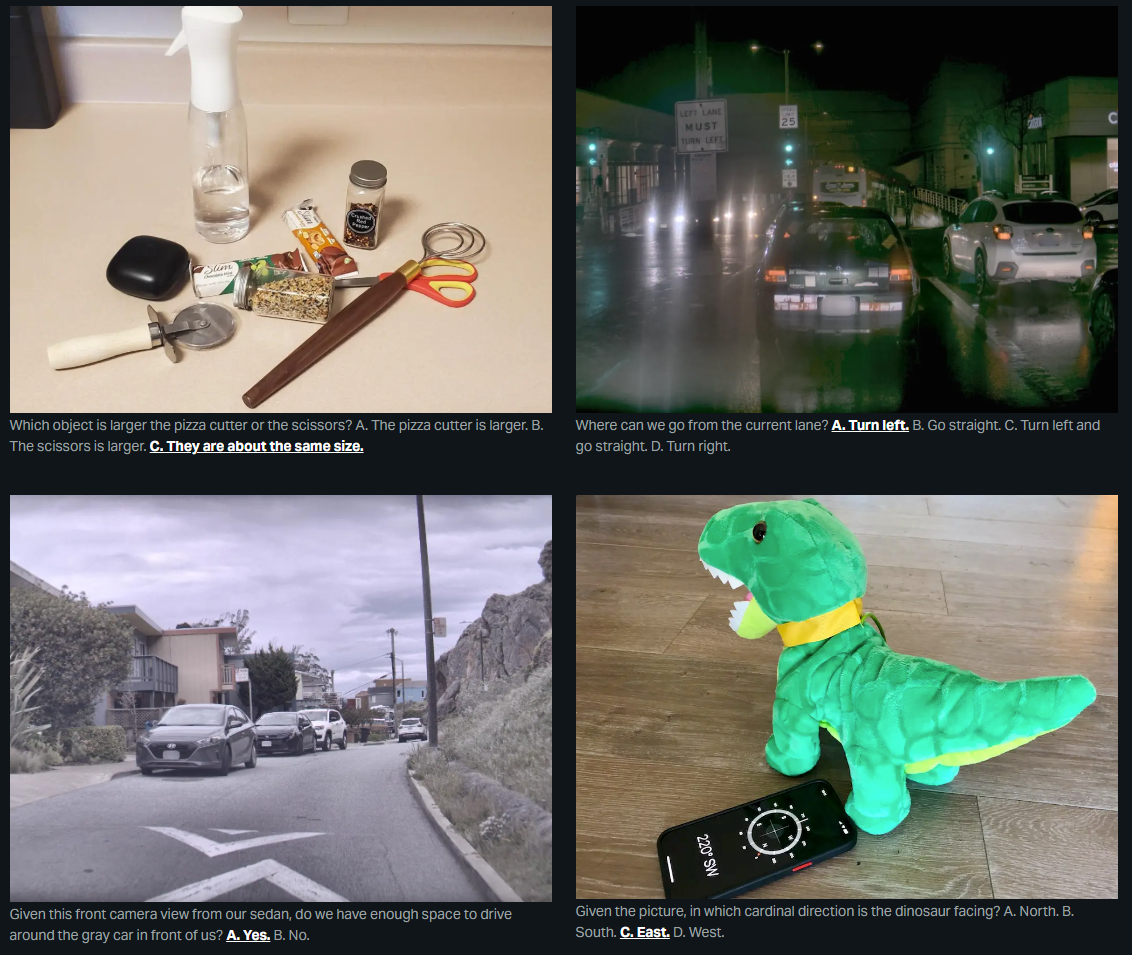

xAI highlights Grok's understanding of the physical world, as measured by its newly introduced RealWorldQA benchmark, which tests real-world spatial understanding. In zero-shot testing (no worked examples included in the prompt), Grok outperformed the competing models.
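To make the zero-shot distinction concrete, here is a minimal Python sketch of how a text prompt differs between zero-shot and few-shot evaluation. The `build_prompt` helper and its Question/Answer template are hypothetical illustrations, not xAI's evaluation harness, and the image that a multimodal model would also receive is omitted.

```python
from typing import Optional


def build_prompt(question: str, examples: Optional[list[tuple[str, str]]] = None) -> str:
    """Build a text prompt (hypothetical template); with no examples it is zero-shot."""
    parts = []
    # Few-shot: prepend solved (question, answer) pairs as demonstrations.
    for q, a in examples or []:
        parts.append(f"Question: {q}\nAnswer: {a}")
    # The question being evaluated always comes last, with its answer left blank.
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


# Zero-shot: the model sees only the question itself.
zero_shot = build_prompt("Which car is closer to the camera?")

# Few-shot: the same question, preceded by one worked example.
few_shot = build_prompt(
    "Which car is closer to the camera?",
    examples=[("Is the traffic light red?", "Yes")],
)
```

A zero-shot score therefore reflects what the model can do without being shown the expected answer format, which is why it is a stricter test than a few-shot score.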

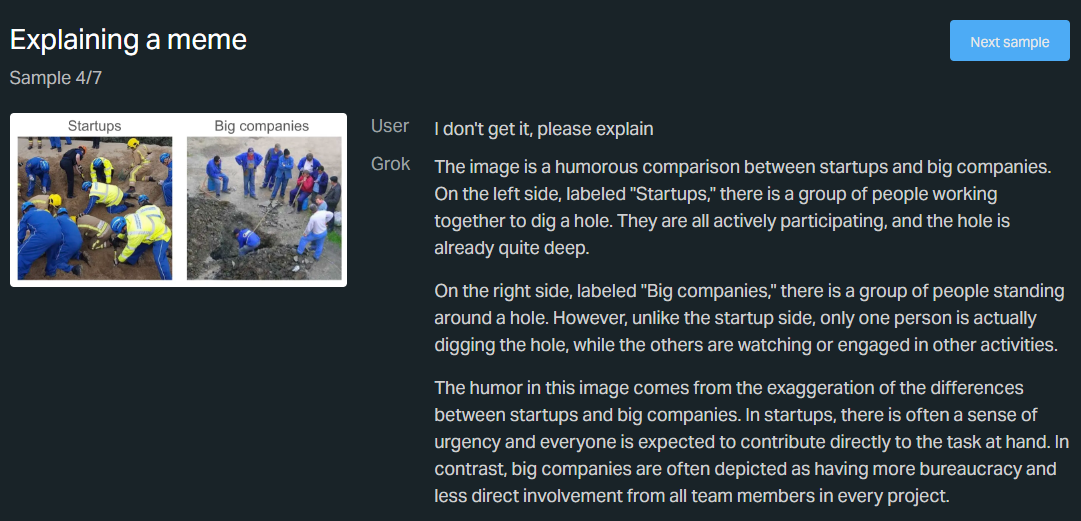

One example shows Grok generating working Python code from a flowchart that describes the logic of a guessing game, demonstrating its ability to understand diagrams and translate them into executable code. Another shows Grok-1.5V explaining a meme.
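xAI's flowchart is not reproduced here, so the following is only a hypothetical sketch of the kind of program such a flowchart typically describes: a guess-the-number loop with one comparison node and an attempt limit. The function names, feedback strings, and the five-attempt cap are assumptions for illustration, not the code Grok produced.

```python
def check_guess(secret: int, guess: int) -> str:
    """Decision node: compare the guess with the secret number."""
    if guess < secret:
        return "too low"
    if guess > secret:
        return "too high"
    return "correct"


def play(secret: int, guesses: list[int], max_attempts: int = 5) -> list[str]:
    """Loop node: process guesses until one is correct or attempts run out.

    max_attempts is a hypothetical limit chosen for this sketch.
    """
    feedback = []
    for guess in guesses[:max_attempts]:
        result = check_guess(secret, guess)
        feedback.append(result)
        if result == "correct":
            break  # exit branch of the flowchart
    return feedback
```

For example, `play(42, [50, 25, 42])` returns `["too high", "too low", "correct"]`. Taking the guesses as a list instead of calling `input()` keeps the game logic testable.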

RealWorldQA: Visual benchmark for the physical world

xAI argues that understanding the physical world is essential for building AI assistants that are useful in the real world. To this end, the company developed the RealWorldQA benchmark, which evaluates the spatial reasoning capabilities of multimodal models. Many of its examples are easy for humans but often challenging for AI models.

The initial release of RealWorldQA consists of more than 700 images, each paired with a question and an easily verifiable answer. The anonymized images were taken from vehicles, among other real-world sources. xAI is making the dataset available for download under the CC BY-ND 4.0 license.
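Because every image comes with an easily verifiable answer, a model's score can be computed by comparing its answers with the references. The Python sketch below assumes a simple exact-match metric after light normalization; the `Item` fields and the `normalize` rules are hypothetical and are not xAI's published scoring procedure or the dataset's actual schema.

```python
from dataclasses import dataclass


@dataclass
class Item:
    question: str
    answer: str  # reference answer (hypothetical field name)


def normalize(text: str) -> str:
    """Lowercase, trim trailing periods, and collapse whitespace (assumed rules)."""
    return " ".join(text.strip().lower().rstrip(".").split())


def exact_match_accuracy(items: list[Item], predictions: list[str]) -> float:
    """Fraction of predictions that match the reference answer after normalization."""
    if len(items) != len(predictions):
        raise ValueError("need exactly one prediction per item")
    if not items:
        return 0.0
    correct = sum(
        normalize(pred) == normalize(item.answer)
        for item, pred in zip(items, predictions)
    )
    return correct / len(items)


items = [
    Item("Which car is closer, A or B?", "A"),
    Item("How many lanes are visible?", "2"),
    Item("Is the traffic light green?", "Yes"),
]
accuracy = exact_match_accuracy(items, ["a", "3", "Yes."])
```

Here two of the three predictions match, so `accuracy` is 2/3. Short, unambiguous answers are what make this kind of automatic check practical.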

xAI sees advances in multimodal understanding and generation as important steps toward a useful artificial general intelligence (AGI) that can understand the universe, which is xAI's self-proclaimed mission.

The company expects significant progress in both areas over the coming months across modalities such as images, audio, and video. xAI reportedly plans to launch Grok-2 in May; Musk claims it will outperform GPT-4.