15% of companies ban code AI, but 99% of developers use it anyway

A new global study from cloud security firm Checkmarx reveals that nearly all developers are using AI coding tools, even at companies that have officially banned them.

The study found that 99% of development teams use AI coding tools, although 15% of surveyed companies have explicitly prohibited their use. This gap between official policy and actual practice in development departments underscores how difficult it is to control generative AI use.

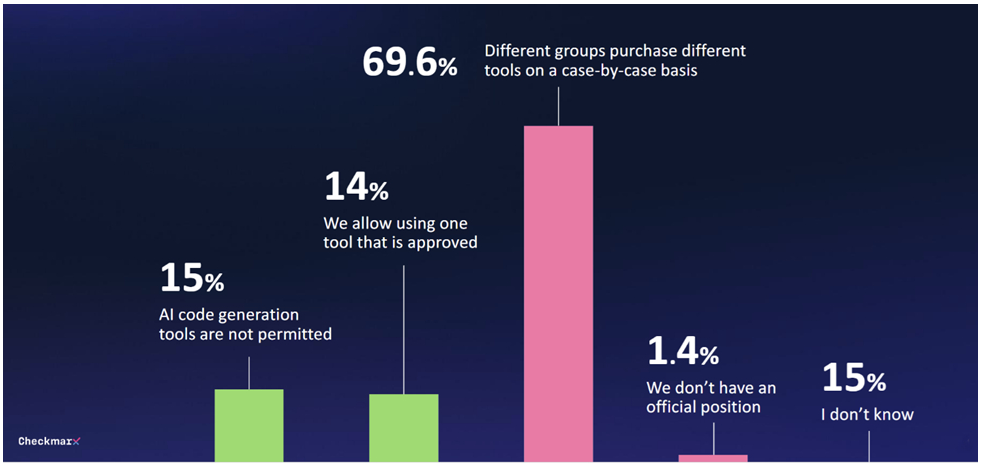

Only 29% of companies have established any form of governance for generative AI tools. In 70% of cases, there's no central strategy, with purchasing decisions made on an ad-hoc basis by individual departments.

Security concerns are growing, with 80% of respondents worried about potential threats from developers using AI. Specifically, 60% express concern about AI issues such as hallucinations.

Despite these worries, there's still interest in AI's potential: 47% of respondents were open to allowing AI to make unsupervised code changes, and only 6% said they wouldn't trust AI with security measures in their software environment.

"The responses of these global CISOs expose the reality that developers are using AI for application development even though it can’t reliably create secure code, which means that security teams are being hit with a flood of new, vulnerable code to manage," said Kobi Tzruya, chief product officer at Checkmarx.

Microsoft's Work Trend Index recently reported similar findings, showing that many employees use their own AI tools when their employer provides none. Often, they don't disclose this use, which hinders the systematic integration of generative AI into business processes.
