Enterprises struggle to implement generative AI, study finds

A new study from Intel-owned cnvrg.io shows that despite the hype, the adoption of generative AI based on large language models is still in its infancy.

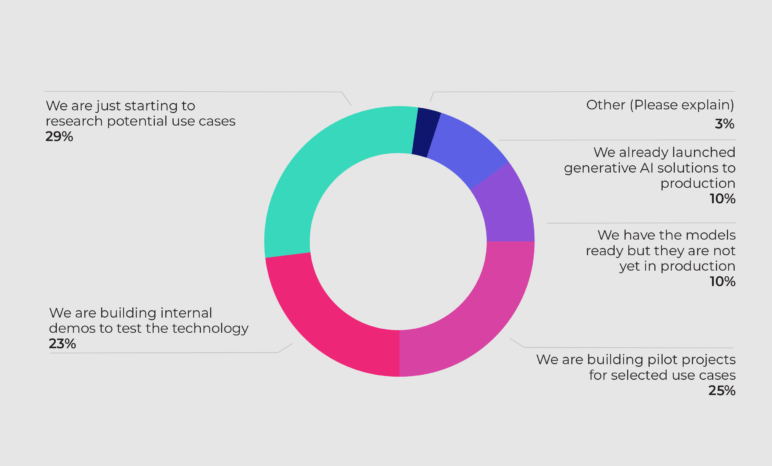

According to the survey of 434 data scientists, developers, and IT managers, only ten percent of companies have successfully integrated generative AI solutions into their business processes. The remaining 90 percent are still in the experimental phase.

A combined 56 percent of respondents rate generative AI as only moderately important (32 percent), somewhat important (16 percent), or not important (8 percent). The current hype around generative AI contrasts with the reality of its business application.

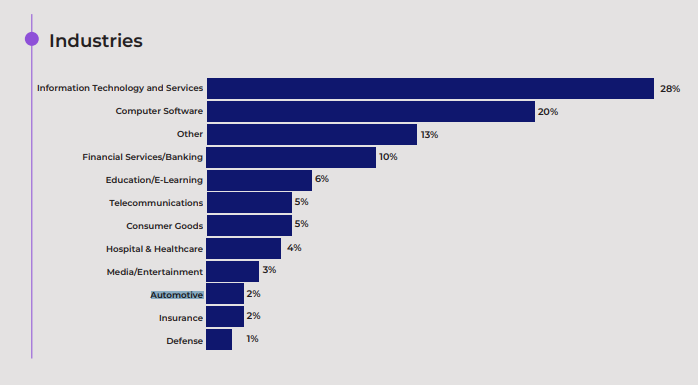

Financial services, banking, insurance, and defense are pioneers in the use of AI, preferring to use chatbots and translation solutions. The education, automotive, and telecommunications sectors, on the other hand, have some catching up to do: their AI initiatives are still in their infancy.

Compared to 2022, the use of chatbots has increased by 26% and the use of translation and text generation has increased by 12%. This is likely a direct result of the widespread use of large language models in 2023.

According to 46% of respondents, IT infrastructure is the biggest obstacle to deploying large language models. The computationally intensive models put a strain on existing resources.

84% of respondents said they lack the technical skills to manage the complexity of language models. 58% have low levels of AI integration, running five or fewer models. According to the study, little has changed since 2022.

62% still find it difficult to adopt AI. The larger the organization, the more complex the implementation. Implementing new AI technologies, scaling usage, and improving existing offerings are the top priorities for 2024.

According to the study, enterprise adoption of AI is still low, despite the emergence of technologies such as ChatGPT. The report also highlights that many companies are still in the early stages of integrating AI into their processes. It cites several factors, including skills, regulation, reliability, and infrastructure, as barriers to development.

The study quotes Intel's Tony Mongkolsmai as pointing to the lack of technical skills needed to handle the complexity of AI and large language models.

The study, which took place over three years, suggests that tasks need to be simplified to make it easier for developers to work with AI, a view Mongkolsmai echoes. He emphasizes that this is the only way for the industry to realize the full potential of technologies such as large language models in the near future.
