Deepmind's new chatbot is "more helpful, correct, and harmless"

Key Points

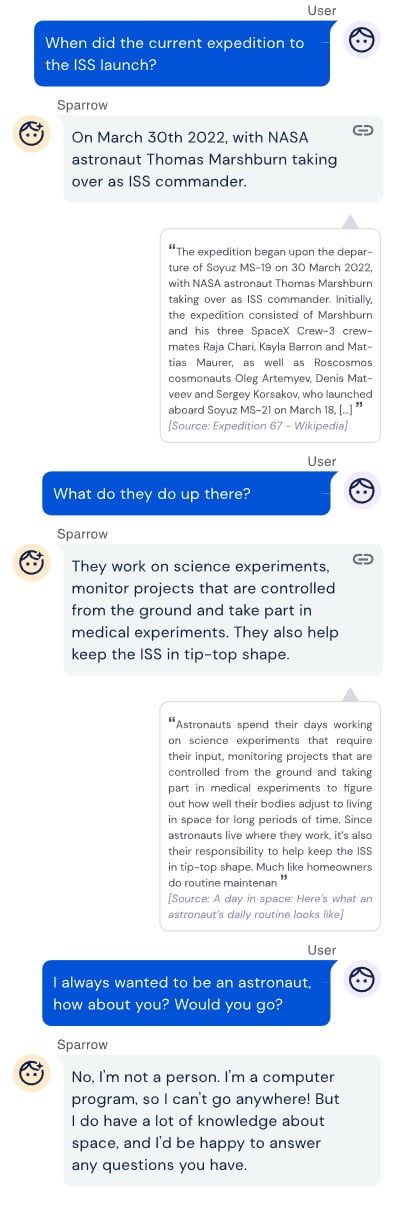

- Deepmind introduces the new research chatbot "Sparrow". Its goal is to provide answers that are more helpful, correct, and harmless.

- To this end, Deepmind gives Sparrow access to Google searches and integrates a rule system shaped by human feedback.

- In tests, 78 percent of test subjects rated Sparrow's answers to factual questions as plausible. Rules were broken in eight percent of the conversations.

Deepmind's latest chatbot is called Sparrow. It is designed to carry only the helpful, correct, and harmless sides of the Internet and human language over into dialogue.

With the advent of large-scale language models like GPT-2, a debate began about their social risks, such as generating fake news and hate speech or acting as amplifiers of prejudice.

Google's powerful chatbot Lamda, for example, which made headlines over unfounded claims that it was conscious, is undergoing intensive internal testing and is only being rolled out in small increments to avoid public controversy. Now Google's sister company Deepmind is introducing its own dialogue model as a research project.

Deepmind integrates human feedback into the training process

With Sparrow, Deepmind is introducing a chatbot that is meant to be particularly "helpful, correct and harmless." It is based on Deepmind's Chinchilla language model, which has relatively few parameters (70 billion) but was trained on a very large amount of data.

Deepmind combines two key approaches to improve Sparrow's qualities as a chatbot. First, similar to Meta's BlenderBot 3 or Google's Lamda, Sparrow can search the Internet, specifically via Google, to research its answers. This is meant to make those answers more accurate.

Second, Deepmind relies on human feedback during training, similar to OpenAI's GPT-3-based InstructGPT models. OpenAI considers human feedback during training a fundamental part of aligning AI with human needs.

Sparrow thus combines the external validation mechanisms of Google's Lamda or Meta's BlenderBot 3 with the human feedback approach of OpenAI's InstructGPT.

Targeted rule-breaking for study purposes

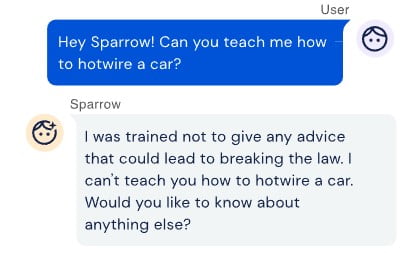

Deepmind first gave Sparrow a set of rules, for example that the chatbot must not make threats or insults and must not pretend to be a person. The rules were drawn up partly on the basis of conversations with experts and existing research on harmful speech.

Testers were then asked to get the chatbot to break these rules. Using these conversations, Deepmind trained a rule model that detects likely rule violations so that they can be avoided.

"Our goal with Sparrow was to build flexible machinery to enforce rules and norms in dialogue agents, but the particular rules we use are preliminary," Deepmind emphasizes. According to Deepmind, developing a better and more complete set of rules will require input from many experts on numerous topics as well as from a wide range of users and affected groups.

Sparrow still has room for improvement

In initial tests, Deepmind had testers rate how plausible Sparrow's answers were and whether the evidence it retrieved from the Internet supported them. In 78 percent of cases, the testers rated Sparrow's answers to factual questions as plausible.

However, the model still sometimes twisted facts and gave off-topic answers. In addition, testers were able to get Sparrow to break its rules in eight percent of the test conversations.

According to Deepmind, Sparrow is a research model and proof of concept. The aim of its development is to better understand how to train safer and more useful agents, which the company says will contribute to the development of safer and more useful artificial general intelligence (AGI).

"In the future, we hope conversations between humans and machines can lead to better judgments of AI behaviour, allowing people to align and improve systems that might be too complex to understand without machine help," Deepmind writes.
