Deeper insights into AI language models - chain of thought prompting as a success factor

Large language models like OpenAI's GPT-3 are supposed to provide better answers with what is called Chain of Thought (CoT) prompting. What is CoT Prompting and how can it help?

Authors: Moritz Larsen, Prof. Dr. Doris Weßels

The use of generative AI language models, such as GPT-3 from OpenAI, can lead to surprising results. This is due to the lack of verifiability and explainability of such "black box" systems, which is an inherent feature of deep learning systems. The continuously growing number of parameters correlates positively with the performance of "Large Language Models (LLM)".

They solve tasks better and better, but the solution path usually remains intransparent. Now a breakthrough can be achieved with the so-called "Chain of Thought" prompting, abbreviated CoT prompting. With this method, the model is asked to explain the solution step by step.

Prompts describe the requirement for the selected model in natural language. In a so-called "zero-shot prompt", an instruction or question such as "Explain the term Chain of Thought-Prompting" is sufficient to arrive at a result. The more precise the wording, the more likely the results will match expectations.

In addition, we can use examples to explain to the model in more detail what we expect the result to be.

One example (one-shot prompt) can already be sufficient, several examples (few-shot prompt) instruct the model more precisely. Even small changes in the prompt can lead to entirely different results. The goal of "prompt design" or "prompt engineering" is to find the optimal prompt so that the language model best solves the task at hand (Liu et al. 2021). Small additions to the prompt can lead to a better quality result in this regard.

Adding the phrase "Let's consider step by step …" or some form of this phrase that points out that a step-by-step approach is required has a significant effect. It encourages the language model to represent a step-by-step approach to solving a task, and in this way makes its supposed chain of thought visible to users.

In their experiments with different language models, Google researchers were able to prove that the quality of the results is improved by CoT prompting above a certain model size (Wei/Zhou 2022). This is another major advantage besides the gain in transparency.

How "chain of thought" prompting affects the output of language models

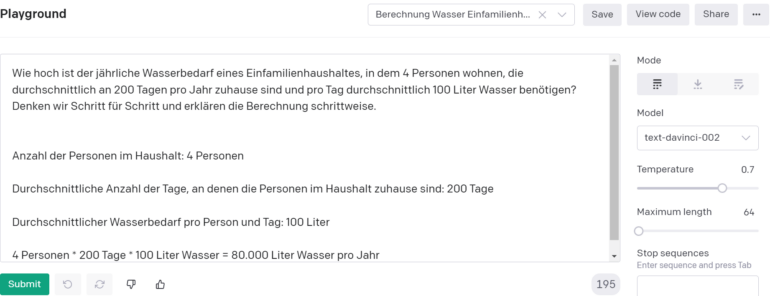

The following examples, which are inevitably unique and non-reproducible results due to the randomized production of results, were produced in Playground using the Davinci-2 model from OpenAI's GPT-3 model family and should be evaluated as "cherry-picked" outputs.

Example 1: Impact of CoT prompting on arithmetic tasks

CoT prompting will be explained using an arithmetic task. In Table 1, the left side shows the input text without CoT prompt. The right side shows the same input text and additionally the CoT prompt that is particularly relevant here: "Let's think step by step and explain the calculation step by step".

In the lower part, the result generated by the AI is displayed in each case. On the left side it is a detailed answer that repeats the information from the task and gives the result. On the right side you can see a representation of the four individual steps explaining the result.

| Arithmetic task without CoT prompt: | Arithmetic problem with CoT prompt: |

| What is the annual water demand of a single-family household containing four people who are home an average of 200 days per year and use an average of 100 liters of water per day? | What is the annual water demand of a single-family household containing four people who are at home an average of 200 days per year and use an average of 100 liters of water per day?

Let's think step by step and explain the calculation step by step. |

| Result without CoT prompt: | Result with CoT prompt: |

| The annual water demand of a single-family household, in which four people live, who are at home on average 200 days per year and need on average 100 liters of water per day, is 80,000 liters. | Number of people in the household: 4 people

Average number of days the people in the household are at home: 200 days Average water consumption per person per day: 100 liters 4 persons * 200 days * 100 liters of water = 80,000 liters of water per year |

Table 1: Comparison of the arithmetic task without CoT prompt (left) and with CoT prompt (right).

This example shows that already first steps towards explainability can be taken by an intelligent prompt design. However, explainability only refers to the output and the formal solution steps, not to the inner structure of the underlying artificial neural network (KNN) as a result of Deep Learning, which is still considered a "black box". The (supposed) chain of thoughts of an AI-generated by CoT prompting must not be misunderstood as an actual insight into the logic of the AI model.

Example 2: Does CoT prompting improve the explanation of a citation?

This further example is about the explanation of a quote. As a quote, we chose the following passage from Hannes Bajohr's text "Artificial Intelligence and Digital Literature. Theory and Practice of Connectionist Writing" regarding authorship in the context of AI-generated texts:

"The rupture between paradigms also touches on the authorship question, the human side of which is undergoing an increasing distancing in the human-machine structure. While in the sequential paradigm one could still plausibly speak of secondary authorship, which consists in the formulation of a sequence of rules, the execution of which produces the work - here the idea of a "writer of writers" makes perfect sense - with KNNs one is faced with tertiary authorship: Only the data set for the training remains to be defined, from which the KNN independently forms the model, and the parameters remain to be determined, by means of which the model finally produces the output. With large language AIs like GPT-3, even this is no longer possible, because the training is too complex to adapt it to new data sets. The 'programming' is done by the normal language formulation of prompts ('prompt design') following the model of dialogic communication - here one could even speak of quaternary authorship." (Bajohr 2022)

The prompt on the left consists of two parts: The first part is the above-mentioned quotation (shown in the table with the placeholder <<QUOTE>> for space reasons). The second part is the task or question "What does this quote from Hannes Bajohr describe?".

The prompt on the right side contains the same elements and additionally the crucial phrase "Let's think step by step to reach a conclusion." which is meant to stimulate the presentation of the step-by-step procedure.

| Task without CoT prompt: | Task with CoT prompt: |

| <<CITATION>>

What does this quote by Hannes Bajohr describe? |

<<QUOTE>>

What does this quote by Hannes Bajohr describe? Let's think step by step to reach a conclusion. |

| Result without CoT prompt: | Result with CoT prompt: |

| This quote from Hannes Bajohr describes the break between the sequential and KNN paradigms. In the sequential paradigm, it is possible to speak of secondary authorship, which consists in the formulation of a sequence of rules whose execution produces the work. In KNNs, however, it is only possible to specify the parameters by means of which the model produces the output. In the case of large language AIs such as GPT-3, even this is no longer possible. | First, it is assumed that in the sequential paradigm there is a secondary authorship [sic!], consisting of a sequence of rules whose execution produces the work. This idea of a 'writer of writer' makes sense in this context.

The next step is to point out that in KNNs (artificial neural networks) there is a tertiary authorship. This means that the data set for training and the parameters by means of which the model finally produces the output are created by the KNN itself. In the last step, it is mentioned that for large language AIs like GPT-3 there is even a quaternary authorship. This means that the training is so elaborate that it is no longer possible to adapt it to ever new data sets. The 'programming' here is done by the normal language formulation of prompts ('prompt design') following the model of dialogic communication. This quote thus describes the fact that authorship of works has changed from secondary to tertiary and quaternary authorship as artificial intelligence has evolved. |

Table 2: Explanation of a quote without CoT prompt (left) and with CoT prompt (right).

If you compare the results, you will notice at first glance that the output with CoT prompt is much more extensive. A bulleted structure can be seen. The line breaks after each paragraph, which were generated by the language model in addition to the text, also contribute to this structuring. The structuring thus gives the impression that the individual aspects of the quote are taken up and a conclusion is drawn from them in the last step.

Here, the step-by-step procedure becomes clear. The core statement of the quotation is recognized and divided into three sections, and in each case it is mentioned which form of authorship is associated with it. In the second section, however, there is an error in the content. It is said that the data set and the parameters are created independently by the KNN. According to Bajohr, however, this is precisely not the case, but it is the elements that are determined by humans.

However, we cannot understand how this content error arises. The chain of thoughts, or more precisely the step-by-step processing to reach a conclusion, remains an output of the language model whose origin cannot be traced due to the black box problem. Finally, the last section correctly summarizes the main message.

The output without CoT prompt, on the other hand, is a single block of text. It becomes clear that no step-by-step procedure was suggested by the prompt. Although a three-part division of the quote is also recognizable here, it is not made explicit by appropriate wording and formatting.

In terms of content, the first sentence aptly summarizes the core statement. However, only the form of secondary authorship is mentioned in the further course, the other forms are not mentioned. Content errors are not found in this output. From this point of view, it can be seen as a partially extracted and reduced form of the quotation.

How these results are to be evaluated depends, among other things, on our subjective expectation. But does the CoT prompt also objectively lead to a better result?

The output without the CoT prompt does not contain a content error and aptly summarizes the content of the quote in the first sentence, but does not go into depth. The task ("What does this quote describe?") is therefore fulfilled. The output of the CoT prompt, on the other hand, contains a content error but arrives at a correct conclusion and satisfies all forms of authorship.

The question of the right prompt and the danger of anthropomorphization

Both examples can only provide a small glimpse of the opportunities and challenges that targeted prompting presents. Writing templates or having them written automatically is a challenging task that requires time and experience.

The use of AI in language models will become increasingly relevant in education in the future. However, to deal successfully with AI, it is crucial to know which type of prompting will produce the desired result.

With the increasing use of artificial intelligence (AI), the question arises whether and to what extent these new technologies also bring new risks. One of these risks is the anthropomorphization of an AI, where an AI language model is assumed to have human capabilities and ways of thinking (Larsen/Weßels 2022). At Google, this even led to the dismissal of an employee who attributed consciousness to a speech AI (Bastian 2022).

A likely risk associated with humanization is the reduction or abandonment of a critical distance. Among other things, this could then lead us to overly trust an AI system and reveal information that we would otherwise keep to ourselves (Weidinger et al. 2022).

The current trend of an exponentially growing number of parameters in AI language models (Megatron-Turing NLG with 530 billion parameters and PaLM with 540 billion parameters) even spurs the dream of a strong AI. DALL-E 2, also from OpenAI, enables the path from text to image at the push of a button. Here, too, the instructions are given in natural language (zero-shot prompt).

Even more far-reaching multitasking capabilities are provided by Deepmind's AI agent Gato, which is also said to be able to perform movements. Kersting speaks of a "turning point" in this context, as size and capabilities correlate in neural language models (Kersting 2022).

He emphasizes the need to build Germany's own AI ecosystem to reduce dependence on American or even Asian AI language models, which by their nature also bring their culture and values with their training data and reflect them in text generation.

About the authors

Moritz Larsen: Master's student in Pedagogy, Language, and Variation at Kiel Christian Albrechts University; project collaborator in the AI project "Students' Academic Writing in the Age of AI" at the Research and Development Center of Kiel University of Applied Sciences.

Doris Weßels: Professor of Information Systems with a focus on Project Management and Natural Language Processing at Kiel University of Applied Sciences; project leader of the AI project "The Academic Writing of Students in the Age of AI" and head of the Virtual Competence Center for "Teaching and Learning Writing with Artificial Intelligence - Tools and Techniques for Education and Science".

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.