Microsoft study shows AI copilot development can be overwhelming

Key Points

- Microsoft Research has identified issues software developers face when building AI copilots such as Office Copilot, GitHub Copilot, and Adobe Firefly. Interviews with 26 developers revealed challenges at every stage of the development process.

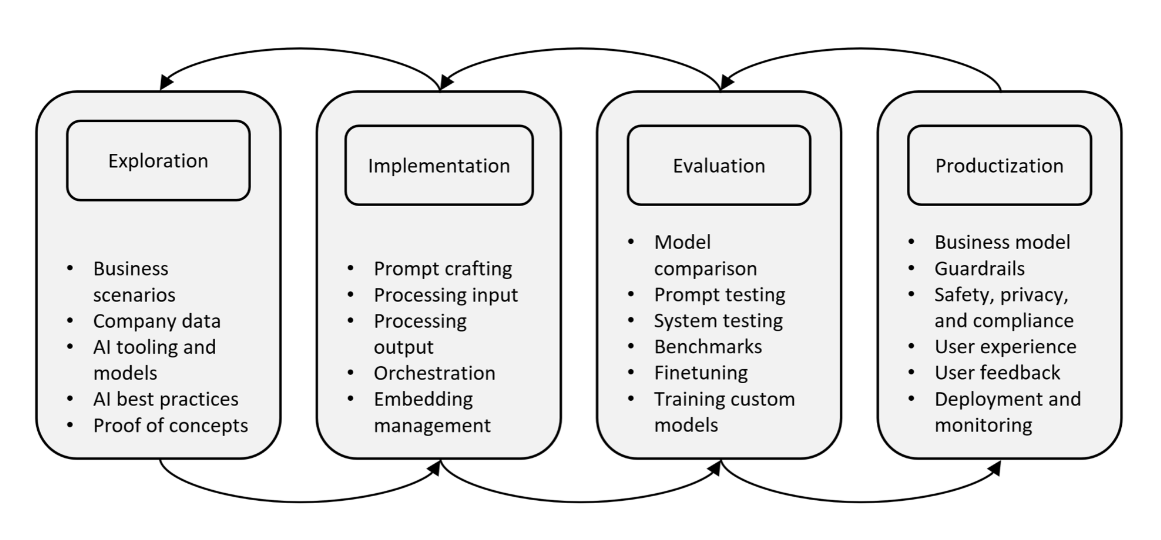

- The development of a copilot follows a rough process of exploration, implementation, evaluation, and productization. However, due to the non-deterministic nature of Large Language Models (LLMs), the process is unclear and iterative.

- The study categorizes the problems into six areas: time-consuming prompt engineering, orchestration of multiple data sources, lengthy testing, lack of best practices, security and compliance challenges, and inadequate developer experience. Researchers see potential for new tools and processes.

A study by researchers at Microsoft Research has identified problems developers face when building AI copilots.

As more companies deploy AI copilots powered by large language models (LLMs) to assist users with tasks in applications such as Word, Excel, programming, and image and video creation, software developers are entering uncharted territory in integrating these AI technologies.

Microsoft researchers interviewed 26 professional software developers responsible for copilot development at a variety of companies. Their key finding is that development processes and tools have not kept pace with the challenges and scope of AI application development.

The development process for a copilot follows a rough sequence of exploration, implementation, evaluation, and productization. But because of the unpredictable nature of AI, the process is "messy and iterative," the researchers say.

Developers must identify relevant use cases, assess feasibility with different technologies, and ultimately deliver a product to real users. Each of these steps presents its own set of challenges. The study categorized the pain points into six areas:

1. Prompt engineering is time-consuming and requires extensive trial and error to balance context and token count. It is "more art than science" and models are "very fragile" with "a million ways you can effect it."

2. Orchestrating multiple data sources and prompts to understand user intent and control workflows is complex and error-prone.

3. Testing is crucial but tedious because of LLM unpredictability. Developers run the same tests repeatedly and look for matching outputs, or they create benchmarks, which is expensive.

4. There are no best practices for working with LLMs. Developers rely on Twitter and papers in a rapidly evolving field that demands constant rethinking.

5. Security, privacy, and compliance require guardrails, but telemetry data collection is restricted for privacy. Security reviews become laborious.

6. The developer experience suffers from inadequate tools and integration issues. Developers must continuously learn new tools instead of focusing on customer problems.
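
To make the testing problem in point 3 concrete, here is a minimal Python sketch of running the same prompt several times and checking how often the outputs match. It is an illustration only, not the method used by the developers in the study: `agreement_rate`, `make_fake_model`, and the example prompt are hypothetical names, and the fake model is a seeded random stand-in for a real LLM call.

```python
import random
from collections import Counter
from typing import Callable, Tuple


def agreement_rate(
    generate: Callable[[str], str], prompt: str, runs: int = 10
) -> Tuple[str, float]:
    """Call `generate` `runs` times; return the most common output and its share."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Normalize whitespace and case so trivially different outputs count as a match.
    outputs = [generate(prompt).strip().lower() for _ in range(runs)]
    answer, count = Counter(outputs).most_common(1)[0]
    return answer, count / runs


def make_fake_model(seed: int = 0) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM call: answers vary from run to run."""
    rng = random.Random(seed)

    def generate(prompt: str) -> str:
        # Mostly one answer, sometimes a formatting variant, occasionally a different one.
        return rng.choices(["Paris", "paris ", "Lyon"], weights=[6, 3, 1])[0]

    return generate


if __name__ == "__main__":
    answer, rate = agreement_rate(make_fake_model(), "Capital of France?", runs=20)
    print(f"most common answer: {answer!r}, agreement: {rate:.0%}")
```

A test built this way passes only if the agreement rate clears a chosen threshold, which shows why such tests are slow and costly: every assertion needs many model calls instead of one.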

Focus group sessions identified potential improvements in future tools and processes, such as better support for writing, validating, and debugging prompts; more user transparency and control; automated measurement procedures; rapid prototyping options; and easy integration into existing code.

The study highlights the significant disruption caused by generative AI in software products, bringing both opportunities and uncertainties. Software development may need to be rethought due to rapidly evolving models.

"The proliferation of product copilots, driven by advancements in LLMs, has strained existing software engineering processes and tools, leaving software engineers improvising new development practices," the researchers conclude.
