OpenAI introduces personal data removal form as ChatGPT faces criticism over false information

Key Points

- The Austrian data protection organization Noyb has filed a complaint against OpenAI, alleging that ChatGPT violates the GDPR by generating false information about individuals and refusing requests to correct or delete this data.

- Noyb is asking the Austrian data protection authority to investigate OpenAI's data processing practices, order the company to comply with the complainant's request for access, and impose a fine to ensure future compliance with the GDPR.

- OpenAI may have introduced a personal data removal form in response to the current criticism, but the company has run afoul of data protection authorities in several countries before. The technical challenges of correcting language models remain.

ChatGPT often generates false information about certain people. A new data removal form aims to minimize the damage, but ultimately won't solve the problem.

OpenAI has faced criticism because its AI chatbot, like other large language models, tends to fabricate false information about individuals. The Austrian nonprofit noyb (None of Your Business) says this violates the European Union's General Data Protection Regulation (GDPR).

Noyb has filed a complaint against OpenAI, accusing the company of failing to address these issues.

> Making up false information is quite problematic in itself. But when it comes to false information about individuals, there can be serious consequences. It’s clear that companies are currently unable to make chatbots like ChatGPT comply with EU law, when processing data about individuals. If a system cannot produce accurate and transparent results, it cannot be used to generate data about individuals. The technology has to follow the legal requirements, not the other way around.
>
> Maartje de Graaf, data protection lawyer at noyb

Noyb's complaint involves an anonymous public figure who requested that inaccurate personal information generated by ChatGPT be corrected or deleted. However, OpenAI denied the request.

The company claimed that the data could not be corrected, and that blocking it would mean filtering out all information about the individual. While it is possible to block requests that include the person's name, this is not a viable long-term solution because it is too cumbersome.

In addition, OpenAI did not adequately respond to the complainant's request for access to his personal data, a right the GDPR grants to every individual whose data is processed.

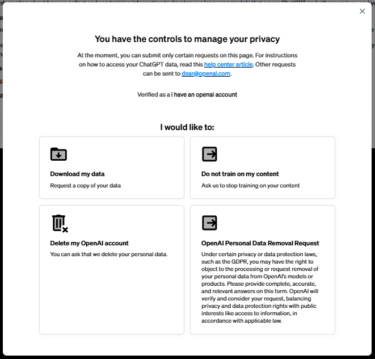

Perhaps in response to growing concerns, OpenAI recently added a form to its website for requesting the removal of personal information from ChatGPT.

Individuals can opt out of processing or request that their personal data be removed from OpenAI's models or products, ostensibly in compliance with privacy laws such as the GDPR. However, OpenAI notes:

> OpenAI will verify and consider your request, balancing privacy and data protection rights with public interests like access to information, in accordance with applicable law. Submitting a request does not guarantee that information about you will be removed from ChatGPT outputs, and incomplete forms may not be processed.
>
> OpenAI Personal Data Removal Request

In the past, OpenAI has repeatedly run afoul of data protection authorities, sometimes with serious consequences. Italy banned the chatbot for a few weeks, and data protection authorities in France, Ireland, and Germany are monitoring the US company.

OpenAI's refusal to delete or correct the personal data is disappointing, but unfortunately it is technically understandable. Once a language model is trained, changing what it has learned can only be done with significant additional effort. Blocking certain prompts that contain names is possible, but it is not a scalable approach for the future.

One improvement could be "ChatGPT Search," a rumored feature that would take search results into account when answering, and would do so more effectively than the browsing function already built into ChatGPT.

However, the inherent problem of LLMs inventing information in the absence of knowledge is far from being solved.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
