Gemini 1.5 Pro is now the most capable LLM on the market, according to Google's benchmarks

According to Google, Gemini 1.5 Pro is now the most capable LLM on the market, at least on paper.

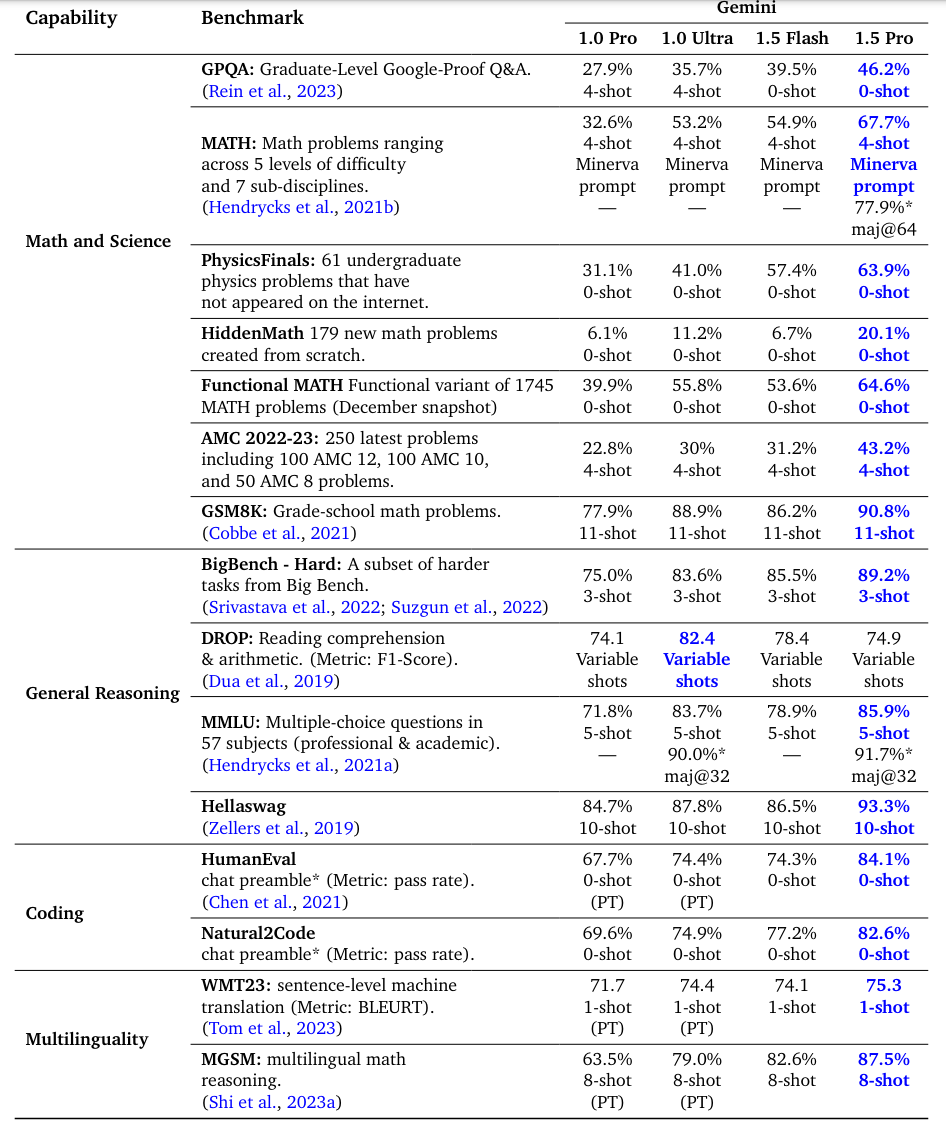

Google DeepMind has been improving the Gemini models over the past four months, and senior researchers Jeff Dean and Oriol Vinyals claim that the new Gemini 1.5 Pro and Gemini 1.5 Flash outperform their predecessors and OpenAI's GPT-4 Turbo in most text and vision tests.

Specifically, Gemini 1.5 Pro outperforms its predecessor, Gemini 1.0 Ultra, in 16 out of 19 text benchmarks and 18 out of 21 vision benchmarks.

On the MMLU general language understanding benchmark, Gemini 1.5 Pro scored 85.9% in the standard 5-shot setup and 91.7% in the majority-vote setup, outperforming GPT-4 Turbo in both. Keep in mind, however, that benchmark results and real-world experience can differ considerably.
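The "majority vote" figure comes from sampling several answers to each question and keeping the most frequent one. The following is a minimal sketch of that aggregation step only. It assumes the answers have already been extracted as option letters, and the helper names and sample data are hypothetical, not Google's evaluation code:

```python
from collections import Counter


def majority_vote(answers: list[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    # Counter.most_common orders equal counts by first occurrence (Python 3.7+).
    return Counter(answers).most_common(1)[0][0]


def accuracy(predictions: list[str], gold: list[str]) -> float:
    """Fraction of questions where the prediction matches the reference answer."""
    correct = sum(p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


# Hypothetical sampled answers for three multiple-choice questions.
samples = [
    ["B", "B", "C", "B", "A"],
    ["D", "A", "A", "D", "A"],
    ["C", "C", "C", "B", "C"],
]
gold = ["B", "D", "C"]

voted = [majority_vote(s) for s in samples]
print(voted)                  # ['B', 'A', 'C']
print(accuracy(voted, gold))  # 0.6666666666666666
```

Voting smooths out occasional sampling errors, which is one reason majority-vote scores tend to sit above single-answer 5-shot scores. The second question shows it can also lock in a wrong answer when most samples agree on it.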

Gemini 1.5 Flash is designed to be very fast with minimal loss in quality. As a leaner, more efficient version of the model, it aims to deliver performance similar to 1.5 Pro with a context window of up to two million tokens.

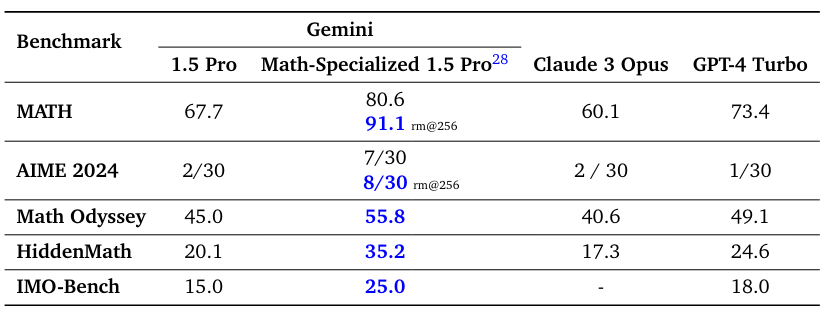

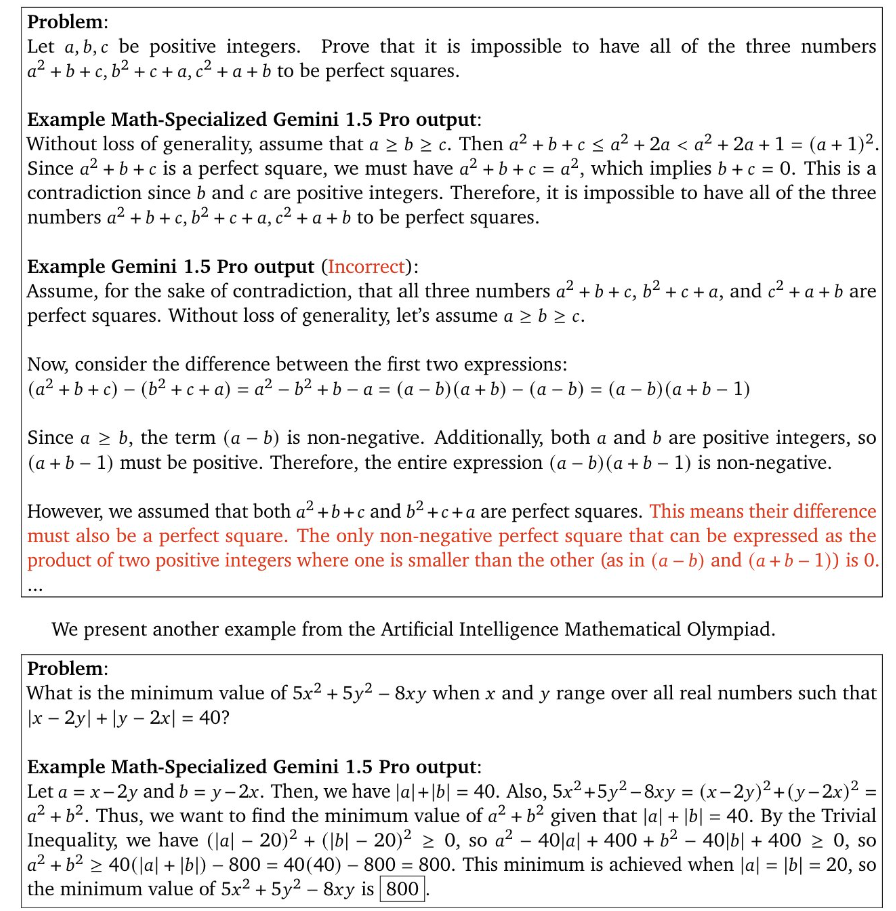

According to Google's Jeff Dean and Oriol Vinyals, Gemini 1.5 Pro has made particular progress in math, coding, and multimodal tasks. Google also benchmarked a version of Gemini 1.5 optimized for math, which clearly outperformed the standard 1.5 Pro, Claude 3 Opus, and GPT-4 Turbo on math benchmarks.

The key feature of Gemini 1.5 Pro is its huge context window of up to 10 million tokens. This allows the model to process long documents, hours of video, and days of audio. Google claims that Gemini 1.5 Pro can learn a new programming language from a manual, or a rare natural language such as Kalamang from 500 pages of grammar material and a few example sentences, and then translate it at a level comparable to a human learning from the same material.

When asked to retrieve specific information from a context of 10 million tokens, Gemini 1.5 Pro achieves 99.2% accuracy. However, this so-called "needle in a haystack" test says little on its own, because it amounts to a resource-intensive word search. A simple Ctrl+F does the same job far more efficiently.
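To make the Ctrl+F point concrete: if the "needle" is a verbatim sentence, ordinary substring search finds it with a single linear scan, whatever its position. The sketch below builds a synthetic haystack. The filler text, the needle, and the `build_haystack` helper are hypothetical. Real benchmarks ask the model a question about the needle rather than handing it the exact string, so this only illustrates the baseline, not the benchmark itself:

```python
def build_haystack(filler: str, needle: str, n_repeats: int, depth: float) -> str:
    """Repeat filler text and insert the needle at a relative depth (0.0 = start, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    chunks = [filler] * n_repeats
    # Index at which the needle is inserted among the filler chunks.
    position = round(depth * n_repeats)
    chunks.insert(position, needle)
    return " ".join(chunks)


filler = "The grass is green and the sky is blue."
needle = "The secret passphrase is 'violet-otter-42'."

# Roughly four million characters of filler with the needle halfway through.
haystack = build_haystack(filler, needle, n_repeats=100_000, depth=0.5)

# The Ctrl+F equivalent: one linear scan, no model required.
offset = haystack.find(needle)
print(f"needle found at {offset / len(haystack):.2f} of the way through")
```

The `depth` parameter mirrors how such tests move the needle through the context. A retrieval score near 100% at every depth shows the model can locate a string, not that it can reason over everything around it.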

More sophisticated tests would measure the model's ability to draw on the entire context in its answers and check for the "lost in the middle" problem, where information in the middle of a long input is overlooked. As long as the model ignores parts of the context at random when answering questions, the huge context window is of limited use.

Gemini 1.5 Pro and Gemini 1.5 Flash are available now and can be tested for free via the Google AI Studio platform.
