Developer combines Stable Diffusion, Whisper and GPT-3 for a futuristic design assistant

How will we interact with computers in a few years? Probably very differently than we do today. One developer offers a glimpse by linking three AI systems into a digital design assistant.

For his AI-based design assistant, Twitter user Progen links three AI systems: the open-source image AI Stable Diffusion for image generation; OpenAI's Whisper, also open source, for translating spoken words into English; and GPT-3 for the dialog with the assistant.

AI clarifies the task with follow-up questions

The result: Progen can have simple conversations with the assistant and give her instructions for image ideas. She confirms the instructions and either carries them out directly or asks follow-up questions to clarify them.

For the prompt "Let's design a house," the assistant asks whether it should be an exterior or an interior view. She then asks about the building material, the location of the house, and the time of year. Progen's answers are incorporated into the generated image.

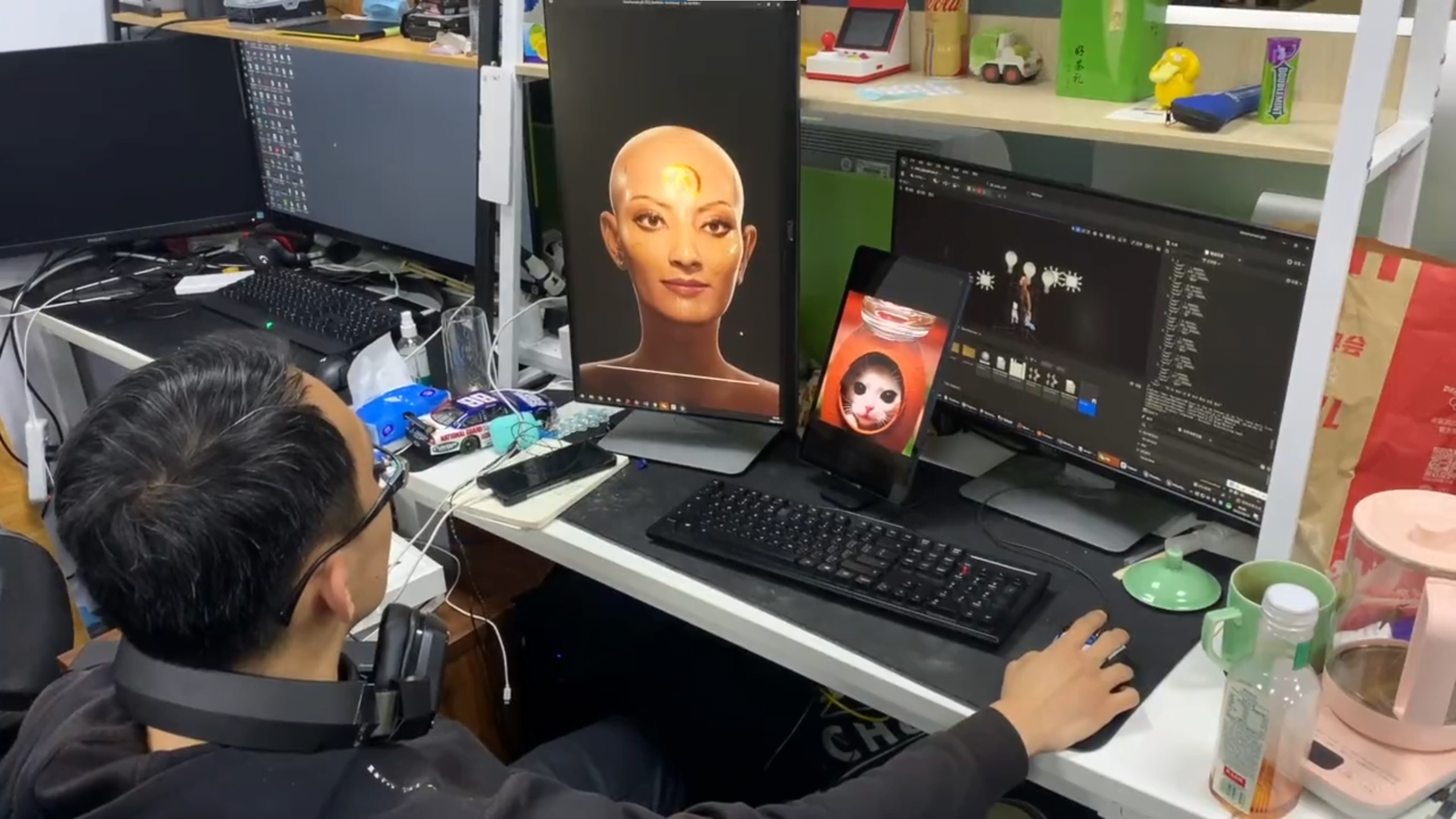

Metahuman Creator with Stable Diffusion, OpenAI Whisper and GPT-3 - the digital design assistant is up and running, translating spoken instructions into image ideas in multiple languages. | Video: Progen via Twitter

For the avatar creation, Progen used the digital human builder "Metahuman Creator" from the Unreal Engine company Epic Games. Epic released the software for nearly photorealistic avatars in April 2021 and sees it as a basis for the development of virtual beings, among other things.

Progen considers his project a proof of concept. It is interesting for two reasons. First, it links three AI systems, two of which are open source. Second, the demonstration points to a new way humans might interact with computers in the future: systems that perform extensive tasks automatically and refine them independently through follow-up questions and human feedback.

This kind of alignment with human needs is a fundamental topic in AI research, though its complexity goes far beyond the example shown here. For now, Progen's demo is of limited use for daily work because its latency is still quite high; optimization and future AI models could probably solve this problem.
