Deepfake nudes: parents clueless, teachers suspicious, students split

A new study by the consulting firm The Human Factor highlights a growing problem: students creating and sharing AI-generated nude images of minors, known as deepfake nudes. The firm surveyed more than 1,000 parents, students, teachers, and technology experts and developed practical solutions.

Recently, several incidents in the US involved students using AI image generators to create and share fake nude images of classmates. In Florida, two teens were charged with sharing deepfakes of 22 classmates and teachers. Similar cases occurred at a high school in New Jersey and in Spain.

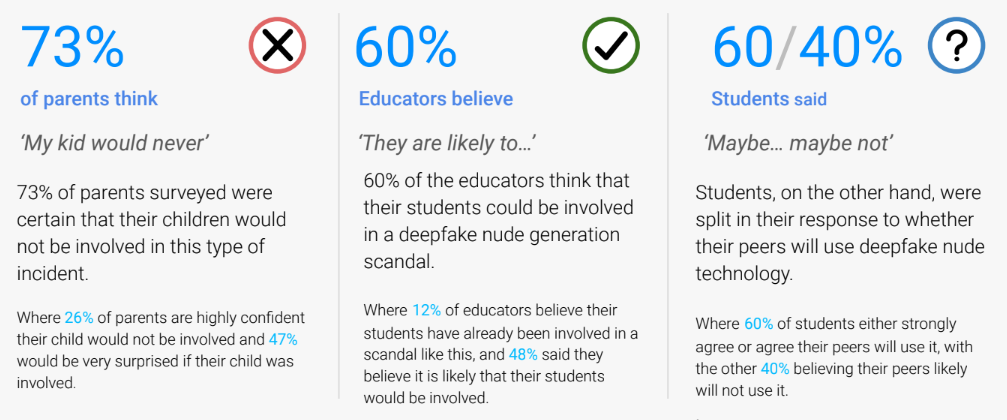

The study shows that most parents are unaware of the risk: 73% believe their children would never be involved in a deepfake nude scandal. By contrast, 60% of teachers believe their students could be involved.

Students were split: 60% thought it likely that their classmates would misuse the technology, while 40% thought it unlikely.

Existing laws often fall short

Laws exist on related topics such as child pornography, revenge porn, and cyberbullying. However, there are no clear nationwide rules on deepfake nudes of minors created by minors, and case law lags behind technological developments.

Tougher penalties could also discourage students from reporting cases, because they fear the consequences for the perpetrators, who are often classmates or friends. Victims, in turn, want to avoid being re-traumatized by legal proceedings.

Tech companies are also reaching their limits. It is difficult to reliably identify content as a deepfake, and the images are shared in private chat groups that platforms cannot directly access. In some cases, the images circulated there for months, the study authors write.

This makes bystanders important, since they are often the first to report deepfakes. But as the study shows, many lack the courage to speak up.

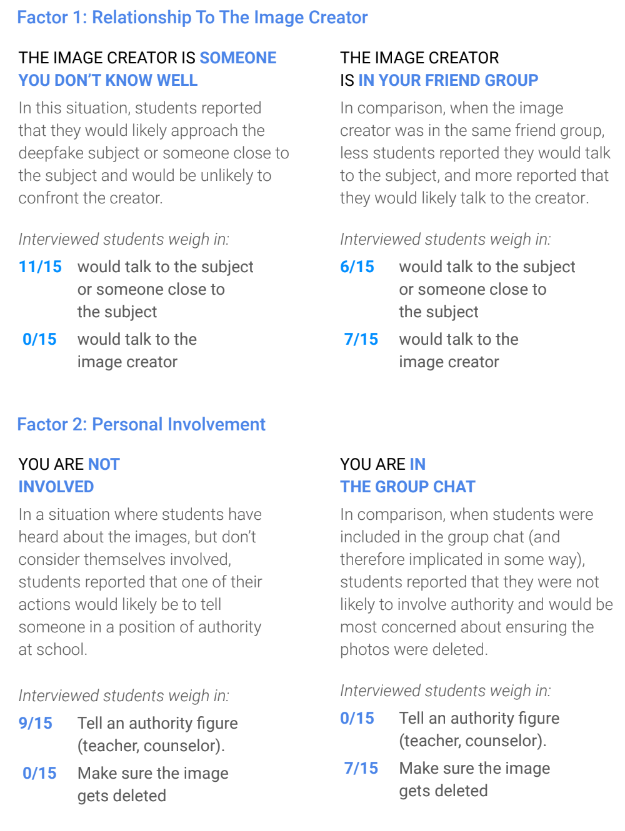

A survey of US students in grades 7 through 12 found that when students had heard about the images but were not involved, 9 out of 15 would inform an authority figure. However, none of them would press for the images to be taken down.

Even worse, none of the students who were part of the group chat would involve an authority figure, and only 7 out of 15 would insist that the photos be deleted. Students largely avoid confronting the creator of the images: only 7 out of 30 would do so. More than half (17 out of 30) would instead turn to friends and people close to them.

The study shows that closeness to the incident and personal involvement are critical factors in whether students report deepfake incidents. The more involved they are, the less likely they are to involve teachers and the stronger their urge to cover the incident up.

Recommendations for schools and parents

The study recommends more education and clear consequences. Schools should openly discuss AI and deepfakes, emphasize the impact on victims, and set up (anonymous) reporting systems. Parents should talk to their children about technology and set rules together.

School rules and codes of conduct should explicitly name deepfakes as an offense and define penalties. This would allow teachers and principals to act quickly and with legal certainty. Anonymous reporting systems could encourage students to report cases.

Empathy for all parties involved is crucial, the experts said. Technology is moving so fast that perfect solutions are impossible. Understanding each other makes it easier to work together to establish new, healthy norms of behavior.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.
