AI-faked images and videos - so-called deepfakes - have developed rapidly in recent years. Here, we trace the history and describe the most important milestones.

What do all the people in the cover image have in common? They don't exist. An AI came up with them. More precisely, it generated them using millions of similar pixel structures as examples.

I created the images on the website thispersondoesnotexist.com. Anyone who can click with a mouse can do it. It also works for cats.

Such realistic fake portraits are made possible by the invention of so-called "Generative Adversarial Networks" (GANs). These networks consist of two AI agents: One forges an image, the other tries to detect the fake. If the detector agent discovers the forgery, the forger AI adapts and improves.

In this way, both agents become more and more efficient in their respective disciplines over the course of the training, and the generated images become more credible.
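The forger-versus-detector loop can be illustrated with a deliberately tiny sketch. It is not the method from any of the papers mentioned here: instead of images, the "forger" produces single numbers, and both agents are one-line linear models. The function names, the starting parameters, the learning rate and the target distribution (numbers around 4.0) are all assumptions chosen for illustration.

```python
import math
import random


def sigmoid(t):
    # Clamp the input so math.exp never overflows.
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


def gan_step(params, real, z, lr):
    """One round of the forger-vs-detector game on a single number."""
    a, b, w, c = params
    fake = a * z + b  # the forger turns random noise z into a fake sample

    # Detector update: rate the real sample higher and the fake one lower.
    # D(x) = sigmoid(w * x + c) is the detector's "this is real" score.
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    w += lr * ((1.0 - d_real) * real - d_fake * fake)
    c += lr * ((1.0 - d_real) - d_fake)

    # Forger update: adjust a and b so the updated detector
    # gives the fake sample a higher score.
    d_fake = sigmoid(w * fake + c)
    grad_fake = (1.0 - d_fake) * w  # derivative of log D(fake) w.r.t. fake
    a += lr * grad_fake * z
    b += lr * grad_fake
    return a, b, w, c


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    # Hypothetical setup: "real" data are numbers scattered around 4.0.
    rng = random.Random(seed)
    params = (1.0, 0.0, 0.1, 0.0)  # forger (a, b) and detector (w, c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)
        z = rng.gauss(0.0, 1.0)
        params = gan_step(params, real, z, lr)
    return params


if __name__ == "__main__":
    a, b, w, c = train_toy_gan()
    print("forger parameters:", a, b)
    print("detector parameters:", w, c)
```

In a single step, the detector first learns to separate real from fake, and the forger then shifts its output in the direction the detector currently considers "more real". Toy games like this can keep oscillating instead of settling, which is one reason real GAN training is considered delicate.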

Not all GANs are created equal

In practice, there is a big difference between the results of the original GAN and those of current GAN variants.

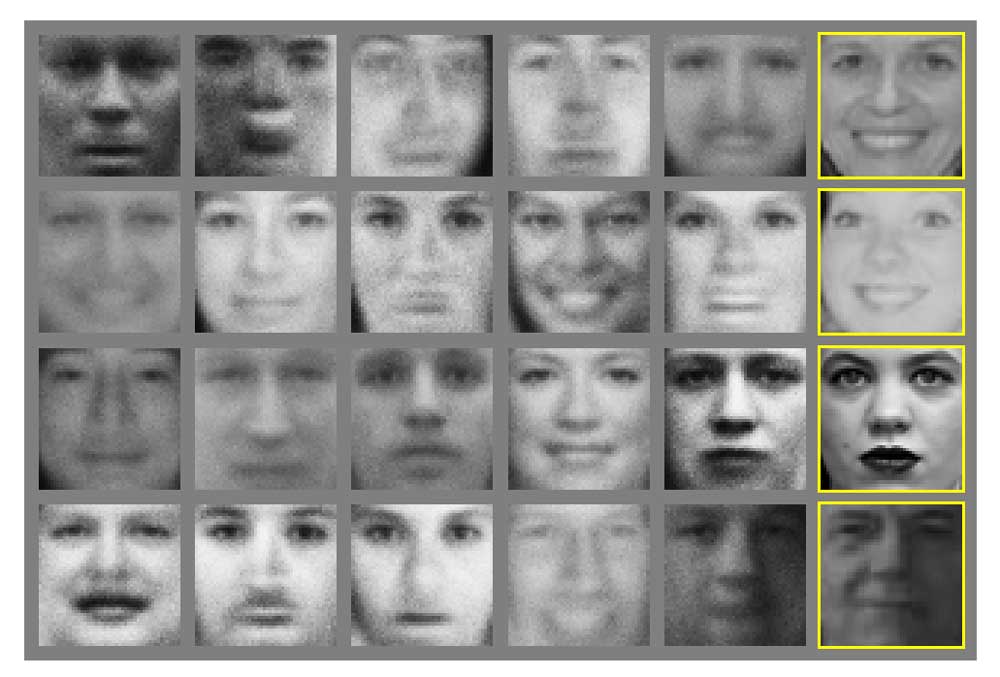

A Twitter post by Ian Goodfellow, recently head of AI at Apple, shows the development over the last few years. Goodfellow is considered the inventor of the first GAN process.

4.5 years of GAN progress on face generation. https://t.co/kiQkuYULMC https://t.co/S4aBsU536b https://t.co/8di6K6BxVC https://t.co/UEFhewds2M https://t.co/s6hKQz9gLz pic.twitter.com/F9Dkcfrq8l

- Ian Goodfellow (@goodfellow_ian) January 15, 2019

A brief GAN history

A look at the academic papers linked by Goodfellow makes clear how new AI architectures combined with larger data sets and faster computers led to the rapid development of so-called deepfakes:

2014: The birth of deepfake technology.

Goodfellow publishes a scientific paper with colleagues that introduces a GAN for the first time. It is the birth of GAN AIs and the technical basis of the deepfakes that we discuss intensively today.

2015: GANs are getting better

Researchers are combining GANs with multilayer convolutional neural networks (CNNs) optimized for image recognition, which can process a lot of data in parallel and run particularly well on graphics cards. They replace simpler networks that previously drove GAN agents. The results are becoming more credible.

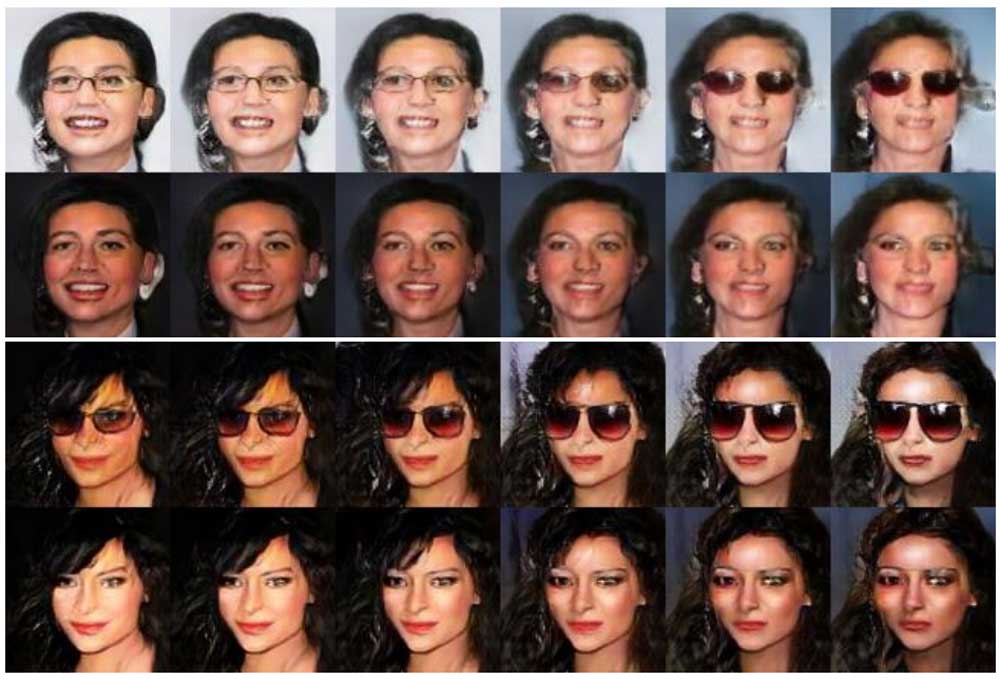

2016: Deepfake glasses & face manipulation.

Researchers combine two GANs: the agents of the different networks share information with each other. In this way, they learn in parallel.

Each agent slightly modifies the learned data. For example, it is possible to generate a person with and without sunglasses. The fake portraits again become more credible but are still clearly recognizable as fakes.

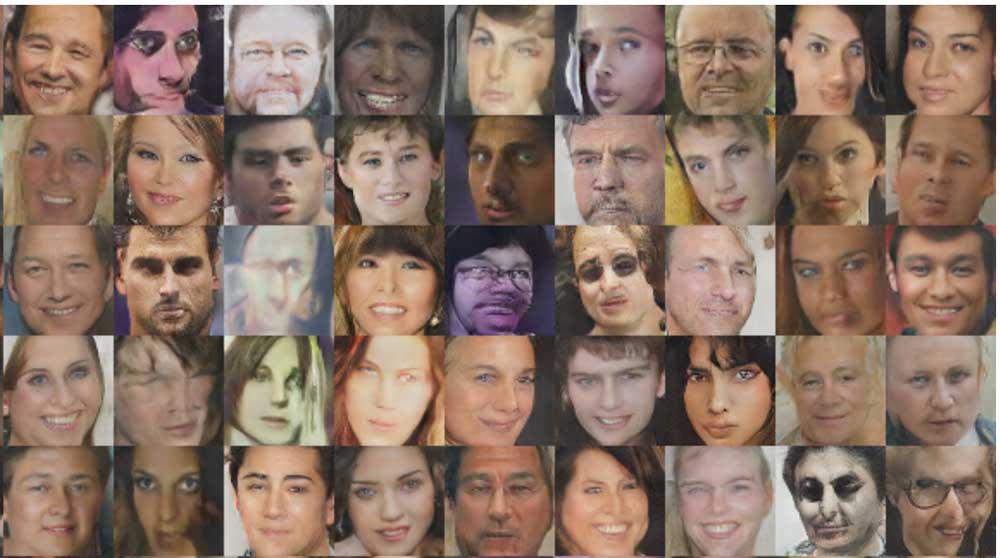

2017: Nvidia's quality leap & the first deepfake videos.

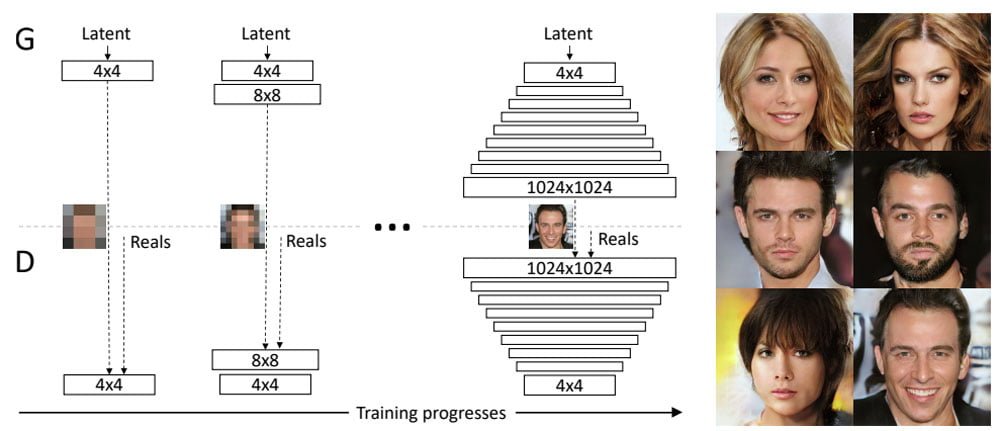

Nvidia researchers achieve a major leap in quality by solving a major problem of previous GANs:

The generator agents often produced low-resolution images because they were harder for the examiner agent to detect as fakes - more pixels means potentially more sources of error. So it makes sense for the forger AI to avoid high resolutions in order to get past the examiner agent.

Nvidia's solution: training the network in stages. First, the forger AI learns to create low-resolution images. Then, the resolution gradually increases.

The GAN, which is growing step by step in this way, produces fake portraits of unprecedented quality: The images still have flaws, but they can definitely fool people who don't look very closely.
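The staged training idea can be sketched in a few lines. The following is an illustrative assumption rather than Nvidia's actual code: the starting size of 4 pixels, the doubling per stage, and the linear blend between the upscaled old output and the new high-resolution output are choices made for this example, and the helper names are hypothetical. Images are plain nested lists of grayscale values.

```python
def resolution_schedule(start=4, final=1024):
    """Resolutions the network is trained at, doubling from stage to stage."""
    schedule = []
    res = start
    while res <= final:
        schedule.append(res)
        res *= 2
    return schedule


def upsample_nearest(image):
    """Double width and height by repeating every pixel (nearest neighbor)."""
    result = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(2)]
        result.append(wide_row)
        result.append(list(wide_row))
    return result


def fade_in(low_res_output, high_res_output, alpha):
    """Blend the upscaled old-stage image with the new stage's image.

    alpha = 0.0 uses only the upscaled low-resolution image,
    alpha = 1.0 uses only the new high-resolution image.
    """
    upscaled = upsample_nearest(low_res_output)
    return [
        [(1.0 - alpha) * old + alpha * new for old, new in zip(old_row, new_row)]
        for old_row, new_row in zip(upscaled, high_res_output)
    ]


if __name__ == "__main__":
    print(resolution_schedule())
    old = [[1.0]]                      # a 1x1 "image" from the previous stage
    new = [[3.0, 3.0], [3.0, 3.0]]     # a 2x2 "image" from the new stage
    print(fade_in(old, new, alpha=0.5))
```

Raising `alpha` slowly from 0 to 1 during a stage would let the new, higher-resolution part of the network take over gradually instead of disrupting what the forger has already learned at the lower resolution.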

While Nvidia is still improving its own GANs, Reddit user "deepfakes" is taking the technology mainstream: In the fall of 2017, the first deepfake porn videos, named after him, appear. In them, the faces of porn actresses are swapped with those of prominent women.

The problem with porn

The term deepfake has since become synonymous with AI-generated images and videos. The "deep" refers to the neural networks built up in many layers (deep learning) that help generate the images.

Deepfake porn is still easily recognizable as fake, but the production effort is so low that thousands of users flock to Reddit and other online platforms within a short time to create explicit videos. The well-known U.S. actress Scarlett Johansson, whose face was particularly frequently misused for AI porn, later referred to the Internet as a "dark wormhole" in this context.

2018: More GAN control and deepfake YouTube channels.

Again Nvidia researchers succeed in controlling their GAN better: they can target individual image features, for example "dark hair" and "smile" in portraits.

In this way, the characteristics of training images can be specifically transferred to AI-generated images. The so-called style transfer will become an important component of many subsequent AI projects.

Of course, the GAN principle doesn't just work for portraits: the AI doesn't care at all what kind of pixel structure it outputs. It only needs corresponding training data. At the end of 2018, Google's AI sister company DeepMind, for example, shows AI-generated food, landscapes, and animals that look impressively believable.

The Deep Video Portrait software improves video manipulation using GANs, and the first YouTube channels specializing in deepfakes are emerging: It is no longer just porn that is being faked, but all kinds of videos, for example of politicians or major Hollywood flicks. For the first time, it is being discussed that AI processes could digitally resurrect actors who have already died.

And deepfake porn is going down the tubes: in the first quarter of 2018, Pornhub, Twitter, Gfycat and Reddit ban fake porn from their platforms. The website of the much-used Deepfake app goes offline.

2019: Deepfake arrives in the mainstream

Samsung researchers introduce a GAN that can deepfake humans and artwork. For example, the researchers put an animated smile on Mona Lisa's face. Samsung's deepfake AI needs only a handful of photos for respectable results.

A few months later, Israeli researchers introduce Face Swapping GAN (FSGAN), an AI that believably swaps faces in live video in real time. The new AI has learned to swap any face directly without prior one-on-one training. However, it does not yet reach the quality of elaborately trained deepfakes.

Away from technical advances, 2019 is the year that deepfakes finally hit the mainstream. Deepfake tools like DeepFaceLab, first released in 2018, are accelerating deepfake production. YouTube channels specializing in deepfakes are reaching millions of viewers, and the number of deepfakes on the web doubles in the first nine months. Deepfakes are developing faster than expected, according to deepfake expert Hao Li, who predicts: "Deepfakes will be perfect in two to three years."

Policymakers step in

The rapid spread of fake videos worries U.S. policymakers in light of the upcoming 2020 U.S. election. Members of the U.S. Congress, the U.S. Intelligence Committee, and AI and legal experts are warning of a deepfake glut and calling for regulations. Twitter is the first social platform to unveil new measures against deepfakes: Twitter wants to flag suspicious tweets and show users warnings.

Politicians outside the U.S. also take a stand. China makes AI fakes a punishable offense. The German government issues a statement on the technology saying "Deepfakes can weaken societal trust in the fundamental authenticity of audio and video recordings and thus the credibility of publicly available information." They could therefore pose a "major risk to society and politics." However, the risk should also not be overstated.

2020: Deepfake regulation & Disney's megapixel deepfakes

Facebook announces at the start of the 2020 US election campaign year that it will ban deepfakes on its own platform - with the exception of satirical or parodic deepfakes. YouTube follows suit with similar guidelines, and Twitter begins enforcing its anti-deepfake rules introduced the previous year. In August, TikTok also bans deepfakes from its own video platform.

Google sister Jigsaw releases "Assembler," an AI-powered tool for journalists to help them detect deepfakes. Qualcomm supports a start-up that irrevocably marks original photos and videos as originals upon creation, thus simplifying deepfake identification later on.

Deepfakes continue to improve

Meanwhile, the development of ever better deepfakes continues: Microsoft presents FaceShifter, an AI that generates credible deepfakes even from blurred original faces. FaceShifter relies on two networks. The first creates the fake face and adopts head pose, facial expression, lighting, color, background, and other attributes of the original photo. The second network, HEAR-Net, compares the photo generated by the first network with the original photo.

If HEAR-Net finds parts of the face obscured by hair, sunglasses, or writing, for example, it corrects possible errors of the first network. Faces disappear again behind hair, lettering can be deciphered, and makeup sits where it belongs.

The Disney deepfakes

Entertainment giant Disney begins developing deepfakes for the big screen, unveiling the first megapixel deepfake: A full 1,024 by 1,024 pixels in size. At the time of the Disney patent's release, alternatives like DeepFaceLab managed just 256 by 256 pixels, and even in early 2021, DeepFaceLab 2.0's maximum resolution is 448 by 448 pixels.

Disney's deepfake technology could, in the long run, replace traditional special effects methods that often require months of work for just a few seconds of footage.

Disney fans are still waiting for a first use of the megapixel deepfake - the recent appearance of a legendary Star Wars character in The Mandalorian still had to do without the technology. A missed opportunity: YouTube deepfakes of the same scene did better than Disney's CGI artists.

2021: Deepfake cruise, live streams & face rentals

The year begins with a particularly believable Tom Cruise deepfake. The deepfaker "Deeptomcruise" uploads videos on TikTok that are only recognizable as fakes if you look closely. The viral videos bring the channel hundreds of thousands of followers and the attention of the real Tom Cruise, who creates a verified channel on TikTok. Behind Deeptomcruise is visual effects specialist Chris Umé, who says he worked on each video for several weeks.

Shortly after the Cruise fakes, the app Wombo AI conquers the web: With just a few clicks, any person's photo can be turned into a short video clip in which they perform well-known songs. Wombo AI's artificial intelligence is based on video recordings of real performers singing one of the songs with matching facial expressions. The Wombo AI, trained with the video data, then transfers these animations to each photo in which it recognizes a face.

This WOMBO AI is crazy lol pic.twitter.com/A7aVT4ISBN

- heyben10 (@HeyBen10_) March 10, 2021

Disney hires a well-known deepfake YouTuber, fueling rumors that there could be more deepfake characters in the future in, say, Star Wars. In fact, the Boba Fett series released at the end of 2021 confirms these speculations.

Deepfakes in social and mass media

Away from Disney, Bruce Willis' face makes it into a Russian commercial, and a start-up buys licensing rights to real faces in order to use them in marketing videos via deepfake technology. Nvidia releases Alias-Free GAN in 2021, an improved version of StyleGAN2 that enables more consistent results for perspective changes. A few months later, an optimized version is released under the name StyleGAN3.

The creator of DeepFaceLab also shows DeepFaceLive for the first time in 2021. The program can exchange faces in live videos after appropriate training or with supplied pre-trained AI models. For the live exchange, it needs a fast graphics card like the ones found in current gaming PCs.

A young man becomes a likeness of Margot Robbie via live deepfake. | Video: GitHub

In 2021, so-called diffusion models also match the image quality of the previously unbeaten GANs for the first time. The technology has not yet been used for deepfakes, but it forms the basis for OpenAI's GLIDE image generation tool, unveiled in late 2021.

2022: 3D GANs, DALL-E 2 and a Zelenskyy deepfake

January brings two impressive GAN improvements. AI researchers at Tel Aviv University demonstrate a variant of StyleGAN2 that can manipulate faces in short video clips, such as adding a smile or making a person look younger, without additional video training.

Video: Tzaban et al

Researchers from Nvidia and Stanford demonstrate the Efficient Geometry-aware 3D Generative Adversarial Networks (EG3D) method. This approach can consistently generate images of a person (or cat) from different viewpoints and a matching 3D reconstruction.

Conversely, the 3D GAN can also generate a 3D reconstruction from a single image of a real person. EG3D can thus generate much more believable fakes, since the generated persons also look consistent from different angles.

In 2022, researchers at the Stanford Internet Observatory find more than 1,000 suspected fake profiles on LinkedIn in a two-week study. More than 70 identified companies listed the fake profiles as employees and used them primarily for initial contact with potential new customers. If the initial contact is successful, the customer is directed to a real person who references the fake profile in the course of the conversation.

A possibly historic deepfake appears in the war of aggression against Ukraine. In the video, a fake version of Ukrainian President Volodymyr Zelenskyy calls on his people to lay down their arms. However, despite the low video resolution, the fake is easy to recognize and has no effect. Whether it is actually a deepfake, i.e. a video faked with AI technology, has not been definitively proven. Many media outlets and numerous experts assume that it is.

In April 2022, OpenAI presents DALL-E 2, an AI system that generates images from text descriptions. The program is expected to be released in the summer of 2022.

DALL-E 2 and the underlying diffusion models are not used for deepfakes by OpenAI. The organization prohibits the generation of human faces. However, the technology will enable even better synthetic images in the future.

The AI fake decade and how to deal with deepfakes

When GAN inventor Goodfellow presented his work in 2014, he probably didn't foresee the rapid development of AI-faked images. Today, at any rate, he warns: In the future, people should no longer believe images and videos on the internet as a matter of course.

Deepfakes that are no longer recognizable as such, even by anti-deepfake algorithms, could finally change the rules of the game - both socially and in entertainment. Deepfake expert Hao Li believes this development is possible, since images are ultimately nothing more than suitably colored pixels - a perfect copy is only a matter of time.

In addition, deepfakes are becoming commonplace due to their rapid spread on YouTube and through apps such as Reface or Impressions. Humanity got by in the past without videos and photos to inform and form opinions, Goodfellow said: "In this case, it's more like AI is closing some of the doors that our generation has been used to having open."