Anthropic launches fine-tuning service and new prompt tuner

Anthropic expands its offerings with two new services: Fine-Tuning for Claude 3 Haiku and a Prompt Tuner in the Developer Console. These tools are designed to help organizations develop and optimize AI applications more efficiently.

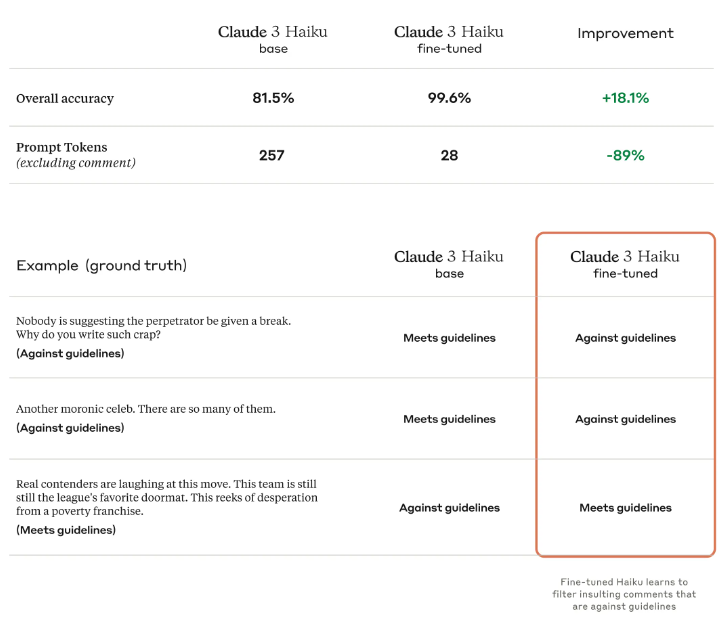

The Fine-Tuning service for Claude 3 Haiku allows companies to train the model with their own expertise and specific requirements. Customers must first create a set of high-quality prompts, each paired with the ideal response they want from Claude.
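As a rough illustration of what preparing such pairs could look like, here is a minimal Python sketch that serializes prompt/response pairs as JSON Lines. The field names `prompt` and `response`, the helper `to_jsonl`, and the sample texts are assumptions made for this example; the article does not describe the file format the Fine-Tuning API actually expects, so check the official documentation before building a real dataset.

```python
import json


def to_jsonl(pairs):
    """Serialize (prompt, response) pairs as one JSON object per line.

    The "prompt"/"response" keys are hypothetical placeholders, not the
    documented schema of the fine-tuning service.
    """
    lines = []
    for prompt, response in pairs:
        prompt, response = prompt.strip(), response.strip()
        if not prompt or not response:
            continue  # skip incomplete pairs instead of training on them
        lines.append(json.dumps({"prompt": prompt, "response": response},
                                ensure_ascii=False))
    return "\n".join(lines)


# Invented sample data; the second pair has an empty prompt and is dropped.
examples = [
    ("How do I check my data balance?",
     "You can check your balance in the app under Usage."),
    ("  ", "Orphan response without a prompt."),
]
dataset = to_jsonl(examples)
```

Filtering out incomplete pairs before serialization is one simple quality check; a real pipeline would likely also review the pairs by hand and enforce the context-length limit.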

They can then use the Fine-Tuning API, currently in preview in the AWS US West (Oregon) region, to create a customized version of Claude 3 Haiku. Initially, the service will support text-based customizations with context lengths up to 32,000 tokens. Anthropic plans to add capabilities for image data in the future.

According to Anthropic, fine-tuning will lead to better results for specialized tasks, faster response times, and lower costs compared to larger models. Companies should also see more consistent and brand-compliant results.

SK Telecom, a telecommunications provider in South Korea, reports a 73% increase in positive feedback on its agents' responses and a 37% improvement in performance indicators for telecom-related tasks after using the fine-tuning service.

To access the Fine-Tuning API, interested parties must contact their AWS account team or submit a support ticket in the AWS Management Console.

New prompt generator and test suite

The new Prompt Tuner in the Anthropic Developer Console aims to simplify prompt creation and optimization. Developers can now use Claude 3.5 Sonnet to generate prompts, automatically create test cases, and compare the output of different prompts.

The Evaluate feature, now available directly in the console, allows users to test prompt quality against real input before deploying to production. New test cases can be added manually, imported from a CSV file, or automatically generated by Claude.
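The console handles CSV import itself, but the underlying idea can be sketched offline in Python: each CSV row becomes one test case whose columns fill the variables of a prompt template. The template text, the `message` column, and both helper functions are hypothetical and only illustrate the concept; they do not reflect the console's actual CSV layout.

```python
import csv
import io

# Hypothetical prompt template with a single variable, {message}.
PROMPT_TEMPLATE = (
    "Summarize the following customer message in one sentence:\n{message}"
)


def load_test_cases(csv_text):
    """Parse CSV text into a list of dicts, one per test case."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def render_prompts(template, cases):
    """Fill the template once per test case using its column values."""
    return [template.format(**case) for case in cases]


# Invented sample input standing in for an uploaded CSV file.
csv_text = (
    "message\n"
    "My internet has been down since Monday.\n"
    "I was charged twice this month.\n"
)
cases = load_test_cases(csv_text)
prompts = render_prompts(PROMPT_TEMPLATE, cases)
```

Each rendered prompt could then be sent to the model and its output reviewed side by side with the others.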

Experts can rate response quality on a 5-point scale to determine if prompt changes have improved output. Both features are designed to make it faster and easier to optimize model performance.
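To make the comparison concrete, the following Python sketch averages 5-point ratings for two prompt versions and reports the difference. The function name and the ratings are invented for illustration; the article does not say how the console aggregates scores.

```python
from statistics import mean


def rating_delta(ratings_old, ratings_new):
    """Return mean(new) - mean(old) for ratings on a 1-5 scale.

    A positive value suggests the revised prompt was rated higher.
    """
    for rating in list(ratings_old) + list(ratings_new):
        if not 1 <= rating <= 5:
            raise ValueError(f"rating out of range: {rating}")
    return mean(ratings_new) - mean(ratings_old)


# Invented expert ratings for the same four test cases.
old_prompt_ratings = [3, 4, 2, 3]
new_prompt_ratings = [4, 4, 3, 5]
delta = rating_delta(old_prompt_ratings, new_prompt_ratings)
```

With only a handful of ratings, a difference in means is a hint rather than proof, so a larger test set gives a more reliable signal.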
