Language models like GPT-4 memorize more than they reason, study finds

A new study reveals that large language models such as GPT-4 perform much worse on counterfactual task variations compared to standard tasks. This suggests that the models often recall memorized solutions instead of truly reasoning.

In an extensive study, researchers from the Massachusetts Institute of Technology (MIT) and Boston University investigated the reasoning capabilities of leading language models, including GPT-4, GPT-3.5, Claude, and PaLM-2.

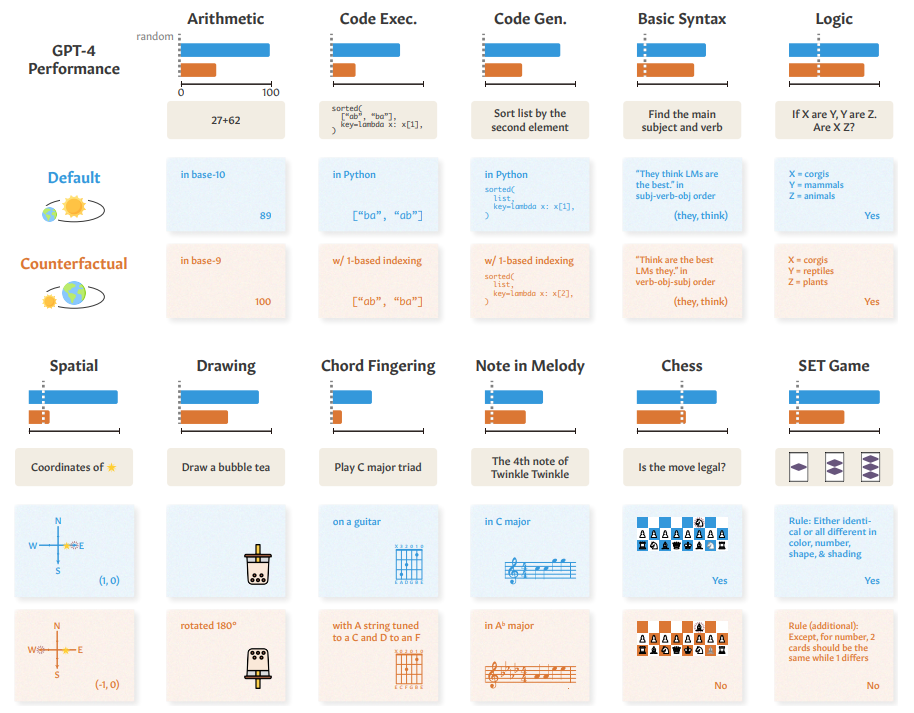

The researchers created eleven counterfactual variations of these tasks, where the basic rules or conditions were slightly altered compared to the standard tasks.

For instance, the models had to perform additions in number systems other than the standard decimal system, evaluate chess moves with minor changes to the starting positions of the pieces, or place a soft drink upside down.

In standard decimal addition, GPT-4 achieved nearly perfect accuracy of over 95 percent. However, in the base 9 number system, its performance dropped below 20 percent. Similar patterns were observed in other tasks, such as programming, spatial reasoning, and logical reasoning.

Nevertheless, the researchers highlight that the patterns of the counterfactual tasks were usually above chance level, indicating some generalization ability. So, they are likely not just learning by rote. However, the researchers cannot exclude the possibility that their counterfactual conditions were included in the AI's training dataset.

Regardless, the significant performance drop compared to standard tasks demonstrates that the models typically resort to non-transferable behaviors specific to standard conditions instead of using abstract, generalizable logical thinking.

The study also discovered that the models' performance in counterfactual tasks correlated with the frequency of the respective conditions. For example, GPT-4 showed the best counterfactual performance in the guitar chord task for the relatively frequent alternative drop-D tuning. This suggests a memory effect where the models perform better in more frequent conditions.

The researchers also explored the impact of chain-of-thought prompting (without examples), a technique where the model is asked to reason in steps. This method improved performance in most cases but could not completely close the gap between the standard and counterfactual tasks.

The researchers argue that the success of existing language models in standard tasks should not be considered sufficient evidence of their general ability to solve the target task. They stress the importance of distinguishing between recalling memorized solutions and genuine reasoning.

Recent experiments and studies have demonstrated the limited reasoning abilities of large language models. The AI industry's ultimate goal is to develop AI models with a combination of reasoning capabilities and generative AI, allowing GenAI systems to apply the knowledge learned from training examples to new examples.

A study on the quality of ChatGPT code generation revealed that GPT-3.5 could reliably solve code tasks from the LeetCode training website that were published before the end of training in 2021. However, performance on tasks published after the end of the training period dropped significantly.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.