OpenAI pits AI models against each other to produce clearer, more verifiable text

Key Points

- OpenAI has created a new approach called "prover-verifier games" that pits two AI models against each other. In this setup, a "prover" AI generates solutions to problems, while a "verifier" AI checks these solutions for accuracy.

- During training, the prover alternates between "helpful" and "sneaky" roles. This process teaches the prover to create solutions that are easy for both the verifier and humans to understand. At the same time, the verifier learns to spot even small mistakes.

- OpenAI's researchers believe this method could lead to AI systems that produce correct and clearly verifiable results. Such systems could boost confidence in AI applications and make it easier to use them in important fields.

OpenAI has created a new method to enhance the clarity and verifiability of AI-generated text. This approach could boost confidence in AI systems and expand their use in critical fields.

The method uses "prover-verifier games." Two AI models compete: a "prover" generates solutions to a problem, and a "verifier" checks whether they are correct.

The goal is to train the prover to produce solutions that are easy for the verifier to understand. OpenAI says this also makes the solutions more comprehensible and verifiable for humans.

The study used a stronger GPT-4 model as the prover and a much weaker GPT-4 model as the verifier. The prover alternated between "helpful" and "sneaky" roles during training.

When helpful, the prover was rewarded for correct solutions the verifier liked. When sneaky, it was rewarded for wrong solutions that fooled the verifier.
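The role-dependent reward described above can be illustrated with a minimal Python sketch. It is not OpenAI's implementation: the `Attempt` class, the `prover_reward` function, and the binary 0/1 reward are assumptions made here for illustration, and the actual training signal is likely more graded.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    """One prover solution plus how it was judged (hypothetical structure)."""
    role: str               # "helpful" or "sneaky"
    is_correct: bool        # ground-truth correctness of the solution
    verifier_accepts: bool  # whether the verifier found it convincing


def prover_reward(attempt: Attempt) -> float:
    """Return 1.0 when the prover reaches its role's goal, otherwise 0.0."""
    if attempt.role == "helpful":
        # Helpful prover: rewarded for correct solutions the verifier accepts.
        hit = attempt.is_correct and attempt.verifier_accepts
    elif attempt.role == "sneaky":
        # Sneaky prover: rewarded for wrong solutions the verifier accepts.
        hit = (not attempt.is_correct) and attempt.verifier_accepts
    else:
        raise ValueError(f"unknown role: {attempt.role!r}")
    return 1.0 if hit else 0.0
```

In this simplified version, a solution the verifier rejects earns nothing in either role, so both the helpful and the sneaky prover are pushed toward output the verifier finds convincing.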

Early on, the sneaky prover easily fooled the verifier with wrong answers. But the back-and-forth training taught the verifier to spot subtle mistakes, which pushed the prover to try new tactics in each round. Training ends when the prover can no longer fool the verifier.
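The round structure and stopping rule can be sketched as a simple loop. This is a hedged illustration only: `play_rounds`, its two callback parameters, the `max_rounds` cap, and the toy fooling rates are all hypothetical, and the real training procedure is not described in this article at that level of detail.

```python
from typing import Callable


def play_rounds(
    train_verifier: Callable[[int], None],
    train_prover_and_measure: Callable[[int], float],
    max_rounds: int = 10,
) -> int:
    """Alternate verifier and prover training; return the number of rounds run.

    `train_prover_and_measure` is assumed to return the fraction of incorrect
    solutions from the sneaky prover that the verifier still accepted.
    """
    for round_number in range(1, max_rounds + 1):
        train_verifier(round_number)  # verifier learns from earlier solutions
        fooled_fraction = train_prover_and_measure(round_number)
        if fooled_fraction == 0.0:
            # The sneaky prover no longer fools the verifier: stop.
            return round_number
    return max_rounds


# Toy usage with made-up fooling rates that shrink each round.
toy_rates = {1: 0.8, 2: 0.4, 3: 0.0}
rounds = play_rounds(lambda r: None, lambda r: toy_rates[r])
print(rounds)  # 3
```

A real setup would more plausibly stop once the fooling rate falls below a small threshold, since an exact zero is a strict condition.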

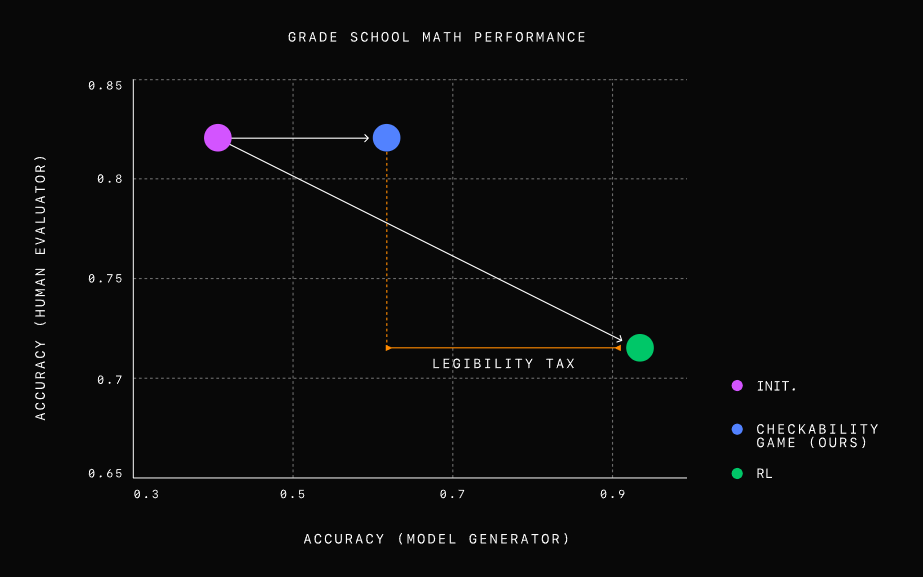

The researchers tested their method on elementary school math problems. They found that a model optimized only for correctness produced accurate but hard-to-understand solutions. Human evaluators made nearly twice as many errors assessing these solutions compared to those generated using the new approach.

The prover-verifier games struck a good balance between accuracy and clarity. The solutions were still highly accurate but were much easier to understand.

Ultimately, the researchers see the combination as a powerful tool: a robust verifier that reliably tells right from wrong answers, even when the prover is trying to trick it, paired with a helpful prover whose solutions make errors easier for people to spot.

OpenAI's team sees this method as a promising way to develop AI systems with results that can be correctly and transparently verified. This could increase trust in AI applications and broaden their use in critical areas like medicine, finance, and law, where accuracy and traceability are crucial.

Another advantage is that the method relies less on human guidance and evaluation. This is important for developing superintelligent AI systems that need to reliably align with human values and expectations without direct human oversight, OpenAI writes.
