Purdue's UniT gives robots a more human-like sense of touch

A team from Purdue University has introduced a new technique called UniT that allows robots to process tactile information more efficiently and transfer it to various tasks.

Scientists at Purdue University have developed a method that enables robots to process tactile information more effectively. The technique, dubbed UniT (Unified Tactile Representation), uses machine learning to turn simple tactile data into a compact representation that can be reused across tasks.

The goal is to give robots a sense of touch that complements vision, much as humans rely on both when handling objects. What sets UniT apart is that the tactile representation can be trained on data from a single simple object. In their experiments, the researchers used either a hex key or a small ball.

Video: Xu, Uppuluri et al.

The researchers captured the tactile data with a GelSight sensor, which consists of an elastic gel with embedded markers that deforms on contact. A camera records these deformations, and the resulting images show how an object presses into or moves across the gel, revealing its shape, position, and the forces acting on it.
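To illustrate why marker motion carries information about contact, here is a minimal sketch that compares marker positions before and after a touch. It is not part of UniT, which according to the article learns from the tactile images themselves; the function name `marker_displacements`, the coordinates, and the nearest-neighbour matching are all assumptions made for this example, and the matching only works when markers move less than half the marker spacing.

```python
import numpy as np

def marker_displacements(reference, deformed):
    """Return one displacement vector per reference marker.

    reference: (N, 2) marker positions with nothing touching the gel.
    deformed:  (M, 2) marker positions detected during contact.
    Each reference marker is matched to its nearest deformed marker,
    which assumes displacements are small relative to marker spacing.
    """
    diffs = reference[:, None, :] - deformed[None, :, :]
    nearest = np.argmin(np.sum(diffs ** 2, axis=-1), axis=1)
    return deformed[nearest] - reference

# Hypothetical pixel coordinates of four markers on a 2x2 grid.
reference = np.array([[10.0, 10.0], [20.0, 10.0], [10.0, 20.0], [20.0, 20.0]])
deformed = np.array([[10.0, 10.0], [21.0, 10.5], [10.0, 20.0], [22.0, 21.0]])

flow = marker_displacements(reference, deformed)
print(flow)
```

In this toy data, the markers on the right side of the grid shift while those on the left stay put, which is the kind of pattern that hints at where an object is pushing and in which direction.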

The researchers then use a VQ-VAE (vector-quantized variational autoencoder) to compress the information in the tactile images into a compact form. This allows the robot to learn efficiently and apply what it has learned to various tasks.
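The step that distinguishes a VQ-VAE from an ordinary autoencoder is vector quantization: each latent vector produced by the encoder is replaced by the closest entry in a learned codebook. The sketch below shows only that lookup, using a tiny hand-written codebook; the function name `quantize`, the codebook, and the latent values are assumptions for illustration and are not taken from the UniT code. Training the encoder, decoder, and codebook is omitted.

```python
import numpy as np

def quantize(latents, codebook):
    """Replace each latent vector with its nearest codebook entry.

    latents:  (N, D) array of encoder outputs.
    codebook: (K, D) array of code vectors.
    Returns the chosen code indices (N,) and the quantized vectors (N, D).
    """
    # Squared Euclidean distance between every latent and every code.
    diffs = latents[:, None, :] - codebook[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)   # shape (N, K)
    indices = np.argmin(dists, axis=1)    # shape (N,)
    return indices, codebook[indices]

# Hypothetical codebook with four 2-D codes and three encoder outputs.
codebook = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
latents = np.array([[0.9, 0.1], [0.1, 0.8], [0.2, 0.1]])

indices, quantized = quantize(latents, codebook)
print(indices)    # [1 2 0]
print(quantized)
```

Because every latent is snapped to one of a fixed set of codes, a tactile image can be summarized by a short list of code indices, which is one reason such representations are compact.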

UniT significantly improves robot performance

Experiments showed that the representation learned with UniT transfers well to unseen objects. For example, the system could reconstruct the contact geometry and force distribution when touching various objects, even though it had been trained on only one simple object.

UniT also enables the robot to handle different tasks without additional training, such as recognizing the position of a USB connector or precisely grasping objects. It outperforms previous methods that rely only on visual information or treat the sense of touch like an additional camera.

Video: Xu, Uppuluri et al.

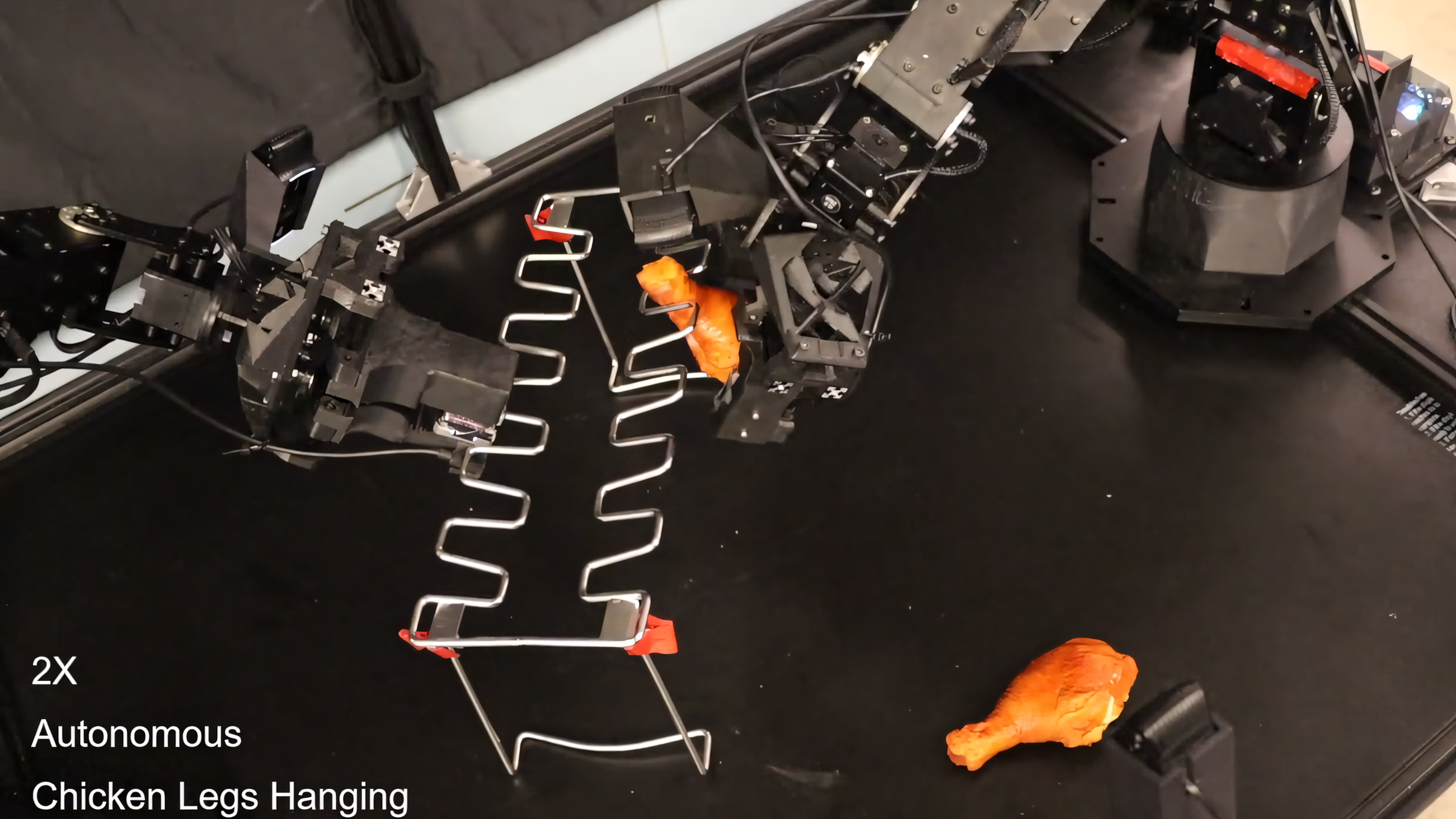

The researchers demonstrated the effectiveness of UniT through several robotic tasks. In 3D pose estimation of a USB connector, the method significantly outperformed other approaches. UniT-based control also proved superior in more exotic manipulation tasks such as hanging chicken legs or grasping fragile chips.

Video: Xu, Uppuluri et al.

The researchers see potential for further developments. In the future, the method could be extended to soft objects or complemented with physical models.

More information and the code are available on GitHub.
