Meta's new 'Sapiens' AI models can analyze human images with unprecedented accuracy

Meta has launched a new family of AI models called "Sapiens" that focus on analyzing images containing humans.

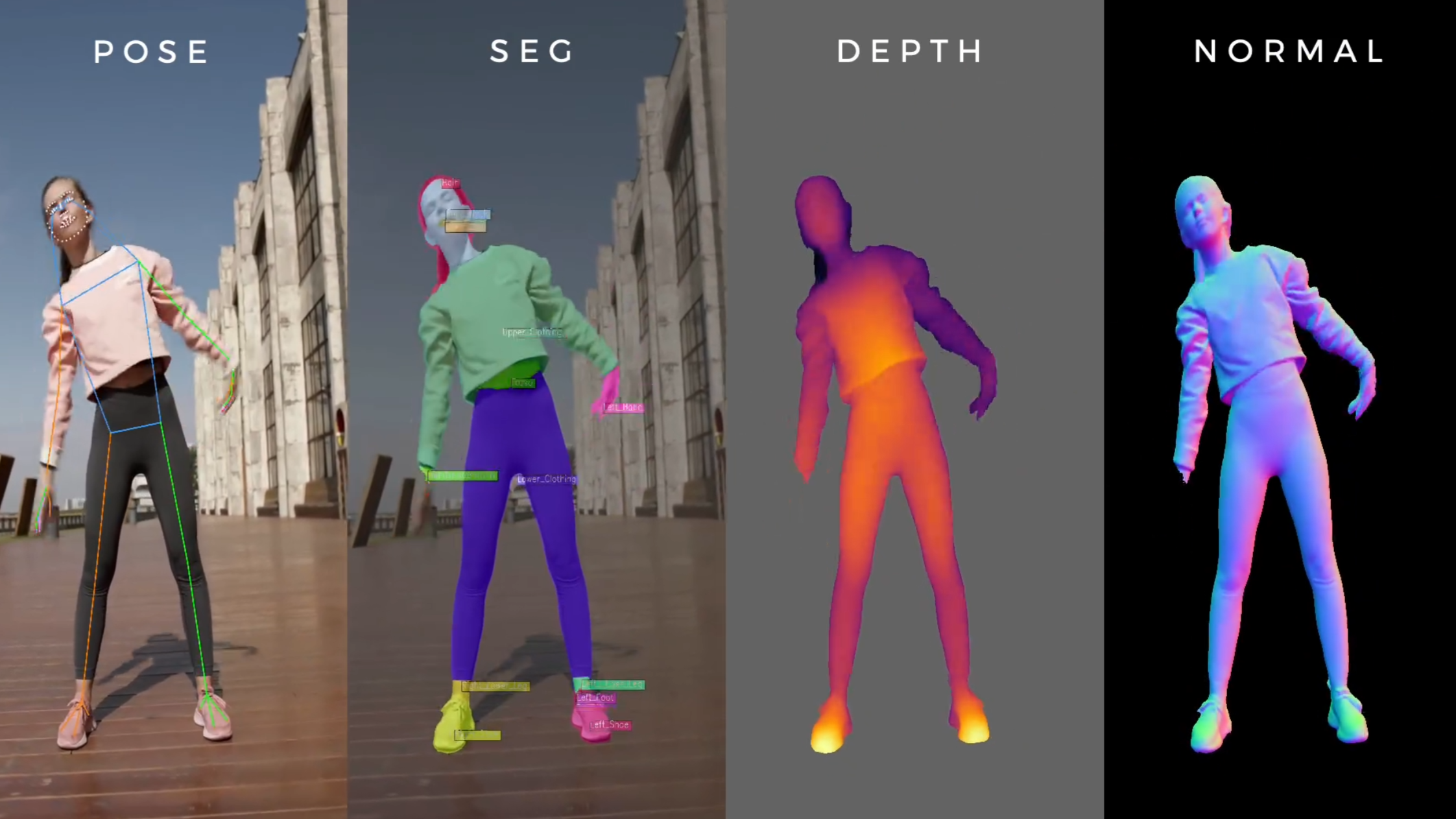

These models were pre-trained on a dataset of 300 million human images and can perform various tasks including 2D pose estimation, body segmentation, depth estimation, and surface normal estimation. The latter determines the orientation of surfaces in three-dimensional space for each point in an image. This information is crucial for understanding the 3D structure of objects and people in images and plays a key role in creating realistic lighting for 3D reconstructions.
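
To make the idea of a surface normal concrete, here is a minimal sketch that derives per-pixel normals from a depth map using finite differences. It is only an illustration of the concept and not how Sapiens works; the article describes the models as estimating surface normals as a task of their own. The function name `normals_from_depth` and the plain nested-list depth format are assumptions made for this example.

```python
import math


def normals_from_depth(depth):
    """Return a grid of unit normals (nx, ny, nz) for a 2D list of depth values.

    Illustrative only: treats the depth map as a surface z = f(x, y) on a
    unit pixel grid and ignores camera intrinsics.
    """
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            # Central differences inside the grid, one-sided at the borders.
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dz_dx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dz_dy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            # A surface z = f(x, y) has the unnormalized normal (-dz/dx, -dz/dy, 1).
            length = math.sqrt(dz_dx ** 2 + dz_dy ** 2 + 1.0)
            row.append((-dz_dx / length, -dz_dy / length, 1.0 / length))
        normals.append(row)
    return normals


# A flat surface faces the camera; a ramp along x tilts the normal away from +x.
flat = normals_from_depth([[2.0] * 5 for _ in range(4)])
ramp = normals_from_depth([[float(x) for x in range(5)] for _ in range(4)])
```

Each normal is a unit vector, so a renderer can compare it directly with a light direction, which is why this information matters for relighting 3D reconstructions.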

According to Meta, the Sapiens models significantly outperform existing approaches in these tasks. For instance, in body segmentation, which identifies individual body parts in images, the Sapiens-2B model achieves an improvement of over 17 percentage points compared to previous methods.
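
The article does not name the metric behind this figure. As a rough sketch, assuming a mean intersection-over-union (mIoU) score, which is a common choice for segmentation, the following shows how such a score and a gap in percentage points could be computed. The function `mean_iou` and the toy labels are hypothetical and do not come from Meta's evaluation.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union across classes, for flat lists of labels.

    Classes that appear in neither prediction nor target are skipped.
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with two body-part classes (0 and 1) over four pixels.
target = [0, 0, 1, 1]
baseline_pred = [0, 1, 1, 1]   # one pixel mislabeled
improved_pred = [0, 0, 1, 1]   # all pixels correct

baseline = mean_iou(baseline_pred, target, num_classes=2)
improved = mean_iou(improved_pred, target, num_classes=2)

# A percentage-point gap is the plain difference of the two scores times 100,
# not the relative (percent) change between them.
gap_points = (improved - baseline) * 100
```

The distinction matters when reading the claim: a gain of 17 percentage points means the score itself rose by 17 points on a 0 to 100 scale, which can be a much larger relative improvement.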

Video: Meta

The researchers note that the models' performance improves with size: The largest model, Sapiens-2B, has 2 billion parameters and was trained natively at an image resolution of 1024 by 1024 pixels. Meta claims this allows for more detailed analysis than conventional models with lower resolution.

Sapiens models could enable better data sets

The researchers believe that pre-training on the large, curated dataset of human images is a key factor in the Sapiens models' performance. According to the team, this leads to better generalization in real-world scenarios than the usual approach of training on general image data. Meta's Segment Anything 2 is an example of a system trained on general image data.

Despite the improved performance, the team acknowledges that challenges remain with complex poses, crowds, and significant occlusions. The Sapiens models could also serve as a tool for annotating large amounts of real-world data to develop the next generation of human-centric image analysis systems, the team said.

Meta is making the Sapiens models available to the research community on GitHub.
