Apple's iPhone keynote: AI news recap (spoiler: not much to report)

Key Points

- Apple's iPhone keynote revealed limited new features for Apple Intelligence, with the first AI functions coming to iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 in October. The free software update will roll out first in US English, with localized versions for other English-speaking countries in December and additional language support in 2025.

- "Visual Intelligence," a new feature accessed via the new physical camera button on the iPhone 16 models, lets users quickly gather information about their surroundings, such as identifying dog breeds or checking restaurant ratings.

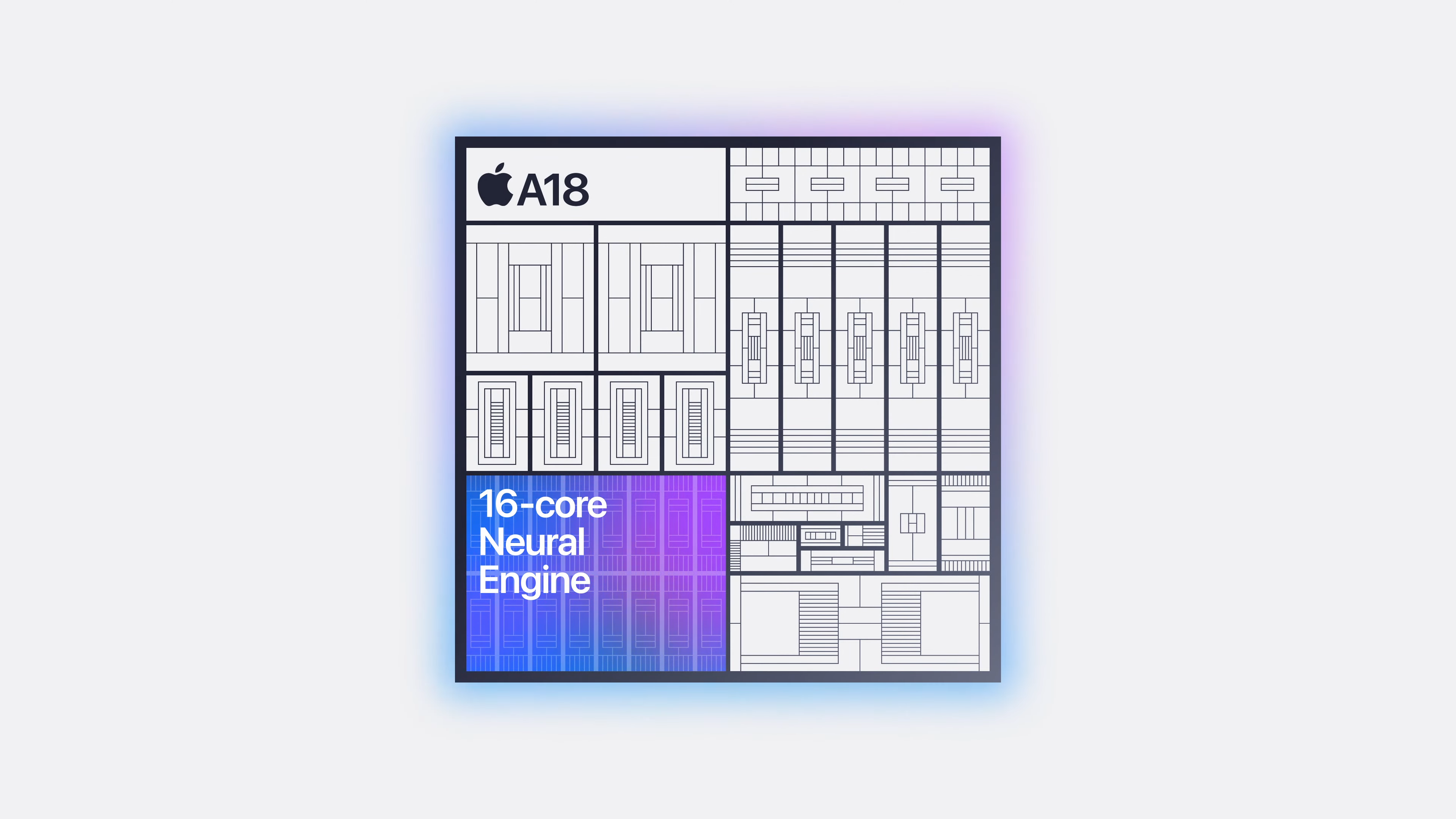

- The iPhone 16 Pro models feature the A18 Pro chip, offering 15% faster AI processing and a dedicated 16-core AI accelerator for improved machine learning performance.

Apple had already previewed the upcoming features of Apple Intelligence for iOS 18 at WWDC. As a result, the much-anticipated iPhone keynote was somewhat underwhelming from an AI perspective.

At its annual iPhone event, alongside introducing the iPhone 16 and other devices, Apple briefly touched on artificial intelligence. The initial AI capabilities, announced in June under the "Apple Intelligence" brand, are set to arrive in iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 starting in October. However, the feature set will be limited at first, with more functions rolling out in the coming months.

Apple Intelligence is built into iOS 18, iPadOS 18, and macOS Sequoia. According to Apple, it uses Apple Silicon to understand and generate language and images, work across apps, and draw on users' personal context to simplify everyday tasks, all while preserving privacy and security.

Google Lens for Apple users

According to Apple, a typical Siri request that iPhones will be able to handle is: "Send Erika the picture from last Thursday's barbecue." Apple Intelligence understands the image content, links it with information such as the date the photo was taken, and then sends it to the contact via an app.

Many of the Apple Intelligence features shown had already been presented at WWDC. One new feature is "Visual Intelligence," which iPhone 16 models access via the new physical camera button (Camera Control).

This lets users quickly gather information about their surroundings. Apple demonstrated how it can identify dog breeds, check restaurant ratings and opening hours, and add events from a flyer to the calendar.

Video: Apple

Visual Intelligence also provides access to third-party tools. For example, users can search on Google (similar to Google Lens) to find where they can buy a photographed item, or use ChatGPT directly from the camera interface. Apple stresses that users always control when third-party tools are used and what information is shared.

Apple says the new iPhone 16 lineup is designed for Apple Intelligence. The iPhone 16 Pro and iPhone 16 Pro Max feature the 3-nanometer A18 Pro chip, which, according to Apple, delivers 15 percent faster AI processing than the iPhone 15 Pro.

The chip also contains a dedicated 16-core AI accelerator, Apple's Neural Engine, which speeds up machine learning tasks. Apple says the new chip is up to twice as fast as the A16, the chip in the standard iPhone 15, and can run "large generative AI models."

Coming soon to an English-speaking country near you

Other features shown included writing tools, which turn keywords into full text or make written paragraphs sound more formal. Notifications, such as those for emails, will show an AI-generated summary instead of the first few words of the content.

Video: Apple

The Photos app is also getting an AI upgrade: users will be able to create movies by entering a description and find photos and video clips using natural-language descriptions. The new "Clean Up" tool removes distracting objects from the background of a photo without changing the subject.

Apple Intelligence will be available as a free software update, with the first set of features rolling out next month in US English for most regions worldwide. The company plans to expand AI capabilities in December to include localized English in Australia, Canada, New Zealand, South Africa, and the UK. Additional language support for Chinese, French, Japanese, and Spanish will follow next year.

A month earlier, Google unveiled the latest generation of its Pixel series, which, much like Apple with Apple Intelligence, bets heavily on built-in AI, in Google's case through deep integration of Gemini.
