AI startup Prime Intellect trains first distributed LLM across three continents

Key Points

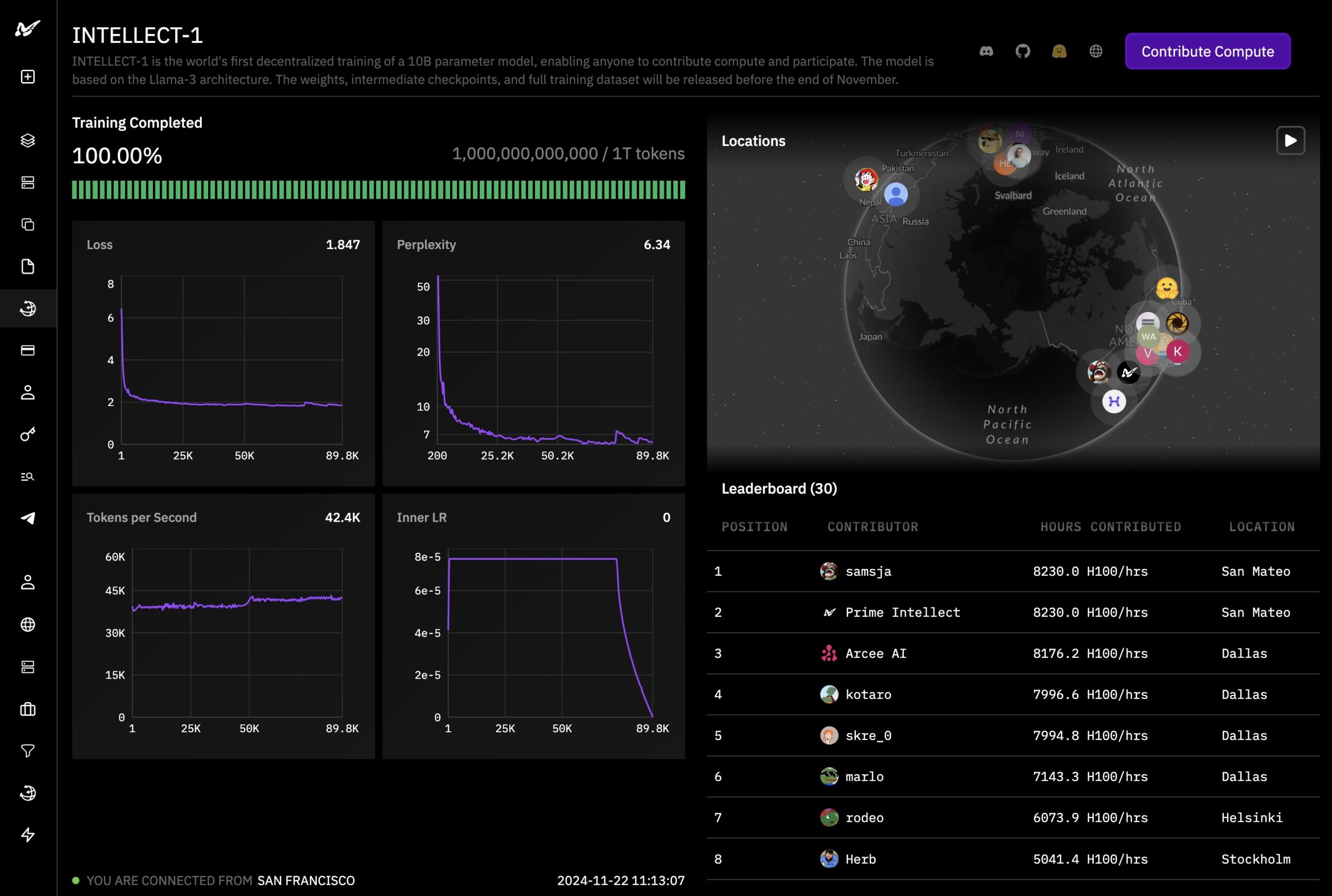

- AI startup Prime Intellect trained Intellect-1, a 10-billion-parameter language model, in just eleven days using a decentralized approach. The company plans to open-source the model within a week.

- The company used OpenDiLoCo, an open-source implementation of DeepMind's Distributed Low-Communication (DiLoCo) method, which enables training on globally distributed devices while reducing communication requirements.

- Intellect-1 is based on the LLaMA-3 architecture, was trained with high-quality open-source datasets, and represents a milestone in the accessibility of AI training. However, it is still relatively small at 10 billion parameters, and the scalability of this approach remains to be seen.

AI startup Prime Intellect says it has completed training a 10-billion-parameter language model using computers spread across the US, Europe, and Asia.

The company claims Intellect-1 is the first language model of this size trained using a distributed approach, and plans to make both Intellect-1 and its training data available as open source next week.

The project aims to show that smaller organizations can build large AI models too. The longer-term vision is to let anyone contribute computing power toward creating transparent, freely available open-source AGI systems.

New training method reduces data transfer requirements

The project uses Prime Intellect's open-source version of DeepMind's Distributed Low-Communication method (DiLoCo), called OpenDiLoCo. This approach lets organizations train AI models across globally distributed systems while minimizing data transfer requirements.
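To make the idea concrete, here is a minimal toy sketch of the DiLoCo pattern: each worker trains locally for many steps, and only the averaged difference between the shared weights and the local weights (the "pseudo-gradient") is exchanged. It is not Prime Intellect's OpenDiLoCo code. The one-parameter model, the `SHARDS` data, the function names, and all hyperparameters are assumptions chosen for illustration, and plain SGD stands in for the AdamW inner optimizer used in DeepMind's paper.

```python
# Toy DiLoCo-style loop: fit y = w * x on data split across three "workers".
# All names and hyperparameters here are illustrative assumptions.

# Hypothetical per-worker shards of (x, y) pairs; the true weight is 3.0.
SHARDS = [
    [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5)],
    [(0.8, 2.4), (1.2, 3.6)],
    [(1.0, 3.0), (2.0, 6.0)],
]

def local_steps(theta, shard, steps, lr):
    """Inner loop: plain SGD on one worker, with no communication."""
    w = theta
    for i in range(steps):
        x, y = shard[i % len(shard)]       # cycle through the local shard
        grad = 2.0 * (w * x - y) * x       # gradient of (w*x - y)^2
        w -= lr * grad
    return w

def diloco_train(shards, rounds=30, inner_steps=20,
                 inner_lr=0.05, outer_lr=0.7, momentum=0.9):
    """Outer loop: one synchronization per round instead of one per step."""
    theta, velocity, syncs = 0.0, 0.0, 0
    for _ in range(rounds):
        # Every worker starts from the same shared weights.
        local = [local_steps(theta, s, inner_steps, inner_lr) for s in shards]
        # Pseudo-gradient: average of (shared weights - local weights).
        pseudo_grad = sum(theta - w for w in local) / len(local)
        syncs += 1                          # the only cross-worker exchange
        # Outer step: SGD with Nesterov momentum on the pseudo-gradient.
        velocity = momentum * velocity + pseudo_grad
        theta -= outer_lr * (pseudo_grad + momentum * velocity)
    return theta, syncs

if __name__ == "__main__":
    theta, syncs = diloco_train(SHARDS)
    print(f"learned weight: {theta:.4f}, synchronizations: {syncs}")
```

In this sketch the workers exchange data once every 20 local steps, so the number of synchronizations is 20 times lower than it would be if gradients were shared after every step. That ratio is a property of the toy settings, not a figure reported for Intellect-1.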

Building on this foundation, Prime Intellect created a system for reliable distributed training that can handle computing resources being added or removed on the fly. The system optimizes communication across a worldwide network of graphics cards.
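The article does not describe how Prime Intellect handles nodes joining or leaving. One simple way to picture it, shown purely as a hypothetical sketch, is to average updates only over the workers that actually report in a given round. The function name, the region labels, and the data layout below are all assumptions.

```python
from typing import Dict, List, Optional

def average_live_updates(updates: Dict[str, Optional[List[float]]]) -> List[float]:
    """Average the updates of workers that reported; None marks a dropped worker."""
    live = [u for u in updates.values() if u is not None]
    if not live:
        raise ValueError("no live workers reported this round")
    dim = len(live[0])
    return [sum(u[i] for u in live) / len(live) for i in range(dim)]

# Hypothetical rounds: all three regions report, then one drops out.
round_1 = {"us": [1.0, 2.0], "europe": [3.0, 4.0], "asia": [5.0, 6.0]}
round_2 = {"us": [1.0, 2.0], "europe": [3.0, 4.0], "asia": None}

if __name__ == "__main__":
    print(average_live_updates(round_1))
    print(average_live_updates(round_2))
```

Because the average is taken over whoever is present, a round can complete with fewer workers instead of stalling, and a worker that rejoins later is simply included again.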

The model is based on the LLaMA-3 architecture and trained on open datasets. Its training data of more than 6 trillion tokens comes primarily from four sources: Fineweb-edu, DCLM, Stack v2, and OpenWebMath.

Looking ahead

Prime Intellect calls this a first step toward bigger goals. The company wants to scale up its distributed training to work with more advanced open-source models. It is building a system that lets anyone contribute computing power securely, with training runs open to public participation.

The company says open-source AI development reduces the risks of centralized control. However, it acknowledges that competing with major AI labs requires a coordinated effort, and it is seeking support in the form of collaboration and computing resources.

While Intellect-1 represents progress in making AI development more accessible, its 10 billion parameters make it relatively small by today's standards. Benchmark results have not been published yet, but the model is unlikely to match larger commercial AI models, or even smaller open-source ones.

The main question is whether Prime Intellect can take this approach beyond proof-of-concept into something that can meaningfully advance AI development.
