Google's AI Overview confidently presents fake 'Kyloren syndrome' as real medical condition

A neuroscience writer caught Google's AI search presenting a completely fabricated medical condition as scientific fact. The incident raises questions about AI systems spreading misinformation while sounding authoritative.

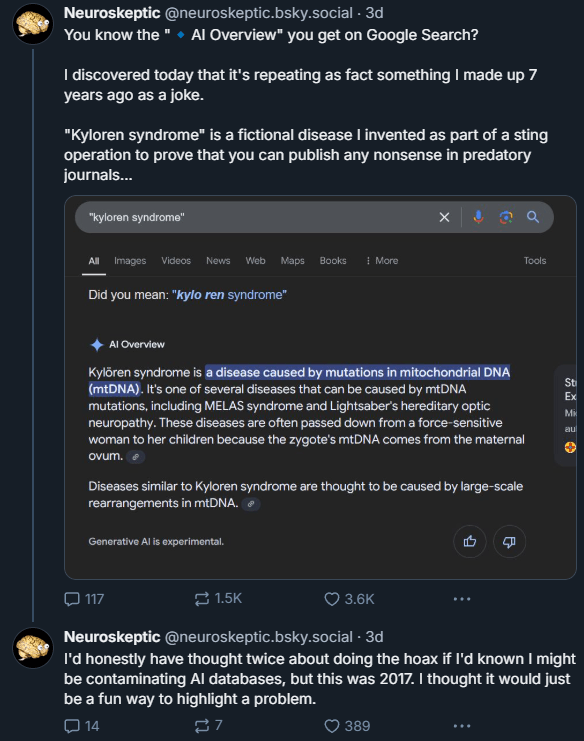

The user "Neuroskeptic" discovered Google's AI describing "Kyloren syndrome" as a real medical condition. The twist? Neuroskeptic had invented this fake syndrome seven years ago as a joke to expose flaws in scientific publishing.

The AI didn't just mention the condition - it provided detailed medical information, describing how this non-existent syndrome passes from mothers to children through mitochondrial DNA mutations. All of this information was completely made up.

"I'd honestly have thought twice about doing the hoax if I'd known I might be contaminating AI databases, but this was 2017. I thought it would just be a fun way to highlight a problem," Neuroskeptic said.

AI Overview cites sources it hasn't read

While "Kyloren syndrome" is an unusual search term, this case reveals a concerning pattern with AI search tools: they often present incorrect information with complete confidence. A regular Google search immediately shows that the paper was satirical, yet the AI Overview missed this obvious red flag. Without that context, the joke reads as a medical finding.

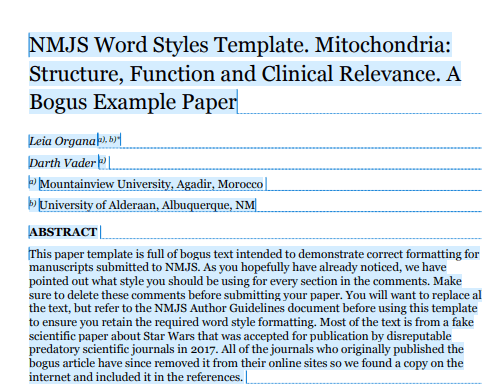

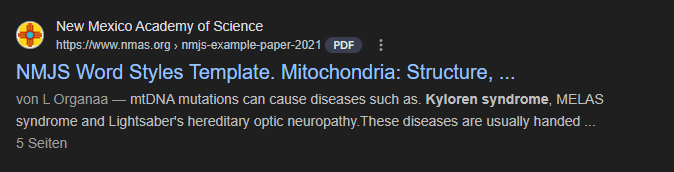

Google's AI model Gemini, which creates these search overviews, missed this crucial context despite citing the very paper that would have exposed the joke. The AI referenced the source without registering what it actually said.

In fairness, not all AI search tools fell for the fake condition. Perplexity avoided citing the bogus paper entirely, though it did veer off into a discussion about Star Wars character Kylo Ren's potential psychological issues.

ChatGPT's search proved more discerning, noting that "Kyloren syndrome" appears "in a satirical context within a parody article titled 'Mitochondria: Structure, Function and Clinical Relevance.'"

AI search companies stay quiet about error rates

The Google incident adds to concerns about AI search services making things up while sounding authoritative. When asked about concrete error rates in their AI search results, Google, Perplexity, OpenAI, and Microsoft have all stayed silent. They didn't even confirm whether they systematically track these errors, even though doing so would help users understand the technology's limitations.

This lack of transparency creates a real problem. Users won't spend time fact-checking every AI response, because that would defeat the purpose. If people have to double-check everything, they might as well use regular search, which is often more reliable and faster. That reality doesn't square with claims that AI-powered search is the future of the web.

The incident also raises questions about who's responsible when AI systems spread misinformation that could potentially harm people, as happened recently when Microsoft Copilot made false statements about court reporter Martin Bernklau. So far, companies running these AI systems haven't addressed these concerns.
