OLMo 2 32B sets a new standard for true open-source LLMs with public code, weights, and data

Key Points

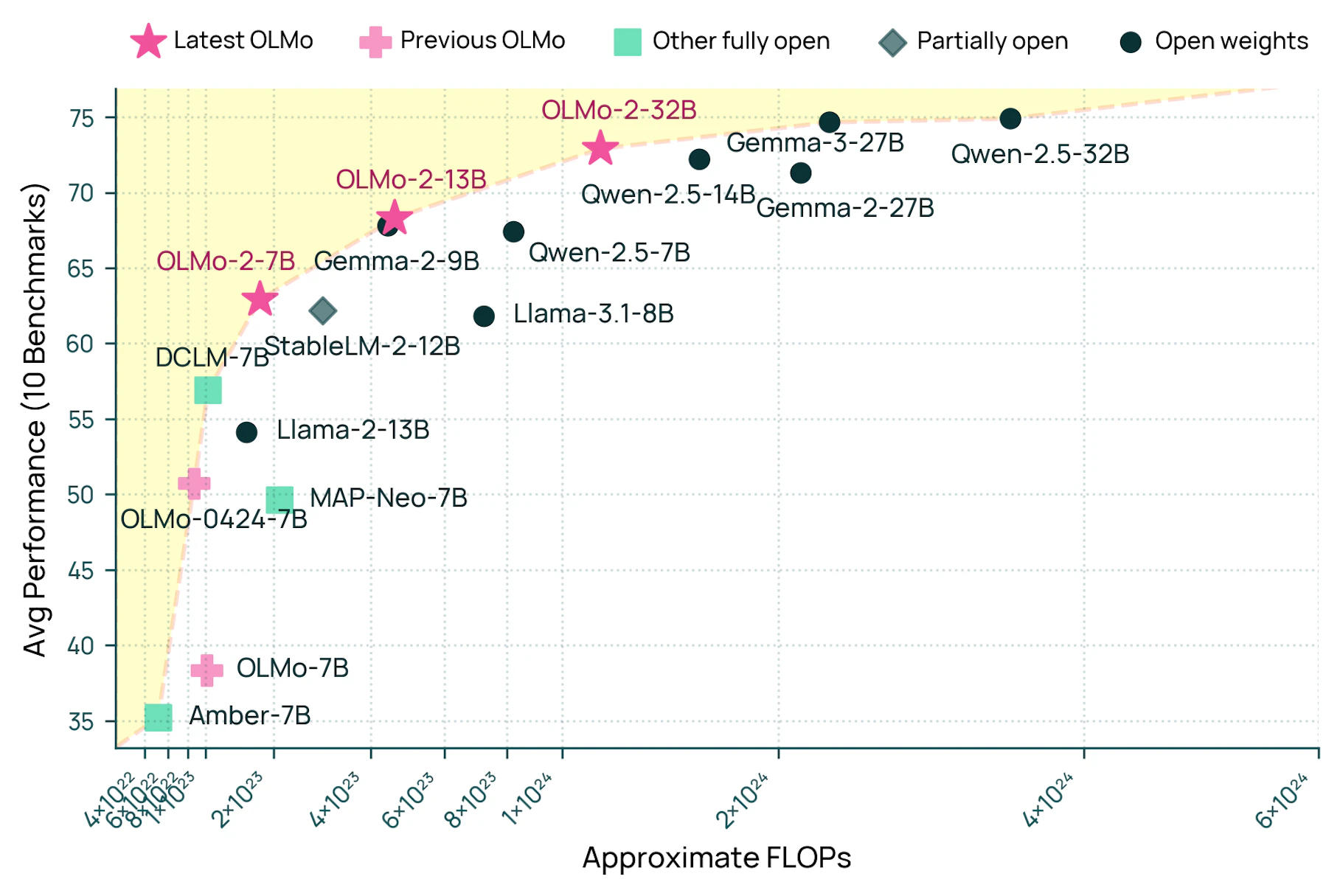

- Researchers at the Allen Institute for AI (Ai2) have developed OLMo 2 32B, a new open-source language model that matches the performance of commercial models like GPT-3.5-Turbo.

- The model was trained using just one-third of the computing resources required by comparable models, utilizing a three-phase process: basic language training, refinement with high-quality documents, and instruction training.

- Ai2 has made the entire development process transparent by releasing the source code, training checkpoints, the "Dolmino" dataset, and all technical details to the public.

A new open-source language model has achieved performance comparable to leading commercial systems while maintaining complete transparency.

The Allen Institute for Artificial Intelligence (Ai2) announced that its OLMo 2 32B model outperforms both GPT-3.5-Turbo and GPT-4o mini while making its code, training data, and technical details publicly available.

The model stands out for its training efficiency: it needed only a third of the computing resources used to train similar models such as Qwen2.5-32B. This makes the approach particularly relevant for researchers and developers working with limited resources.

Building a transparent AI system

The development team used a three-phase training approach. The model first learned basic language patterns from 3.9 trillion tokens, then studied high-quality documents and academic content, and finally learned to follow instructions using the Tülu 3.1 framework, which combines supervised and reinforcement learning techniques.

To manage the process, the team created OLMo-core, a new software platform that efficiently coordinates multiple computers while preserving training progress. The actual training took place on Augusta AI, a supercomputer network of 160 machines equipped with H100 GPUs, reaching processing speeds over 1,800 tokens per second per GPU.
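The throughput figure above can be turned into a rough sense of scale. The following is a back-of-envelope sketch, not Ai2's own accounting: it assumes 8 GPUs per machine (the article does not state this), treats the quoted 1,800 tokens per second per GPU as a sustained rate, and counts only a single pass over the 3.9 trillion first-phase tokens. The class and field names are hypothetical.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass(frozen=True)
class ClusterEstimate:
    """Rough throughput model for a GPU training cluster (illustrative only)."""

    machines: int
    gpus_per_machine: int  # ASSUMPTION: not stated in the article
    tokens_per_sec_per_gpu: float

    @property
    def total_gpus(self) -> int:
        return self.machines * self.gpus_per_machine

    @property
    def cluster_tokens_per_sec(self) -> float:
        return self.total_gpus * self.tokens_per_sec_per_gpu

    def days_for(self, tokens: float) -> float:
        """Days needed to process `tokens` at the sustained cluster rate."""
        return tokens / self.cluster_tokens_per_sec / SECONDS_PER_DAY


# 160 machines and 1,800 tokens/s/GPU come from the article;
# 8 GPUs per machine is an assumption made for this sketch.
estimate = ClusterEstimate(machines=160, gpus_per_machine=8, tokens_per_sec_per_gpu=1_800)
first_phase_tokens = 3.9e12  # one pass over the first-phase data

print(f"GPUs in cluster:        {estimate.total_gpus}")
print(f"Cluster tokens/second:  {estimate.cluster_tokens_per_sec:,.0f}")
print(f"Days for one pass:      {estimate.days_for(first_phase_tokens):.1f}")
```

Under these assumptions, a single pass over the first-phase data takes roughly three weeks. The real training run would differ, since the estimate ignores restarts, evaluation, the later training phases, and any repeated passes over the data.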

While many AI projects, such as Meta's Llama, claim open-source status, OLMo 2 meets all three essential criteria: public model code, weights, and training data. The team has released everything, including the Dolmino training dataset, enabling complete reproducibility and analysis.

"With just a bit more progress everyone can pretrain, midtrain, post-train, whatever they need to get a GPT 4 class model in their class. This is a major shift in how open-source AI can grow into real applications," says Nathan Lambert of Ai2.

The release builds on Ai2's earlier work with Dolma in 2023, which helped establish a foundation for open-source AI training. The team has also uploaded various checkpoints, i.e., snapshots of the language model saved at different points during training. A paper released in December along with the 7B and 13B versions of OLMo 2 provides more technical background.

The gap between open- and closed-source AI systems has narrowed to about 18 months, according to Lambert's analysis. While OLMo 2 32B matches Google's Gemma 3 27B as a base model, Gemma 3 shows stronger performance after fine-tuning, suggesting room for improvement in open-source post-training methods.

The team plans to enhance the model's logical reasoning and expand its ability to handle longer texts. Users can test OLMo 2 32B through Ai2's Chatbot Playground.

In January, Ai2 also released the larger Tülu-3-405B model, which surpasses GPT-3.5 and GPT-4o mini. Lambert explains that it isn't fully open source, however, since the lab wasn't involved in its pretraining.
