Microsoft's Phi-4-reasoning models outperform larger models and run on your laptop or phone

Microsoft is expanding its Phi series of compact language models with three new variants designed for advanced reasoning tasks.

The new models, Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning, belong to Microsoft's Phi family of small language models. They are optimized to handle complex problems through structured reasoning and internal reflection while remaining lightweight enough to run on lower-end hardware, including mobile devices.

High efficiency with fewer parameters

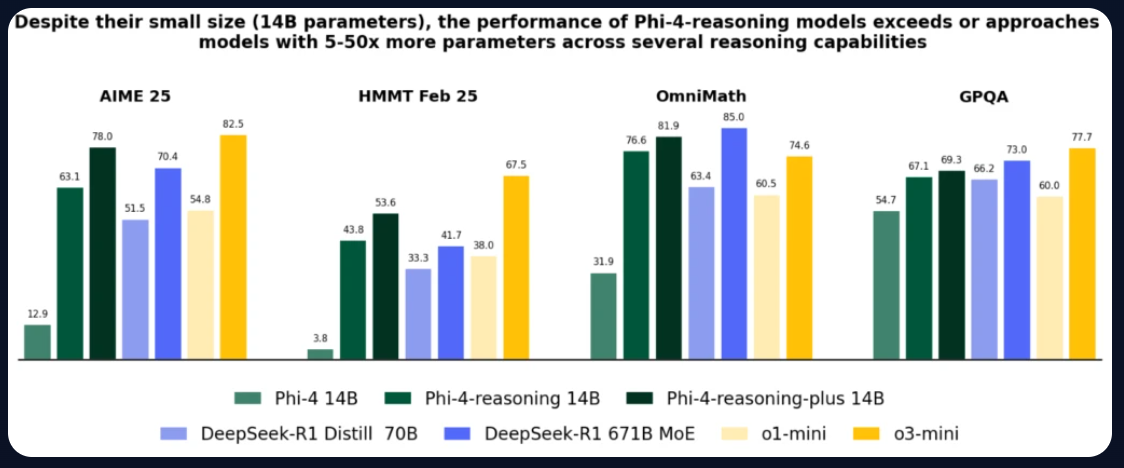

Phi-4-reasoning has 14 billion parameters and was trained with supervised fine-tuning on reasoning traces generated by OpenAI's o3-mini. A more advanced version, Phi-4-reasoning-plus, adds a reinforcement learning stage and generates about 1.5 times as many tokens as the base model. This yields higher accuracy but also increases response time and computational cost.
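The cost of Phi-4-reasoning-plus's longer outputs can be put into rough numbers. The sketch below assumes, hypothetically, a base reasoning trace of 10,000 tokens and a constant local decode rate of 20 tokens per second; neither figure comes from Microsoft. It also uses the common rule of thumb of about two floating-point operations per parameter per generated token. Under these assumptions, wall-clock time and decode compute both grow by the same 1.5× factor as the output length.

```python
# Back-of-envelope sketch: how ~1.5x more output tokens affects response time
# and decode compute. BASE_TOKENS and RATE are hypothetical; a constant decode
# rate ignores the growing attention cost of longer contexts.

def response_time_s(output_tokens: int, tokens_per_second: float) -> float:
    """Wall-clock seconds to generate output_tokens at a fixed decode rate."""
    return output_tokens / tokens_per_second


def decode_flops(params: float, output_tokens: int) -> float:
    """Rough decode cost using the ~2 FLOPs per parameter per token rule of thumb."""
    return 2.0 * params * output_tokens


BASE_TOKENS = 10_000  # hypothetical trace length for Phi-4-reasoning
PLUS_FACTOR = 1.5     # token ratio reported for Phi-4-reasoning-plus
RATE = 20.0           # hypothetical tokens per second on local hardware
PARAMS = 14e9         # both models have 14 billion parameters

plus_tokens = int(BASE_TOKENS * PLUS_FACTOR)

base_time = response_time_s(BASE_TOKENS, RATE)
plus_time = response_time_s(plus_tokens, RATE)
compute_ratio = decode_flops(PARAMS, plus_tokens) / decode_flops(PARAMS, BASE_TOKENS)

print(f"base: {base_time:.0f} s, plus: {plus_time:.0f} s")
print(f"compute ratio: {compute_ratio:.2f}")
```

In practice the increase is somewhat worse than linear, because each additional token attends over a longer context.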

According to Microsoft, both models outperform larger language models such as OpenAI's o1-mini and DeepSeek-R1-Distill-Llama-70B—even though the latter is five times bigger. On the AIME-2025 benchmark, a qualifier for the U.S. Mathematical Olympiad, the Phi models also surpass DeepSeek-R1, which has 671 billion parameters.
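The size comparisons reduce to simple division. This sketch uses the parameter counts given in the article, taking the 70 billion figure from the model name DeepSeek-R1-Distill-Llama-70B. These are total parameter counts: DeepSeek-R1 is a mixture-of-experts model that activates roughly 37 billion parameters per token, so the per-token compute gap is smaller than the raw ratio suggests.

```python
# Total parameter counts in billions, as stated in the article.
PHI4_REASONING_B = 14
COMPETITORS_B = {
    "DeepSeek-R1-Distill-Llama-70B": 70,  # dense model, size taken from its name
    "DeepSeek-R1": 671,                   # mixture-of-experts, total parameters
}


def size_ratio(other_b: float, reference_b: float = PHI4_REASONING_B) -> float:
    """How many times more total parameters a model has than Phi-4-reasoning."""
    return other_b / reference_b


for name, params_b in COMPETITORS_B.items():
    print(f"{name}: {size_ratio(params_b):.1f}x the parameters of Phi-4-reasoning")
```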

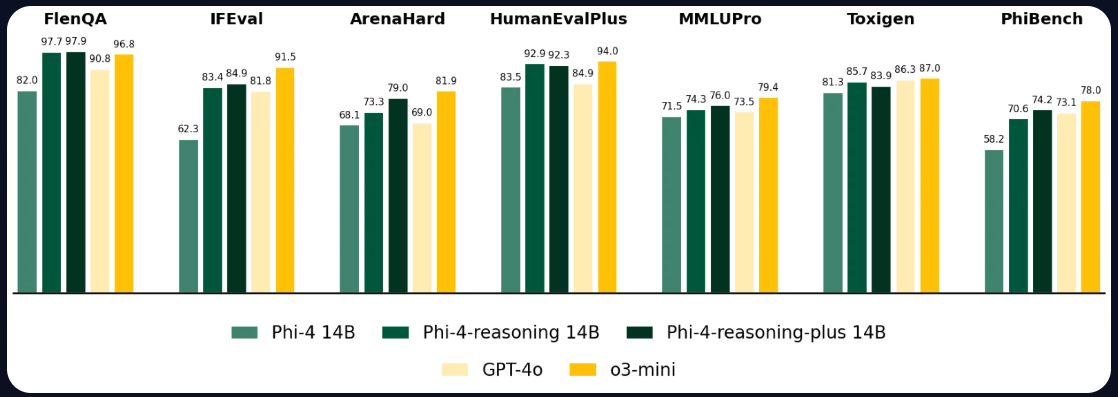

Performance gains are not limited to math or science. Microsoft says the models also perform strongly in programming, algorithmic problem-solving, and planning tasks, and that the gains in logical reasoning carry over to more general capabilities, such as following instructions and answering questions about long documents. "We observe a non-trivial transfer of improvements to general-purpose benchmarks as well," the researchers write.

Phi-4-mini-reasoning brings reasoning to mobile

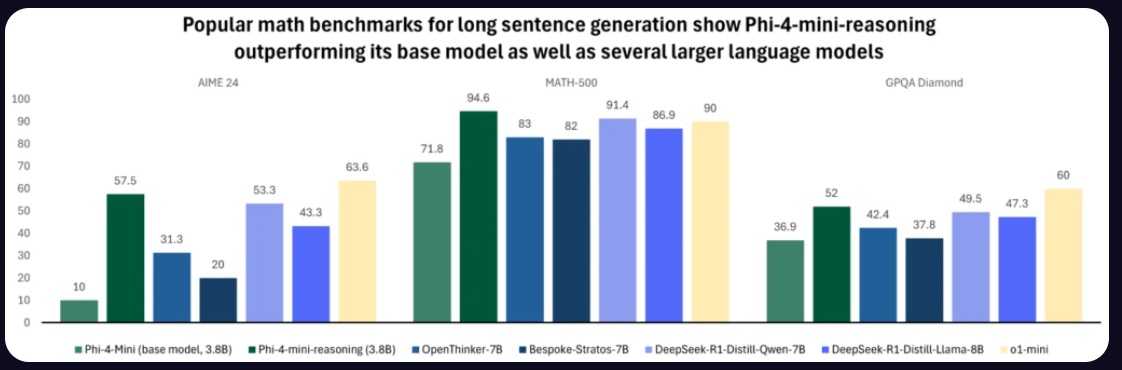

The smallest of the three models, Phi-4-mini-reasoning, is designed for mobile and embedded applications such as educational tools and tutoring systems. It uses a 3.8-billion-parameter architecture and was trained on more than a million math problems, ranging from middle school to postgraduate levels.
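Why a 3.8-billion-parameter model suits phones becomes clearer with a back-of-envelope estimate of weight memory: parameters × bits per weight ÷ 8 bytes. The quantization levels below are illustrative assumptions, not the formats Microsoft ships, and the estimate ignores the KV cache, activations, and runtime overhead, so real memory use is higher.

```python
# Rough weight-memory estimate. Because parameters are given in billions,
# params_billion * bits / 8 yields gigabytes (1 GB = 1e9 bytes) directly.
# Excludes KV cache, activations, and runtime overhead.

def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in gigabytes."""
    return params_billion * bits_per_weight / 8


MODELS = {"Phi-4-mini-reasoning": 3.8, "Phi-4-reasoning": 14.0}
BIT_WIDTHS = (16, 8, 4)  # illustrative precisions, not official release formats

for name, params_b in MODELS.items():
    sizes = ", ".join(
        f"{bits}-bit: {weight_memory_gb(params_b, bits):.1f} GB" for bits in BIT_WIDTHS
    )
    print(f"{name}: {sizes}")
```

At 4-bit precision the mini model's weights need roughly 1.9 GB, compared with about 7 GB for the 14-billion-parameter models.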

Despite its smaller size, Phi-4-mini-reasoning surpasses models like OpenThinker-7B and DeepSeek-R1-Distill-Qwen-7B in several evaluations. In mathematical problem-solving, its results match or exceed those of OpenAI's o1-mini.

Optimized for Windows integration

Microsoft says the new models are already optimized for Windows. A Phi variant called Phi Silica ships on Copilot+ PCs, where it runs directly on the neural processing unit (NPU) for faster responses and lower power usage. It is integrated into tools such as Outlook, for offline summarization, and the "Click to Do" feature, which provides contextual text functions directly on screen.

All three models—Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning—are available with open weights on both Azure AI Foundry and Hugging Face.
