AI in education: ChatGPT is just the beginning

Large language models like ChatGPT can generate coherent, believable text. How does this impact education and teaching?

Strictly speaking, ChatGPT, an AI system that generates text in response to input prompts, is not even the beginning. It is merely an intermediate stage in a development in natural language processing (NLP) that has been underway for about ten years.

Nor can ChatGPT do anything fundamentally new that earlier tools could not. It differs only in the quality of its output, its focus on interactivity (users enter questions and the AI generates answers) and, above all, its captivating simplicity. A URL, a registration, and a single empty text field are all it takes to open up practically unlimited text generation. Depending on the question, the results are also mostly meaningful and well-founded, since the model was trained on a corpus of about 1 trillion words (drawn from 6 million Wikipedia articles and millions of books and websites).

It should be noted that the training dataset consists predominantly of English-language texts, so when users communicate in other languages, translation effectively comes into play as well. This sometimes leads to serious misunderstandings.

Despite all the teething problems, the results are impressive, disturbing, and impressively disturbing. The entire education sector, from elementary schools to top universities, is in turmoil: some out of dystopian panic, others out of utopian naiveté. Both positions are highly understandable.

For readers who are not yet familiar with ChatGPT, we asked ChatGPT to introduce itself briefly:

ChatGPT, can you briefly introduce yourself?

I am an assistant, a large language model trained by OpenAI. I am able to respond to questions and requests in different languages and provide information. I am also able to have small conversations and provide human-like responses. However, I am not a real person and have no personal experience or opinions. My level of knowledge is limited to the year 2021 and I have no ability to follow current events or obtain new information. I am merely a computer program designed to provide information and try to respond to questions and inquiries as best I can.

What is the disruptive potential of ChatGPT in terms of academic writing processes?

ChatGPT is a chatbot trained with machine learning to have human-like conversations. It is designed to help students and academic professionals support and improve their writing processes.

One of the disruptive potentials of ChatGPT in terms of academic writing processes is that it can help students structure and formulate their ideas and thoughts. ChatGPT can also act as a "smart" writing assistant by providing users with suggestions for possible changes or improvements to their writing style or grammar.

In addition, ChatGPT provides the ability for users to share their writing processes with others and collaborate on projects. This can be especially beneficial for students participating in collaborative projects or shared writing processes.

Overall, ChatGPT offers the possibility to simplify and improve academic writing processes by helping users structure and formulate their ideas, suggesting possible changes, and offering the possibility to collaborate on projects.

On the other hand, what about the dangers?

There are some potential dangers related to the use of ChatGPT or other chatbots designed for academic writing processes. One of the main concerns is that chatbots are not able to replicate the deep analytical skills and critical thinking abilities of humans. Chatbots can make suggestions for possible improvements or changes to writing style or grammar, but they cannot perform deep analysis or critical reflection that is often required for academic papers.

Another concern is that chatbots may not be able to accommodate the cultural and linguistic diversity of people. It is important that students learn to express their thoughts and ideas in their own language and style, and chatbots may not be able to support this ability.

It is also important to note that chatbots may not be able to address the complex requirements and standards of academic papers. While they can help simplify and improve writing processes, they cannot be a substitute for thorough research, in-depth analysis, and critical reflection.

Finally, there are concerns about the privacy and security aspects of chatbots. It is important that students are informed about how their data is used by chatbots and that they understand that chatbots may not be able to provide the same confidentiality and security as human writing consultants.

Overall, there are some potential dangers associated with using chatbots like ChatGPT in academic writing processes. It is important that students consider these concerns and understand that chatbots cannot be a substitute for in-depth research, deep analysis, and critical reflection.

Thank you. This helps me a lot.

You're welcome! I hope that my answers have helped you better understand the disruptive potential of ChatGPT in relation to academic writing processes and also consider the potential dangers associated with its use. If you have further questions or need more information, please do not hesitate to contact me. I am here to help you!

This dialog was created within a few seconds and without any post-processing. With a little more effort, much better results could have been produced, but even the output of this naive prompting would arguably be enough to pass the Turing test.

The discussion about how schools and universities should respond, fueled since the free release of ChatGPT on November 30, 2022, mostly falls short, because education policy is usually, and for good reason, slow to move.

As a result, tools like ChatGPT are usually several steps ahead of higher education policy discussions. For example, many university committees are still debating the systematic introduction of plagiarism detection software. Meanwhile, plagiarism is becoming less and less relevant, as AI tools can produce complete papers that are neither factually nor legally plagiarized.

One exception is structural plagiarism, in which existing text is deliberately distorted using AI-powered paraphrasing tools, also called rewriting tools, so that plagiarism detection software cannot identify the source.

In our view, the questions that schools and universities should therefore be asking and debating are not how to react to this or that tool or development, but rather: What fundamental qualitative changes in academic work can we expect from the future collaboration between human and artificial intelligence that will shape our (academic) everyday life? And how do we want to deal with these changes in education and training?

Answering these questions requires historical awareness with an eye to the future.

The future of generative AI language models

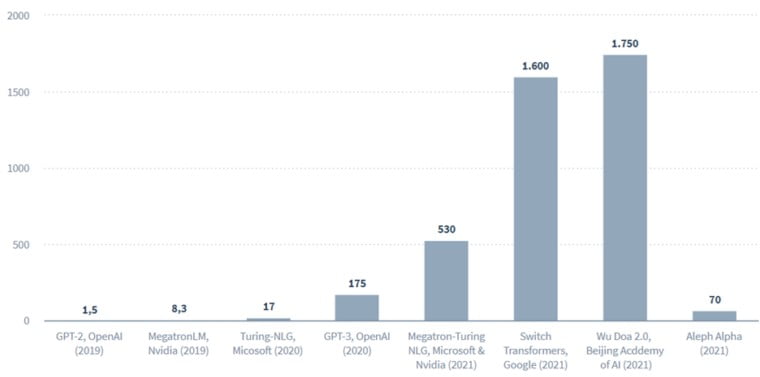

The chart above shows how rapidly large language models (LLMs) have been developing. It focuses on parameter count, which correlates positively with an LLM's performance, for the period from 2019 to 2021.

The development of the most important AI language models to date thus shows exponential growth in model size and, with it, in performance. The graph also reflects the pioneering role of American and Chinese developers; for comparison, see the German start-up Aleph Alpha on the far right of the graph.

In a guest article in Handelsblatt in June 2022, Jörg Bienert, Chairman of the Board of the German AI Association, explicitly emphasized the high strategic relevance of generative AI language models for the German and European economy, as well as their future potential.

The German AI Association launched its LEAM initiative (short for "Large European AI Models") as early as 2021 to promote the development of large AI models in Europe and to avoid dependence on solutions outside the EU. Supported by renowned research institutions, companies, associations and startups, LEAM is intended to be a European AI lighthouse project.

Looking into the future is difficult, because there is no end in sight to this development. Currently, the most discussed shortcoming of AI language models is that some generated texts contain invented content ("hallucinating" systems), but this problem is expected to be addressed in the near future as well.

DeepMind has presented the RETRO language model, which queries a large text database while generating text, for example to back up facts. OpenAI is working on the research prototype WebGPT, which extends GPT-3 or GPT-3.5 with an additional Internet search so that it can generate more factual texts.

The AI text service provider Writesonic is positioning its Chatsonic as an alternative to OpenAI's ChatGPT and already promises a solution to the problem of invented content: "Write factual content with real-time topics." Other providers are following with similar announcements.

OpenAI's next model, GPT-4, is expected to be released in a few months and will likely represent another quantum leap, comparable to the jump from GPT-2 to GPT-3 in mid-2020.

Further progress is also on the horizon in human-machine interaction during the actual writing process. As recently as August 2022, Meta announced the PEER writing bot, a collaborative language model designed to provide support throughout the entire writing process.

Like a "jack-of-all-trades," PEER is supposed to be able to write quick drafts, make suggestions, propose edits, and explain its actions. And as mentioned earlier, this is undoubtedly just the beginning.

With its proposed AI Act, the European Commission aims to regulate the impact of AI comprehensively. Depending on a system's risk classification under the AI Act, companies that provide or use AI systems will face certain obligations, such as AI strategies and documented, communicated AI guidelines.

Where are the boundaries with the field of education? Can they be identified at all, or are they becoming blurred?

The use of generative AI language models in education

The core question being debated in education is: Should AI-supported writing tools be used proactively in the classroom as generators of draft texts, so that students ultimately produce higher-quality work by having initial drafts created automatically and then optimizing them "manually"?

From our standpoint, the answer is: Yes. Or rather: Yes, but.

It is what it is: AI language models and systems are a fact of life in knowledge work. Avoiding or ignoring them is not an appropriate strategy. But if it is no longer a matter of "whether" to use AI tools, then the question must be: How should we use these tools in the future? What knowledge and what competencies do university students, teachers, and pupils need?

These include, for example, academic working skills and knowledge of text types, but above all the analytical and critical thinking needed to evaluate automatically generated output and to control how it is used.

A far more difficult question at present is how to assess student work that results from human-machine co-production. If written assignments and final theses are to remain relevant forms of examination, our proposed solution draws an analogy to the assessment of product quality: in the future, we should assess not only the final result but also the quality of the design that led to this finished product.

Two dimensions are relevant here: the methodological design (in the sense of a research design) and the associated choice of tools, which can include both classic software (e.g., word processing, reference management, statistics programs) and modern AI-supported tools. Realistically, however, even this approach can only be an interim solution.

There are two main reasons for this (from a January 2023 perspective). First, AI-powered research assistants such as elicit.org already offer a method designer that, depending on the research question, puts a wealth of scientific methods at our fingertips.

This feature is still in beta but can be considered groundbreaking: it could lead to research designs at the push of a button, with human contribution increasingly taking a back seat.

Second, the AI tools in use are expected to become exponentially more powerful. As a result, the quality of first drafts will keep improving and human post-editing will become increasingly superfluous.

Our conclusion

We need fundamentally new teaching and learning settings and, above all, we need to rethink the teaching, learning, and examination culture at German schools and universities.

Now we, as university representatives and teachers, are particularly called upon to bring our human creativity and critical judgment to bear; we can and must prove ourselves. We are only at the beginning and, unfortunately or fortunately, nowhere near the end.

- Get the latest AI news from The Decoder.