Prompting GPT-5 for agentic workflows and advanced coding applications

OpenAI has released an in-depth prompting guide for GPT-5 that covers agentic workflows, new API parameters, coding workflows, and specific prompting patterns, along with lessons learned from Cursor's integration of the model into its code editor.

According to the guide, GPT-5 was trained specifically for tool use, instruction following, and long-context understanding, making it a foundation for agentic applications. For these workflows, OpenAI recommends the Responses API, which preserves the model's reasoning between tool calls and improves both efficiency and output quality. The guide notes that simply switching from Chat Completions to the Responses API and passing earlier reasoning via "previous_response_id" raised the Tau-Bench Retail score from 73.9% to 78.2%. Keeping the reasoning context saves tokens, maintains plans across tool calls, and improves both latency and performance.
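As a rough illustration of that chaining, the sketch below only builds the keyword arguments for two consecutive calls and makes no network request. The helper `build_turn`, the model name "gpt-5", and the placeholder ID "resp_123" are assumptions for this example and are not taken from the guide.

```python
from typing import Any, Optional


def build_turn(user_input: Any,
               previous_response_id: Optional[str] = None,
               model: str = "gpt-5") -> dict:
    """Build keyword arguments for one Responses API call (hypothetical helper)."""
    kwargs = {"model": model, "input": user_input}
    # Only reference an earlier response when there is one to continue from.
    if previous_response_id is not None:
        kwargs["previous_response_id"] = previous_response_id
    return kwargs


# First turn: nothing to chain to yet.
first = build_turn("Find the failing test and propose a fix.")

# Follow-up turn: "resp_123" stands in for the ID returned by the first call.
follow_up = build_turn("Apply the fix.", previous_response_id="resp_123")
```

With the official Python SDK, such arguments would typically be passed as `client.responses.create(**kwargs)`, with each response's ID fed into the next turn.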

The model's "agentic eagerness" can be tuned through prompts and the "reasoning_effort" parameter. Lowering reasoning effort dials back initiative. Setting clear criteria for context searches and capping the number of tool calls (for example, at two) provides more control, including an explicit option to proceed even when some uncertainty remains.
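A tool-call cap like this can be stated in the prompt and also enforced by the surrounding agent loop. The following is a minimal sketch under that assumption. The class `ToolBudget`, the function `low_eagerness_prompt`, and the prompt wording are illustrative and not quoted from the guide.

```python
class ToolBudget:
    """Counts tool calls and refuses new ones once the cap is reached."""

    def __init__(self, max_calls: int = 2):
        self.max_calls = max_calls
        self.used = 0

    def try_spend(self) -> bool:
        """Return True and count the call if budget remains, else False."""
        if self.used >= self.max_calls:
            return False
        self.used += 1
        return True


def low_eagerness_prompt(max_calls: int) -> str:
    """Illustrative prompt text that mirrors the budget in plain language."""
    return (
        f"Use at most {max_calls} tool calls to gather context. "
        "Stop searching once you can name the exact change to make. "
        "If some uncertainty remains after that, proceed with your best "
        "judgment and state your assumptions."
    )


budget = ToolBudget(max_calls=2)
allowed = [budget.try_spend() for _ in range(3)]  # third call is refused
prompt = low_eagerness_prompt(budget.max_calls)
```

Keeping the limit in both places means the model knows the rule and the harness can still stop a run that ignores it.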

To encourage more initiative, the guide suggests raising reasoning effort and adding persistence instructions that cut down on unnecessary questions. It also recommends defining clear stop conditions, distinguishing between safe and risky actions, and establishing thresholds for when a task should be handed back to the user. For example, in purchase or payment flows, the threshold for handing back should be lower than in a search, and in programming, deleting files should require more caution than simple text searches.
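One way to picture the safe-versus-risky distinction is a small policy table that the agent harness consults before executing an action. This is a sketch only. The action names, risk levels, and the `needs_confirmation` helper are hypothetical and do not come from the guide.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical mapping of agent actions to risk levels.
ACTION_RISK = {
    "text_search": Risk.LOW,
    "edit_file": Risk.MEDIUM,
    "delete_file": Risk.HIGH,
    "submit_payment": Risk.HIGH,
}


def needs_confirmation(action: str, threshold: Risk = Risk.HIGH) -> bool:
    """Return True if the action should be handed back to the user first."""
    # Unknown actions are treated as high risk to stay on the safe side.
    risk = ACTION_RISK.get(action, Risk.HIGH)
    return risk >= threshold


search_ok = needs_confirmation("text_search")   # False: safe to run directly
delete_ok = needs_confirmation("delete_file")   # True: ask the user first
# A stricter flow (for example, payments) can lower the threshold.
strict_edit = needs_confirmation("edit_file", threshold=Risk.MEDIUM)  # True
```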

For longer rollouts, the guide describes how GPT-5 can announce upcoming tool calls: it is trained to sketch out a plan at the start and then provide concise progress updates. The frequency, style, and content of these updates can all be controlled via the prompt, from goal paraphrasing to structured plans, sequential status messages, and a final report. For complex tasks, OpenAI recommends splitting the work into smaller, separable subtasks across multiple agent rounds.

GPT-5 as a coding assistant

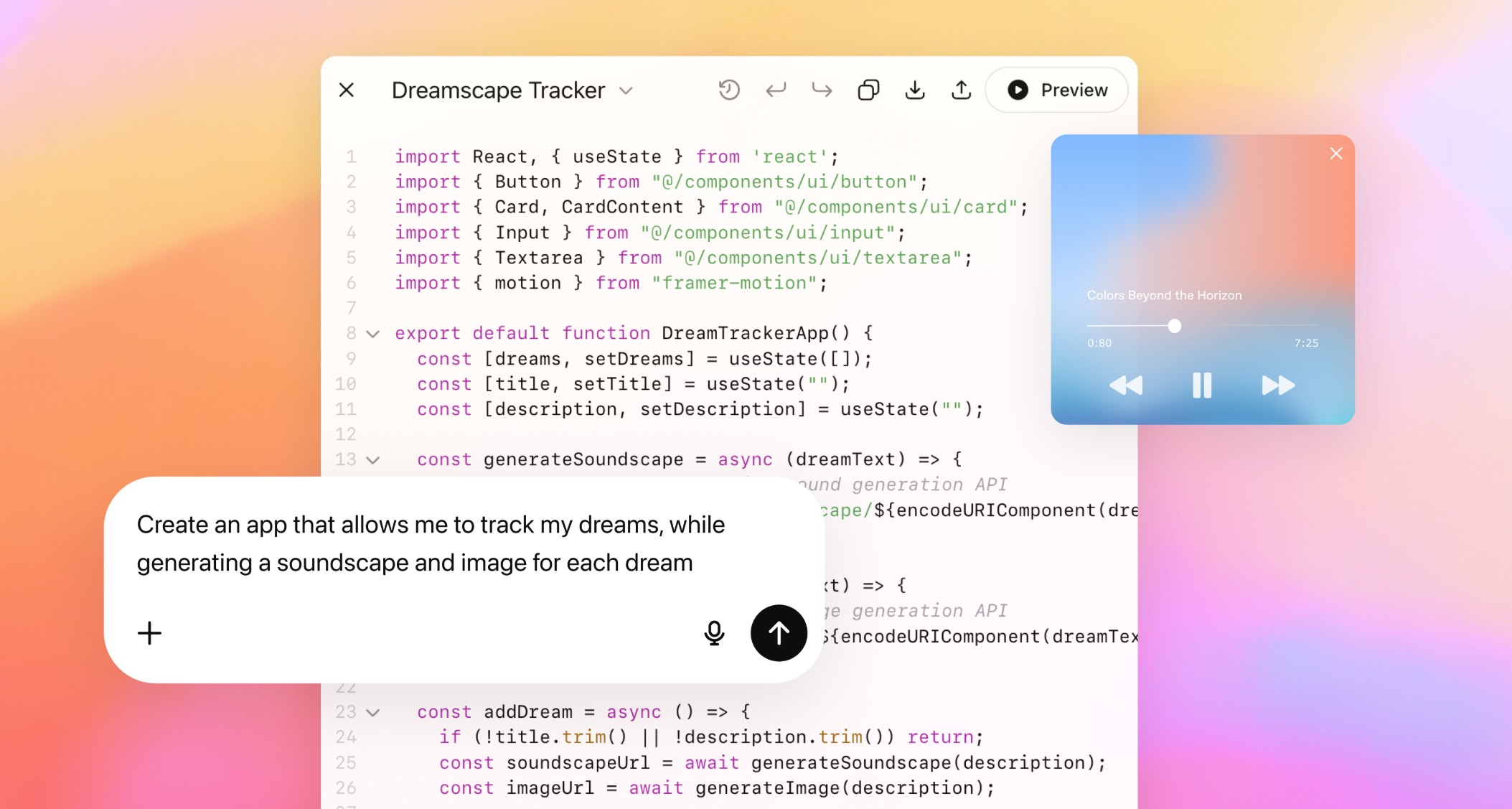

OpenAI positions GPT-5 as a strong model for software development. It is designed to handle large codebases, fix bugs, process major diffs, carry out multi-file refactoring, implement major new features, and even create entire applications from scratch.

For building new web apps, OpenAI suggests using Next.js (TypeScript), React, HTML, Tailwind CSS, shadcn/ui, Radix Themes, icon sets like Material Symbols, Heroicons, and Lucide, the Motion animation library, and fonts such as Inter, Geist, Mona Sans, IBM Plex Sans, or Manrope.

For greenfield projects, the guide recommends a prompt pattern where the model creates an internal set of quality criteria (five to seven categories) and iterates until all criteria are fully met.

For incremental changes and refactoring, GPT-5's modifications should blend seamlessly with the existing structure. The guide stresses explicitly mirroring the technical setup of the codebase in the prompt, including its principles, directory structure, and UI/UX rules. OpenAI provides sample guiding principles (clarity/reuse, consistency, simplicity, demo focus, visual quality), stack standards (including directory layout), and UI/UX rules (typography hierarchy, colors, spacing, state indicators, accessibility).

Early testing with the Cursor code editor

The team behind the Cursor code editor was among the first to test GPT-5 and documented the integration in a blog post. Cursor aimed to balance autonomy with concise status messages on longer tasks. Initially, GPT-5 produced overly detailed status updates, while the code in its tool calls was too terse (sometimes using single-letter variable names). Cursor addressed this by setting the global "verbosity" API parameter to low and prompting the model to be more detailed in code tools ("Write code for clarity first ... Use high verbosity for writing code and code tools."). The result: compact status and summary messages, but much more readable code changes.

The team also noticed that GPT-5 sometimes asked unnecessary follow-up questions. According to the guide, giving more precise context about undo/reject functions and user preferences helped reduce interruptions. The model started applying changes proactively and submitting them for review, rather than asking for approval beforehand.

Another insight: prompts that worked well with earlier models triggered too many tool calls in GPT-5. After reducing these "extra-thoroughness" instructions, GPT-5 became better at deciding when to rely on internal knowledge versus when to use external tools. Structured, XML-like specifications improved instruction-following, and user-configurable Cursor rules added even more control.
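To illustrate what an XML-like specification might look like, the sketch below renders named rule sets into tagged blocks. The tag names, the rules, and the `render_sections` helper are hypothetical examples and are not taken from Cursor's prompt or from the guide.

```python
def render_sections(sections: dict[str, list[str]]) -> str:
    """Render each rule set as an XML-like block with one bullet per rule."""
    blocks = []
    for tag, rules in sections.items():
        body = "\n".join(f"- {rule}" for rule in rules)
        blocks.append(f"<{tag}>\n{body}\n</{tag}>")
    return "\n\n".join(blocks)


# Hypothetical sections and rules, for illustration only.
spec = render_sections({
    "context_gathering": [
        "Prefer internal knowledge for well-known APIs.",
        "Search the codebase only when the target file is unclear.",
    ],
    "code_editing_rules": [
        "Match the existing directory structure and naming.",
    ],
})
print(spec)
```

Grouping rules under named tags keeps related instructions together and makes it easier to remove a section, such as an older thoroughness rule, without touching the rest of the prompt.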

Fine-tuning instructions, verbosity, and minimal reasoning mode

The new "verbosity" API parameter controls the length of the final answer, independent of "reasoning_effort." The guide explains that you can set a global value and still override it in the prompt as needed, for example by keeping status messages short but code outputs detailed, as in the Cursor setup.
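The combination of a low global verbosity with a prompt-level override for code could be assembled roughly as follows. This sketch only builds the request arguments and does not call the API. It assumes the Responses API shape, in which verbosity sits under `text` and reasoning effort under `reasoning`. The helper `build_request` and the listed allowed values are assumptions for this example, and the override text reuses the two sentences quoted from Cursor's prompt.

```python
VERBOSITY_LEVELS = {"low", "medium", "high"}
EFFORT_LEVELS = {"minimal", "low", "medium", "high"}

# Prompt-level override: ask for detailed code despite low global verbosity.
CODE_STYLE_OVERRIDE = (
    "Write code for clarity first. "
    "Use high verbosity for writing code and code tools."
)


def build_request(user_input: str, verbosity: str = "low",
                  effort: str = "medium") -> dict:
    """Build request arguments with global verbosity and reasoning effort."""
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unknown reasoning effort: {effort!r}")
    return {
        "model": "gpt-5",
        "instructions": CODE_STYLE_OVERRIDE,
        "input": user_input,
        "text": {"verbosity": verbosity},   # length of the final answer
        "reasoning": {"effort": effort},    # how much the model deliberates
    }


request = build_request("Rename the helper and update its callers.")
```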

GPT-5 also supports a new "minimal reasoning" mode, designed for maximum speed while still maintaining the benefits of the reasoning paradigm. OpenAI recommends prompts that start with a brief rationale, use clear status updates before tool calls, give explicit and persistent tool instructions, and encourage the agent to fully complete tasks before handing them back. For those migrating from GPT-4.1, OpenAI points to patterns from the GPT-4.1 guide.

When it comes to following instructions, OpenAI urges caution: GPT-5 is extremely literal, and vague or contradictory prompts can disrupt its reasoning. To help users avoid these pitfalls, OpenAI links to its Prompt Optimizer, which can flag inconsistencies and unclear instructions.
