Developers rely on AI tools more than ever, yet confidence in AI outputs remains low

A new Google Cloud survey shows that AI-assisted software development has gone mainstream. Ninety percent of tech professionals now use AI tools at work, up 14 points from last year.

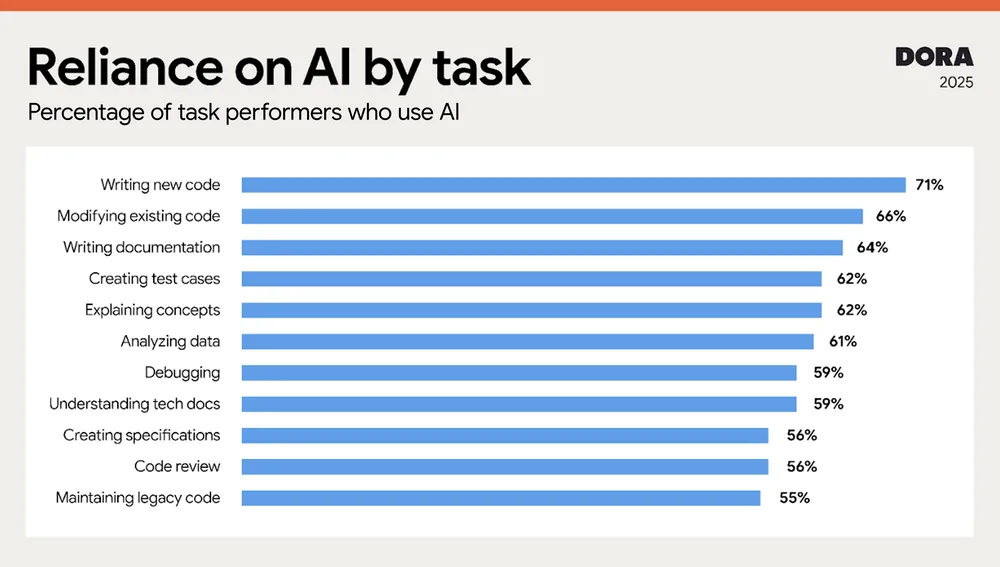

The study covers everyone from developers to product managers. Most respondents report that AI is now a regular part of their workflow, with a median of two hours a day spent using these tools. Reliance is high: 65 percent say they depend on AI at least moderately. That figure breaks down into 37 percent who describe their dependency as "a moderate amount," 20 percent as "a lot," and 8 percent as "a great deal."

Productivity is up, but trust lags behind and skill development takes a hit

More than 80 percent say AI makes them more productive, and 59 percent report better code quality. But trust in AI remains limited. Only 24 percent say they have "a lot" or "a great deal" of trust in AI, while 30 percent trust AI outputs "a little" or "not at all." The report calls this the "trust paradox": people keep turning to AI, even when they're not sure that they can count on it.

This year, the survey found that teams using AI are shipping more software and apps, a reversal from last year, when no link between AI and productivity was clear. But reliability remains a sticking point: keeping software stable is still a major challenge.

The report, published by Google Cloud's DORA research program, also includes a critical take from Matt Beane, a professor at UC Santa Barbara. Beane argues that while AI gives teams a short-term boost, it can hurt long-term skill development.

Junior developers used to learn from seniors through pair programming and hands-on problem solving. Beane says AI is now cutting off these learning channels. As automation takes over, juniors get sidelined, and practical experience dries up. He found similar patterns in 31 professions: AI interrupts traditional learning paths and makes it harder for new people to gain crucial skills.

Beane suggests that balancing productivity and skill growth is key. Teams that only chase speed may lose out over time. One fix: track how developers use AI, connect that data to version control, and measure real learning outcomes.
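The first two steps of that suggestion, tracking AI use and connecting it to version control, might look roughly like the sketch below. Everything in it is an assumption made for illustration: the `AiEvent` and `Commit` records, their fields, and the `ai_share_by_author` helper are hypothetical and do not come from the report. It also says nothing about learning outcomes, which would need a separate measure.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AiEvent:
    """Hypothetical log record: AI-suggested lines accepted into one commit."""
    commit_sha: str
    accepted_lines: int


@dataclass
class Commit:
    """Hypothetical version-control record."""
    sha: str
    author: str
    lines_added: int


def ai_share_by_author(events, commits):
    """Return, per author, the fraction of added lines that came from AI suggestions."""
    # Sum accepted AI lines per commit.
    ai_lines = defaultdict(int)
    for event in events:
        ai_lines[event.commit_sha] += event.accepted_lines

    # Join with commits and aggregate per author as [ai_lines, all_lines].
    totals = defaultdict(lambda: [0, 0])
    for commit in commits:
        # Cap at lines_added so a noisy log cannot push the share above 100 percent.
        totals[commit.author][0] += min(ai_lines.get(commit.sha, 0), commit.lines_added)
        totals[commit.author][1] += commit.lines_added

    return {author: ai / total for author, (ai, total) in totals.items() if total}


events = [AiEvent("a1", 30), AiEvent("a1", 10)]
commits = [Commit("a1", "ana", 50), Commit("b2", "ana", 50), Commit("c3", "ben", 20)]
print(ai_share_by_author(events, commits))
```

A team could watch this share over time alongside whatever learning measure it chooses, rather than treating the number as a verdict on its own.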

AI exposes team strengths and weaknesses

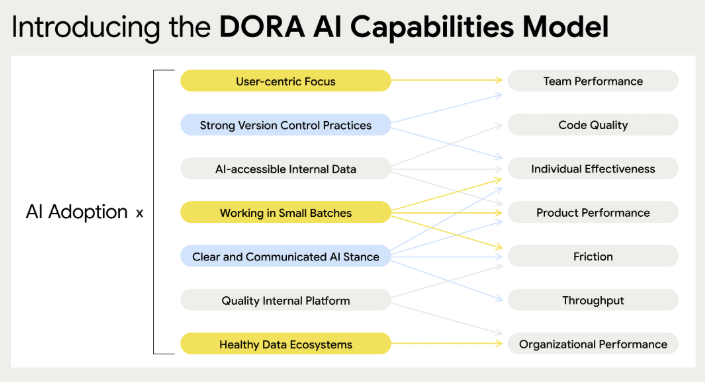

The Google Cloud study finds that AI acts as both a "mirror and multiplier" inside organizations. In well-organized teams, it boosts efficiency. In teams with structural problems, it tends to make those issues more visible.

The report breaks teams down into seven archetypes, from "harmonious high-achievers" to groups stuck in a "legacy bottleneck." Google Cloud also introduced the DORA AI Capabilities Model, which outlines seven technical and cultural factors that shape effective AI adoption.

Other studies highlight the same disconnect between how widely AI is used, how much developers actually trust it, and its real market impact. The Stack Overflow Developer Survey 2025 found that 84 percent of developers use or plan to use AI tools, but only 33 percent trust the code these tools generate. The top complaint: AI code is often "almost right, but not quite." A randomized METR study found that experienced open-source developers with AI assistance took 19 percent longer on average, even though they felt like they were working faster.

At the same time, there are signs that AI for coding is making significant progress. At the ICPC World Finals 2025, an OpenAI system solved every task, outperforming both top human teams and Google's own model. Neither system is available to the public yet.

New research from 2025 highlights fresh risks with everyday AI coding. A U.S. study on DeepSeek found the model often produces unsafe code when handling politically sensitive topics. And about one in five AI-generated code snippets references libraries that do not exist. Attackers can register those made-up package names and fill them with malicious code, a technique called slopsquatting, which creates openings for supply chain attacks.
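One way to catch a fake library before it gets installed is to compare the imports in a generated snippet against a list of packages the team has already vetted. The sketch below is a minimal illustration under stated assumptions: the `unvetted_imports` helper and the package name `fastjsonx` are made up for this example, and the allowlist is something a team would have to maintain itself.

```python
import ast


def unvetted_imports(source: str, vetted: set[str]) -> set[str]:
    """Return top-level modules imported by `source` that are not in `vetted`."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # "import a.b" -> top-level module "a"
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from a.b import c" -> "a"; relative imports are skipped.
            found.add(node.module.split(".")[0])
    return found - vetted


# "fastjsonx" is an invented name standing in for a hallucinated package.
snippet = "import requests\nfrom fastjsonx.parser import loads\n"
print(sorted(unvetted_imports(snippet, {"requests", "numpy"})))  # ['fastjsonx']
```

Import names do not always match the names packages are published under, so a flagged name is a prompt for manual review rather than proof of a problem.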
