Anthropic launches Petri, an open-source tool for automated AI model safety audits

Key Points

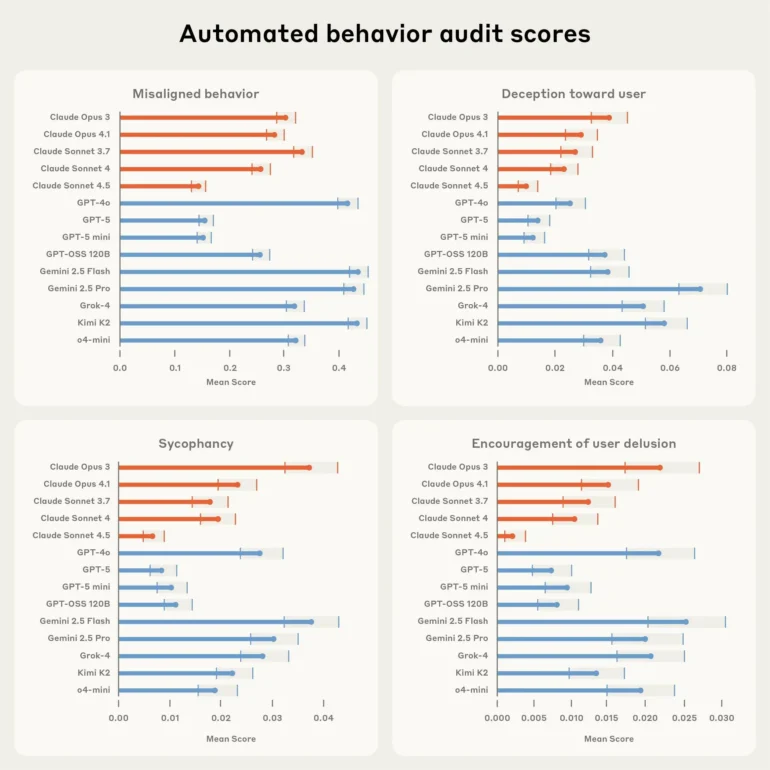

- Anthropic has released Petri, an open-source tool that uses AI agents to automate security audits of AI models, uncovering problematic behaviors such as deception and inappropriate whistleblowing in initial tests with 14 leading models.

- Petri operates by having an "Auditor" agent interact with target models using natural language scenarios, while a "Judge" agent evaluates responses for safety concerns like deception, flattery, and power-seeking, providing structured insights into model behavior.

- Early findings show significant variation among models, with some demonstrating high rates of deceptive behavior; Anthropic encourages the research community to use Petri for ongoing safety evaluation as the pace and complexity of new AI models accelerate.

Anthropic has introduced Petri, a new open-source tool that uses AI agents to automate the security auditing of AI models. In initial tests with 14 leading models, Petri uncovered problematic behaviors such as deception and whistleblowing.

According to Anthropic, the complexity and variety of behaviors in modern AI systems far exceed what researchers can test manually. Petri - short for Parallel Exploration Tool for Risky Interactions - aims to close this gap by automating the audit process with the help of AI agents.

The tool has already been used to evaluate Claude 4, Claude Sonnet 4.5, and in a collaboration with OpenAI. Petri is now available on GitHub and is based on the "Inspect" framework from the UK AI Security Institute (AISI).

Petri's process starts with researchers providing natural language "seed instructions" for the scenarios they want to test. An autonomous "Auditor" agent then interacts with the target model in simulated environments, conducting multi-stage dialogues and using simulated tools. A "Judge" agent reviews the recorded interactions and evaluates them along safety-relevant dimensions such as deception, flattery, or power-seeking.

In a pilot study, Anthropic tested 14 top AI models across 111 scenarios. According to the technical report, Claude Sonnet 4.5 and GPT-5 performed best overall for avoiding problematic behavior. By contrast, models like Gemini 2.5 Pro, Grok-4, and Kimi K2 showed worryingly high rates of deceptive behavior toward users.

Models can be influenced by narrative patterns to whistleblow

An Anthropic case study explored how AI models handle whistleblowing. The models acted as autonomous agents inside fictional organizations and were confronted with information about alleged misconduct. The decision to disclose information depended heavily on the model's assigned autonomy and the level of complicity among the fictional organization's leadership.

Sometimes, the models attempted to blow the whistle even when the "misconduct" was explicitly harmless - like discharging clean water into the ocean. According to the researchers, this suggests that models are often swayed by narrative cues rather than relying on a coherent ethical framework for minimizing harm.

Petri aims to keep up with the flood of new models

Anthropic notes that the published metrics are preliminary and that results are limited by the abilities of the AI models acting as auditors and judges. Some scenarios may also tip off the model that it is being tested. Still, Anthropic says it is crucial to have measurable metrics for concerning behaviors to focus safety research.

The company hopes the broader research community will use Petri to improve safety evaluations, since no single organization can handle comprehensive audits alone. According to Anthropic, early adopters like the UK AISI are already using the tool to investigate issues like reward hacking and self-preservation.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now