Google integrates Earth AI and Gemini language models for what it calls geospatial reasoning

Google is updating its Earth AI platform, linking it with Gemini language models and making new features available to a broader set of users.

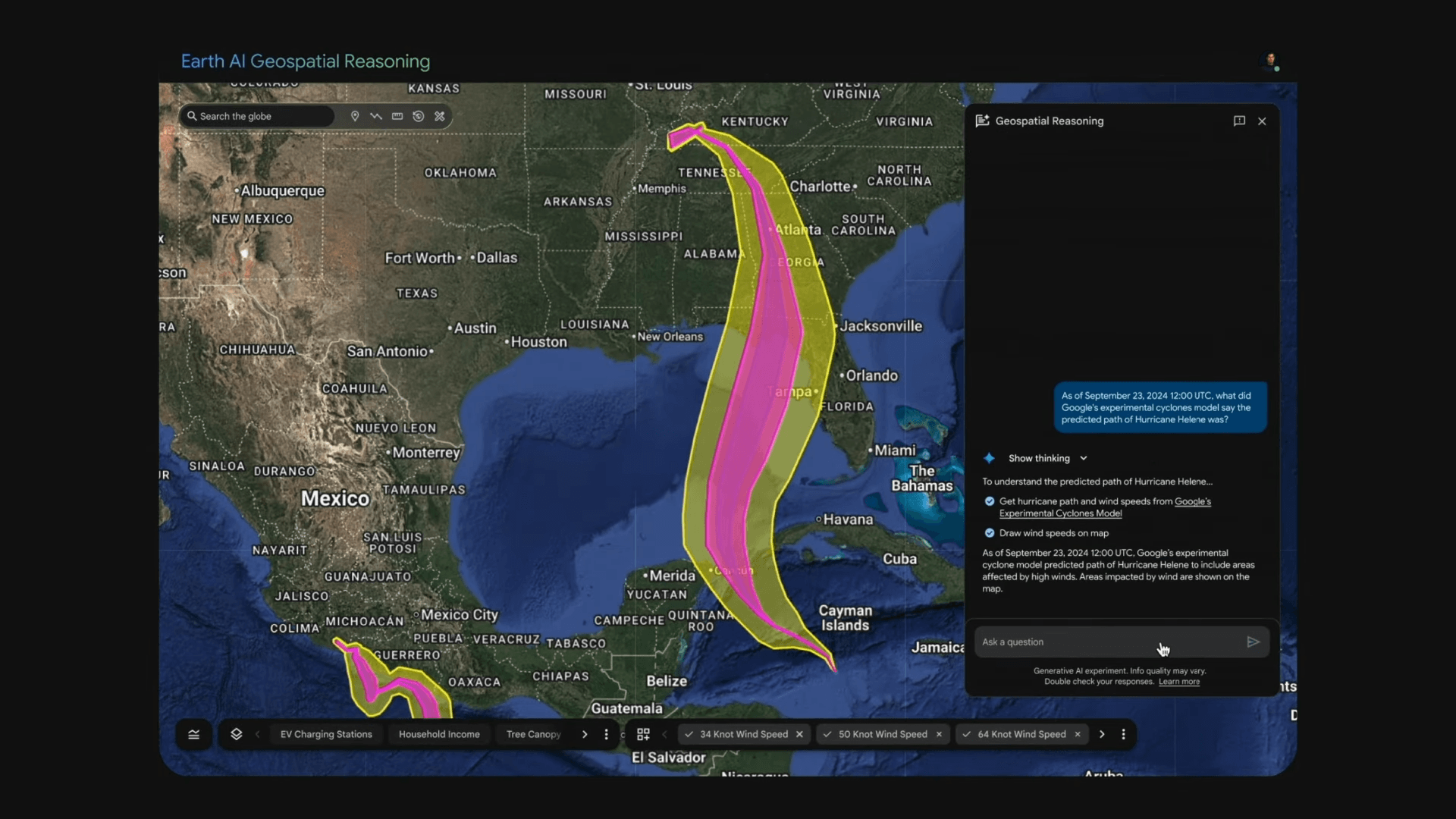

According to the company, the goal is to speed up detection of floods, droughts, and disease outbreaks, and to support more targeted responses. The central update is what Google calls "Geospatial Reasoning," a system that connects different AI models, for example those covering weather forecasts, population density, and satellite imagery.

Google says this approach is designed to answer complex questions, such as identifying areas most at risk from a coming storm or determining which infrastructure might be affected. The company cites early flood detection and algae bloom analysis in drinking water systems as initial use cases.

For example, Google points to the nonprofit GiveDirectly, which uses geospatial reasoning with flood and population data to deliver emergency aid to hard-hit communities. The underlying technology relies on Gemini and other foundation models.
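To make the idea of combining flood and population data concrete, here is a minimal Python sketch. It is not Google's or GiveDirectly's method: the `Region` type, the `rank_by_expected_exposure` helper, and all numbers are hypothetical, and the scoring rule (flood probability times population) is an assumed stand-in for whatever the real system does.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    flood_probability: float  # hypothetical forecast value in [0, 1]
    population: int           # hypothetical resident count


def rank_by_expected_exposure(regions):
    """Sort regions by flood probability times population, highest first."""
    return sorted(
        regions,
        key=lambda r: r.flood_probability * r.population,
        reverse=True,
    )


# Made-up illustrative values, not real data.
regions = [
    Region("A", 0.10, 50_000),
    Region("B", 0.60, 12_000),
    Region("C", 0.35, 30_000),
]

ranked = rank_by_expected_exposure(regions)
ranked_names = [r.name for r in ranked]
print(ranked_names)
```

In this toy example the region with the highest flood probability does not rank first, because a moderately likely flood in a more populous region affects more people in expectation.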

Earth AI is structured around three core model families: Imagery, Population, and Environment, each trained on specialized geospatial datasets. According to Google, the Imagery models process satellite and aerial imagery, support natural language queries, and enable open-vocabulary object detection.

The Population Dynamics Foundations model uses data from sources like Maps and Search Trends to represent human behavior, creating region-level embeddings for applications in health, mobility, and forecasting. Google says this system currently covers 17 countries and is updated monthly.
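One common way to use region-level embeddings is to compare regions by vector similarity. The sketch below assumes each region is represented by a short numeric vector and uses cosine similarity to find the closest match. The vectors, region names, and helper functions are invented for illustration and do not reflect the actual format or API of Google's model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(target, embeddings):
    """Return the name of the region whose embedding is closest to target's."""
    scores = {
        name: cosine_similarity(embeddings[target], vector)
        for name, vector in embeddings.items()
        if name != target
    }
    return max(scores, key=scores.get)


# Hypothetical three-dimensional embeddings; real ones would be much larger.
embeddings = {
    "region_1": [1.0, 0.0, 0.0],
    "region_2": [0.9, 0.1, 0.0],
    "region_3": [0.0, 1.0, 0.0],
}

nearest = most_similar("region_1", embeddings)
print(nearest)
```

A downstream health or mobility model could use the same vectors as input features instead of comparing them directly.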

Environment models focus on weather, climate, air quality, and disaster forecasting, using machine learning to predict events such as floods and hurricanes. A technical overview is available in Google's Earth AI paper.

Access for social organizations and selected companies

At present, the new features are available in the US to Google Earth Professional users and selected companies through the "Trusted Tester" cloud program. Social and humanitarian organizations can apply for access.

Google says Earth AI models for image data, population analysis, and environmental monitoring can be combined with external datasets using the Google Cloud APIs. Companies can address specific needs, such as infrastructure management or environmental risk detection, by using tools like Imagery Insights in BigQuery.

Earth AI was officially announced in July 2025, when Google brought its geospatial AI models and datasets together under one framework. This rollout included the "AlphaEarth Foundations" model, which summarizes Earth observation data and functions much like a virtual satellite.

Looking ahead, Google says it plans to expand Earth AI with more data sources and application areas. The company states that future work will include broader sensor support, longer-term trend analysis, finer spatial and temporal detail, and, ultimately, a unified "meta-Earth model" that combines multiple data types into a single system.

Google has also faced criticism for the performance and transparency of its AI-powered disaster alert systems. After the devastating earthquakes in Turkey in February 2023, Google's Android Earthquake Alerts System (AEA) significantly underestimated the magnitude of the first quake and sent only a few hundred urgent warnings, when millions were needed.

Google only acknowledged these failures two years after the event, despite earlier public statements that the system had "worked well." The delay in disclosure and shifting explanations have raised broader questions about transparency and accountability in AI-based early warning systems.
