Pangram 3.0 AI text detector claims up to 99.98% accuracy, even for subtly AI-assisted content

Key Points

- Pangram's new 3.0 version adds a feature that not only distinguishes between human and AI-generated text, but also indicates how much AI assistance was involved.

- The company claims the updated model can spot AI-generated text with 99.98 percent accuracy and rarely mistakes human writing for AI: by its own figures, only about one in 15,000 human-written essays is misclassified as fully AI-generated.

- While a University of Chicago study has confirmed Pangram's effectiveness and resistance to manipulation, some critics argue that trying to detect AI writing is pointless and believe the education system should adapt instead.

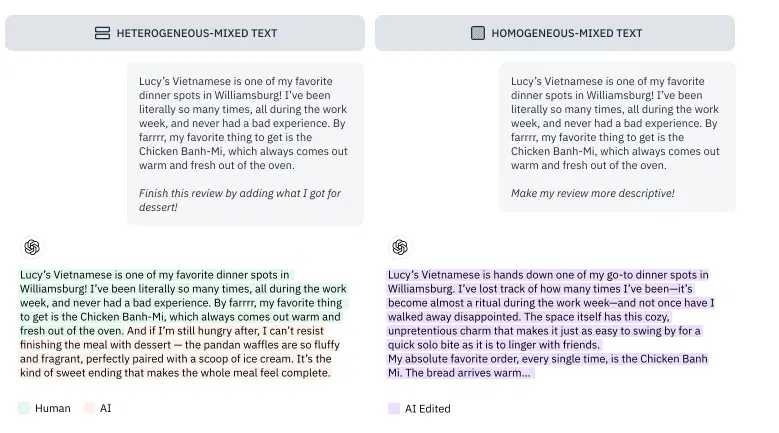

Pangram has released version 3.0 of its AI text detector. The most important new feature: the tool now classifies text into four categories instead of making a simple binary distinction between human and machine.

The new categories are: fully human-written, slightly AI-assisted, moderately AI-assisted, and fully AI-generated. For longer documents, Pangram 3.0 analyzes individual segments separately, a feature designed to spot cases where a human writes the first half of a text and an AI finishes the rest.

New categories reflect how people actually use AI writing tools

According to Pangram, the four-tier system tracks the level of AI involvement. "Light AI assistance" covers superficial changes like spelling corrections, grammar fixes, or translations that leave the core content intact.

"Moderate AI assistance" indicates the AI likely rewrote larger sections or added its own material, such as extra details, tone adjustments, or structural changes. Text generated entirely by models like ChatGPT falls under the "fully AI-generated" label.

To train the model, Pangram instructed AI systems to edit human texts at varying levels of intensity, the company explains in a technical blog post (Paper).

Pangram claims 99.98 percent accuracy

Pangram claims the new model achieves 99.98 percent accuracy when recognizing AI-generated text, with a false positive rate close to zero. According to the company, human essays are classified as follows:

| Human essays classified as | Rate |
|---|---|
| Fully human-written | 99.84% |
| Slightly AI-assisted | 0.14% (1 in 700) |
| Moderately AI-assisted | 0.013% (1 in 7,500) |
| Fully AI-generated | 0.0064% (1 in 15,000) |
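To make the rates easier to compare, the short Python sketch below converts each reported percentage into a "1 in N" ratio and into an expected count per batch of human essays. The rates are the ones Pangram reports; the helper names and the batch size of 100,000 essays are illustrative assumptions, not part of Pangram's tooling.

```python
# Rates reported by Pangram for human-written essays (percent of essays).
reported_rates = {
    "Fully human-written": 99.84,
    "Slightly AI-assisted": 0.14,
    "Moderately AI-assisted": 0.013,
    "Fully AI-generated": 0.0064,
}


def one_in_n(percent: float) -> float:
    """Convert a percentage into the N of a '1 in N' ratio."""
    return 100.0 / percent


def expected_count(percent: float, essays: int) -> float:
    """Expected number of essays landing in a category, given its rate."""
    return essays * percent / 100.0


# Hypothetical batch size, chosen only for illustration.
BATCH = 100_000

for label, pct in reported_rates.items():
    print(
        f"{label}: 1 in {one_in_n(pct):,.0f}, "
        f"about {expected_count(pct, BATCH):,.1f} per {BATCH:,} human essays"
    )
```

The exact conversions come out to roughly 1 in 714, 1 in 7,692, and 1 in 15,625, so the "1 in N" figures in the table are rounded. At the reported rate, a batch of 100,000 human essays would yield about six flagged as fully AI-generated.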

These figures come from Pangram itself and have not been independently verified. Pangram also admits that the boundaries between categories are "more of an art than a science." There is no precise definition of the threshold between "light" and "moderate" AI assistance.

The detailed four-category breakdown is a paid feature. Free users see a simplified version: lightly assisted text appears as "Human," while moderately assisted text is labeled "AI-generated."
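The free-tier simplification can be pictured as a lookup from four categories to two labels. The sketch below is only an illustration: the `Category` enum and `free_tier_label` function are hypothetical names, not Pangram's API. The mappings for the two assisted tiers come from the description above, while the mappings for the two outer tiers are assumed.

```python
from enum import Enum


class Category(Enum):
    """The four tiers described in the article (names are hypothetical)."""
    FULLY_HUMAN = "fully human-written"
    LIGHT = "lightly AI-assisted"
    MODERATE = "moderately AI-assisted"
    FULLY_AI = "fully AI-generated"


# Four-tier result collapsed to the two labels free users see.
FREE_TIER_LABEL = {
    Category.FULLY_HUMAN: "Human",      # assumption: not stated explicitly
    Category.LIGHT: "Human",            # stated in the article
    Category.MODERATE: "AI-generated",  # stated in the article
    Category.FULLY_AI: "AI-generated",  # assumption: not stated explicitly
}


def free_tier_label(category: Category) -> str:
    """Return the simplified label shown to free users for a category."""
    return FREE_TIER_LABEL[category]


for category in Category:
    print(f"{category.value} -> {free_tier_label(category)}")
```

One consequence of this collapse is that the dividing line between "Human" and "AI-generated" for free users sits exactly at the light/moderate boundary, the threshold Pangram itself describes as imprecise.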

Earlier research backs Pangram's detection claims, but broader questions remain

Even before the release of version 3.0, an independent study by the University of Chicago confirmed Pangram's above-average performance. According to the study, the detector achieved false positive and false negative rates of practically zero for medium-to-long texts and proved robust against manipulation attempts by "humanizer" tools.

However, the researchers warned against a "technical arms race" between detectors, AI models, and circumvention tools. Whether AI text detectors are a good idea at all, even if they work technically, remains controversial. AI pioneer Andrej Karpathy, for example, considers attempts to detect AI text fundamentally futile and calls for a transformation of the education system instead.
