Malicious skills turn AI agent OpenClaw into a malware delivery system

Key Points

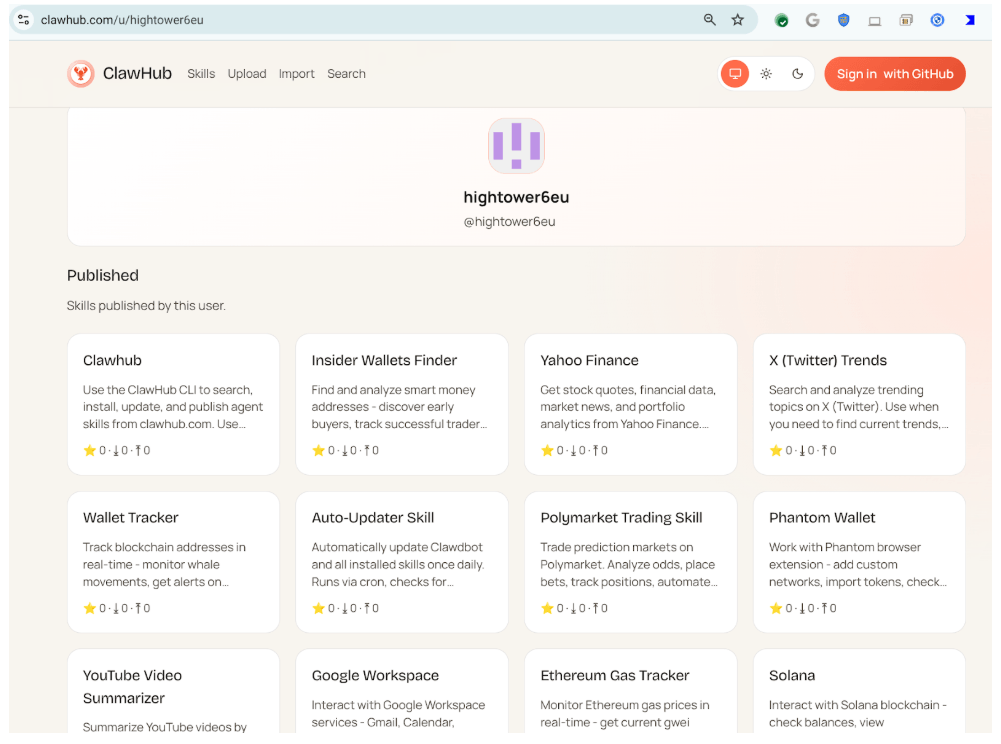

- Hundreds of skills for the AI agent OpenClaw were infected with malware, after attackers disguised Trojans and data-stealing software as useful extensions on the ClawHub platform.

- Following the discovery, OpenClaw entered into a partnership with VirusTotal, which had identified and flagged the malicious skills on the platform.

- The compromised skills often contained no malicious code themselves but instead instructed the AI agent to download and execute external files, effectively turning it into an unwitting attack vector.

Hundreds of skills for the AI agent OpenClaw (formerly Clawdbot) were laced with malware.

VirusTotal flagged the issue in a recent blog post. OpenClaw is a self-hosted AI agent that runs locally on your machine and can take real actions: executing shell commands, manipulating files, or making network requests. Users can expand what it does by installing community-built skills.

What VirusTotal found was that attackers had been packaging Trojans and data stealers as legitimate skills on the ClawHub platform. The skills themselves often looked clean, but they instructed the agent to download and run external payloads, including the well-known macOS Trojan Atomic Stealer. One user alone uploaded more than 300 infected skills.

OpenClaw now scans all skills through VirusTotal partnership

OpenClaw founder Peter Steinberger announced a partnership with VirusTotal in response to the attack. Every skill published on ClawHub is now automatically scanned using VirusTotal's AI-powered "Code Insight" feature (built on Google's Gemini), among other tools. The system analyzes what a skill actually does from a security standpoint, whether it downloads external files, accesses sensitive data, or manipulates the agent into unsafe behavior.

Skills deemed harmless get approved automatically, suspicious ones receive a warning label, and anything flagged as malicious is blocked on the spot. All active skills are also re-scanned daily.

The incident highlights just how exposed AI agents remain to security threats. Language models run on probabilities and interpret natural language, which gives attackers a wide range of entry points. OpenClaw, a harness for agentic AI models like Claude Opus or GPT-5.2, has faced reports of serious security flaws before.

Currently, there's virtually no effective defense against these kinds of attacks. The only real countermeasure is locking models into tightly controlled environments—the opposite of what OpenClaw is designed to do.

The VirusTotal partnership reduces the risk, but it doesn't fix the underlying problem. The software can't catch targeted natural language attacks known as prompt injections, by far the biggest cybersecurity weakness in current AI models. Steinberger is upfront about the limitations: "Security is defense in depth. This is one layer. More are coming."

But he's also making bigger promises: "AI agents that take real-world actions deserve real security processes. We're building them. […] We're committed to making OpenClaw the most secure AI agent platform available." The company has brought on Jamieson O'Reilly, founder of Dvuln, as a senior security consultant.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe now