Microsoft's research argues AI media authentication doesn't work reliably, yet new laws assume it does

A new technical report from Microsoft systematically evaluates how to distinguish authentic media from AI-generated content. The findings are sobering: no single method is reliable on its own, and even combined approaches have limits. Whether Microsoft will actually implement its own recommendations remains unclear.

AI-powered deception is spreading across the internet at an accelerating pace. The White House recently shared a doctored image of a protester, and Russian influence operations are distributing AI-generated videos to discourage Ukrainians from enlisting. Against this backdrop, Microsoft has published a technical report that examines how to tell real media from synthetic content - and where current methods fall short.

The report, titled "Media Integrity and Authentication: Status, Directions, and Futures," was produced as part of Microsoft's LASER program for long-term AI safety, led by Chief Scientist Eric Horvitz. A cross-disciplinary team spanning AI, cybersecurity, social science, human-computer interaction, policy, operations, and governance spent several months evaluating three core technologies: cryptographically secured provenance metadata, invisible watermarks, and digital fingerprints based on soft-hash techniques.

Each of the three approaches has serious weaknesses

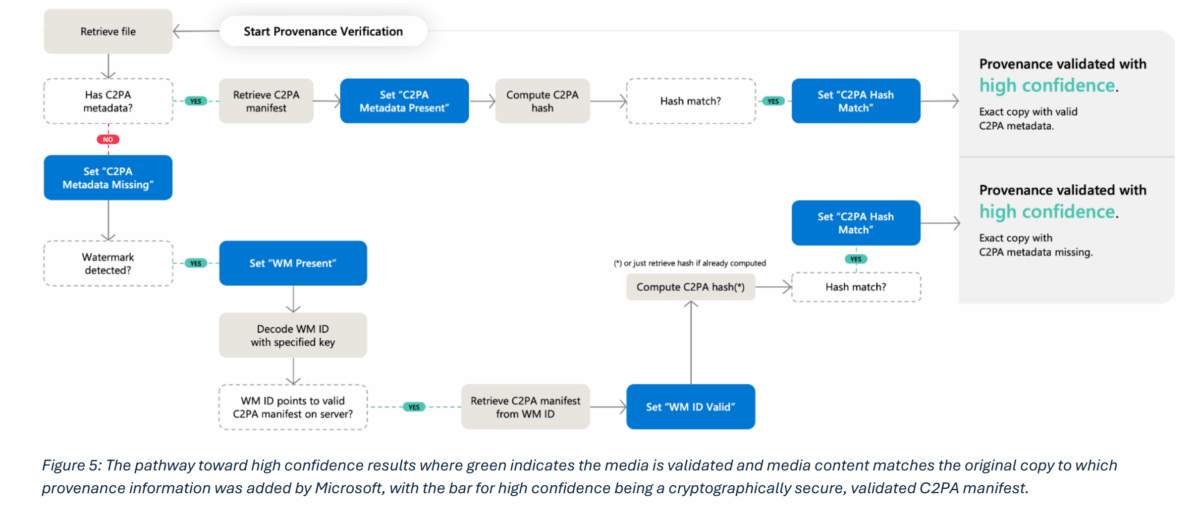

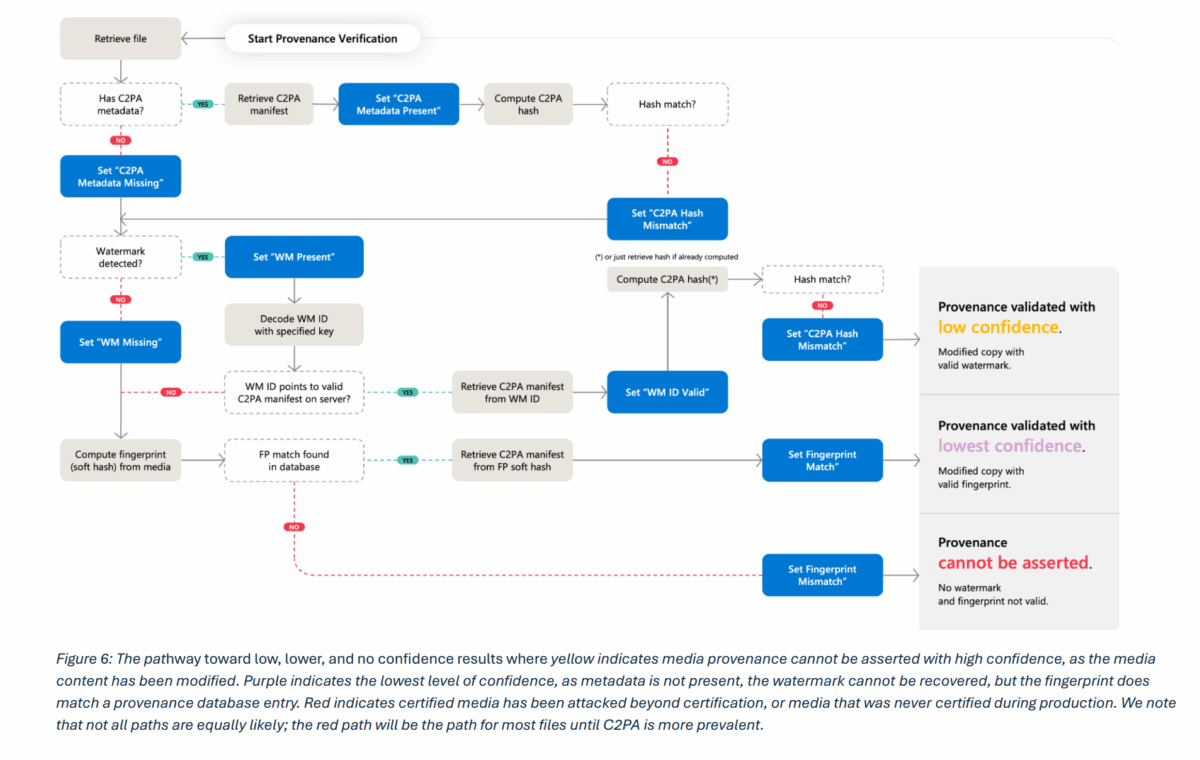

The three methods tackle the problem from different angles. Provenance metadata based on the open C2PA standard attaches cryptographically signed information to a file - recording who created it, what tool was used, and what edits were made. If anyone tampers with the metadata after the fact, the signature breaks and the forgery becomes detectable.
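The tamper-evidence idea can be illustrated with a minimal sketch. It is not the C2PA format: C2PA relies on certificate-based asymmetric signatures, while this example substitutes a shared-secret HMAC from Python's standard library to stay self-contained. The key, the field names, and both function names are assumptions made for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real system would use a private signing key
# tied to a certificate, not a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def sign_manifest(content: bytes, claims: dict, key: bytes = SIGNING_KEY) -> dict:
    """Bind claims (creator, tool, edits) to a hash of the content and sign both."""
    manifest = {
        "claims": claims,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {**manifest, "signature": signature}


def verify_manifest(content: bytes, signed: dict, key: bytes = SIGNING_KEY) -> bool:
    """Return True only if neither the manifest nor the content changed after signing."""
    manifest = {k: v for k, v in signed.items() if k != "signature"}
    payload = json.dumps(manifest, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signed.get("signature", "")):
        return False  # the metadata was altered after signing
    return manifest["content_sha256"] == hashlib.sha256(content).hexdigest()
```

Changing a claim or a single byte of the content makes `verify_manifest` return `False`. The check says nothing about a file whose manifest was removed entirely, for example by taking a screenshot.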

Invisible watermarks embed information that is imperceptible to the human eye or ear directly into the media content. Because the signal lives in the content itself rather than in attached metadata, it can survive many processing steps, such as uploading to social networks. Digital fingerprints, meanwhile, calculate a kind of mathematical shortcode from the content itself and store it in a database. When an image or video is checked later, the code reveals whether it matches a known original.
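A rough sketch of the fingerprint idea, using a simple "average hash" over a small grayscale grid. The report does not specify which soft-hash technique it evaluated, so the algorithm, the function names, and the `max_distance` threshold are all assumptions chosen for illustration.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Set one bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def is_match(a: int, b: int, max_distance: int = 2) -> bool:
    """Treat fingerprints as matching if they differ in only a few bits."""
    return hamming_distance(a, b) <= max_distance


# A 4x4 grayscale "image", a uniformly brightened copy, and an inverted copy.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [20, 190, 40, 225],
]
brightened = [[p + 10 for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

print(is_match(average_hash(original), average_hash(brightened)))  # True
print(is_match(average_hash(original), average_hash(inverted)))    # False
```

Because matching tolerates small differences, two unrelated images can occasionally land on the same or a nearby code, which is the collision risk the report describes.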

According to the report, each method on its own has significant weaknesses. Provenance metadata can be stripped out easily - a simple screenshot is enough. Watermarks are probabilistic, meaning they don't deliver 100 percent reliable results and can both trigger false alarms and miss forgeries. Digital fingerprints suffer from hash collisions, where two different files produce the same shortcode, as well as high storage costs. The report also flags a point that's often overlooked: even validated provenance data doesn't prove that content is true. It only proves the content hasn't been altered since it was signed.
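Why watermark detection is probabilistic can be seen in a toy sketch. It assumes a textbook spread-spectrum scheme, not any method named in the report: a secret key generates a pattern of +1/-1 values, the pattern is added faintly to the samples, and detection compares a correlation score against a threshold. All names and parameters are hypothetical.

```python
import random


def make_pattern(key: int, n: int) -> list[int]:
    """Derive a pseudo-random +1/-1 pattern from a secret key."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(samples: list[float], key: int, strength: float) -> list[float]:
    """Add the key's pattern to the samples at the given strength."""
    pattern = make_pattern(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pattern)]


def detection_score(samples: list[float], key: int) -> float:
    """Correlate mean-centered samples with the key's pattern.

    The score is near `strength` for marked content and near zero for
    unmarked content, but in both cases it fluctuates with the content.
    """
    pattern = make_pattern(key, len(samples))
    mean = sum(samples) / len(samples)
    return sum((s - mean) * p for s, p in zip(samples, pattern)) / len(samples)


def is_watermarked(samples: list[float], key: int, threshold: float) -> bool:
    """Declare a watermark present if the score exceeds the threshold."""
    return detection_score(samples, key) > threshold
```

The detector never reads a flag. It estimates a statistic and compares it to a cutoff. A lower threshold catches more degraded watermarks but raises the false-alarm rate, a higher one does the opposite, and edits that shrink the score push marked content below the line.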

In a conversation with MIT Technology Review, Horvitz noted that this is something he has to keep emphasizing to lawmakers. The goal isn't to make judgments about what's true and what isn't, he told the magazine - it's about developing labels that simply tell people where something came from.

Only 20 out of 60 combinations achieve high confidence

The research team modeled 60 different combinations of the three methods and tested how they perform under realistic attack scenarios. Only 20 of those combinations achieve what the report calls "high-confidence authentication." That requires either a validated C2PA manifest where stored checksums match the actual content, or a detected watermark that points to such a manifest in external storage.

The remaining 40 combinations deliver lower confidence levels or no reliable conclusions at all. Microsoft therefore recommends that public verification tools should only display high-confidence results. Less reliable signals, like possible fingerprint matches, should be accessible only to forensic specialists. The reasoning: unreliable results could create more confusion than clarity.
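The two high-confidence conditions and the display recommendation can be sketched as a small decision function. This does not reproduce the report's 60-combination model. The signal names, the single "low" tier, and the label strings are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Confidence(Enum):
    HIGH = "high"
    LOW = "low"
    NONE = "none"


@dataclass
class Signals:
    manifest_valid: bool = False       # C2PA manifest signature validates
    checksums_match: bool = False      # stored checksums match the content
    watermark_detected: bool = False
    watermark_links_to_valid_manifest: bool = False  # manifest in external storage
    fingerprint_match: bool = False


def classify(s: Signals) -> Confidence:
    """Apply the two high-confidence conditions described in the article."""
    if s.manifest_valid and s.checksums_match:
        return Confidence.HIGH
    if s.watermark_detected and s.watermark_links_to_valid_manifest:
        return Confidence.HIGH
    # Assumption: any other positive signal is lumped into one low tier.
    if s.manifest_valid or s.watermark_detected or s.fingerprint_match:
        return Confidence.LOW
    return Confidence.NONE


def public_label(s: Signals) -> str:
    """Public tools show only high-confidence results."""
    if classify(s) is Confidence.HIGH:
        return "Provenance verified"
    return "No high-confidence result"


def forensic_view(s: Signals) -> Confidence:
    """Forensic specialists also see the weaker signals."""
    return classify(s)
```

A lone fingerprint match, for instance, yields "No high-confidence result" in the public view while still surfacing as `Confidence.LOW` for forensic analysts.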

Reversal attacks can make fakes look real and real content look fake

The report goes into particular detail on so-called reversal attacks - manipulations designed to invert authenticity signals. In one scenario, an attacker takes an authentic photo and makes minimal edits using an AI tool. The image gets correctly signed as "AI-modified," but a platform with poor display logic might simply label it "AI-generated" without showing the extent of the change. The result: a real photo gets discredited.

In another scenario, an attacker generates an AI image, strips out the watermark and manifest, and adds a forged camera manifest. Without reliable lists of trusted signers, a verification tool would flag the synthetic image as authentic.
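The role of a trust list in this second scenario can be sketched as follows. The signer identifiers and the three result strings are hypothetical, and real trust lists are built on certificate chains rather than plain names.

```python
# Hypothetical allowlist of signer identities a verifier is willing to trust.
TRUSTED_SIGNERS = frozenset({"example-camera-ca", "example-cloud-signer"})


def evaluate_manifest(
    signature_valid: bool,
    signer_id: str,
    trusted: frozenset = TRUSTED_SIGNERS,
) -> str:
    """A valid signature is not enough: the signer must also be on the trust list."""
    if not signature_valid:
        return "invalid"
    if signer_id not in trusted:
        # A forged camera manifest signed with the attacker's own key ends up
        # here: mathematically valid, but from an unknown signer.
        return "untrusted-signer"
    return "trusted"
```

Without the second check, a manifest the attacker signed themselves would pass as a genuine camera manifest.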

Microsoft recommends that platforms always show the so-called edit scope when displaying provenance information - making clear exactly where changes occurred. Preview images of the original media should also be displayed. Distribution platforms like social networks should receive full manifest details so users can verify them through dedicated services.
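A rough sketch of display logic that reports edit scope instead of a blanket label. The `Edit` structure, its fields, and the label wording are assumptions. The report does not prescribe this format.

```python
from dataclasses import dataclass


@dataclass
class Edit:
    tool: str
    ai_used: bool
    region: tuple[int, int, int, int]  # x, y, width, height in pixels


def provenance_label(
    edits: list[Edit],
    image_width: int,
    image_height: int,
    fully_generated: bool = False,
) -> str:
    """Describe how much of an image AI tools touched, not just whether they did."""
    if fully_generated:
        return "AI-generated"
    ai_edits = [e for e in edits if e.ai_used]
    if not ai_edits:
        return "No AI edits recorded"
    # Summing region areas double-counts overlaps, so this is an upper bound.
    edited_area = sum(e.region[2] * e.region[3] for e in ai_edits)
    share = min(edited_area / (image_width * image_height), 1.0)
    return f"AI-modified: {len(ai_edits)} region(s), at most {share:.1%} of the image"


edits = [Edit(tool="ExampleRetouch", ai_used=True, region=(100, 50, 400, 300))]
print(provenance_label(edits, 4000, 3000))
# AI-modified: 1 region(s), at most 1.0% of the image
```

A label like this makes the first reversal attack harder: a small AI retouch no longer reads the same as a fully synthetic image.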

Local devices are the weak link in the chain

Truly trustworthy results are only possible when media creation and signing happen in a secure cloud environment, according to the report. Local devices - especially conventional computers - don't offer enough protection. Administrators can modify programs and misuse cryptographic keys. Smartphones running Android and iOS fare somewhat better, since they can distinguish between tampered and unmodified operating systems.

For cameras, the picture is mixed. Newer models like the Google Pixel 10, Nikon Z6 III, and Canon EOS R1 already implement the C2PA standard. Basic compact cameras, however, typically lack secure chips. Microsoft recommends using hardware security enclaves - isolated areas within a processor that protect sensitive operations like content signing from access by other programs. The report also recommends adopting C2PA specification version 2.3 or later, which introduced security levels for signing certificates for the first time.

AI detectors help but face a fundamental paradox

AI-based deepfake detection tools play a supplementary but explicitly secondary role in the report. Proprietary detectors can reach roughly 95 percent accuracy in scenarios without targeted adversarial attacks, according to Microsoft's AI and public interest team. Freely available detection tools, by contrast, scored below 70 percent accuracy in testing.

The report also identifies a fundamental paradox: the better a detector performs, the more people trust its results. But the mistakes made by the best detectors - especially missed forgeries - can cause the most damage precisely because they carry the most trust.

On top of that, detectors are locked in a permanent arms race with attackers. Research has shown that sophisticated attacks can push some detectors' accuracy below 30 percent.

Laws are demanding what technology can't yet deliver

The report also analyzes legislation across several countries. California's AI transparency law, which takes effect in August 2026, requires AI providers to embed both visible and hidden disclosures in AI-generated content that are "permanent or extraordinarily difficult to remove," as far as technically feasible. The EU AI Act mandates that synthetic content be machine-readably labeled as AI-generated, with penalties of up to three percent of global revenue or 15 million euros, whichever is higher. Similar regulations exist or are emerging in China, India, and South Korea.
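The "whichever is higher" rule for the EU penalty cap works out as below. The sketch uses only the figures given above, not the legal text, and the function name is made up for illustration.

```python
def max_penalty_eur(global_revenue_eur: int) -> int:
    """Upper bound on the fine: 3 percent of global revenue or EUR 15 million,
    whichever is higher (figures as stated in the article)."""
    return max(global_revenue_eur * 3 // 100, 15_000_000)


print(max_penalty_eur(100_000_000))    # 15000000 (flat amount applies)
print(max_penalty_eur(1_000_000_000))  # 30000000 (3 percent applies)
```

The two amounts are equal at 500 million euros in revenue. Above that, the percentage-based cap is the higher one.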

Microsoft explicitly warns that some of these requirements are technically impossible to meet. Visible watermarks can be removed by amateurs. Invisible watermarks based on current best practices can be stripped by skilled attackers. Recent research shows that diffusion-based image editing - modifying images through generative AI models - can break even robust watermarks. Rushing poorly functioning systems to market could undermine public trust in authentication methods altogether.

The report argues that policy expectations should be raised gradually, in step with advances in research and broadly deployable technical methods.

Microsoft wants to shape the rules but won't commit to following them

In an interview with MIT Technology Review, Horvitz described the work as a kind of recommendation for self-regulation, while acknowledging that it's also meant to strengthen Microsoft's reputation as a trustworthy provider. He told the magazine that the company is trying to be a preferred, trusted provider for people who want to understand what's happening in the world.

When MIT Technology Review asked whether Microsoft would implement its own recommendations across its platforms, Horvitz deflected. A written statement said only that product groups and executives had been involved in the study and that development teams would take action based on the findings.

Microsoft sits at the center of a sprawling AI ecosystem. The company operates Copilot, the Azure cloud platform through which customers access OpenAI and other large AI models, and the professional network LinkedIn, and it holds a multibillion-dollar stake in OpenAI. According to MIT Technology Review, no binding commitment to implement the company's own blueprint was made.

Hany Farid, a professor of digital forensics at UC Berkeley who was not involved in the study, told MIT Technology Review that industry-wide adoption of the framework would make it "significantly harder" to deceive the public with manipulated content. Sophisticated individuals or state actors could still bypass the tools, but a substantial share of misleading content could be eliminated. Farid added that he doesn't think it solves the problem entirely, but it takes a big chunk out of it.
