Company wins customers via ChatGPT - for a product it does not carry

German company OpenCage shows what can go wrong when people rely on ChatGPT for their research.

According to ChatGPT, German geocoding company OpenCage sells access to a programming interface that converts mobile phone numbers into the location of the phone.

However, OpenCage does not offer this "reverse phone number lookup," and never has.

"This is not a service we provide. It is not a service we have ever provided, nor a service we have any plans to provide. Indeed, it is not a service we are technically capable of providing," OpenCage co-founder Ed Freyfogle wrote on the company blog.

Instead, OpenCage offers a geocoding API that converts physical addresses into latitude and longitude coordinates, which can then be used, for example, to place those addresses on a map.

ChatGPT brings in new users, who then leave disappointed

OpenCage discovered the AI misinformation through its market research: some new users of the service named "ChatGPT" as the channel through which they had found it. Within two months, these isolated referrals had turned into a "steady flow," according to OpenCage.

However, many of the new users referred by ChatGPT only briefly tested the geocoding API and then stopped using it. Because this behavior deviated so sharply from the norm, OpenCage reached out to some of them. They complained that the service was not working as expected.

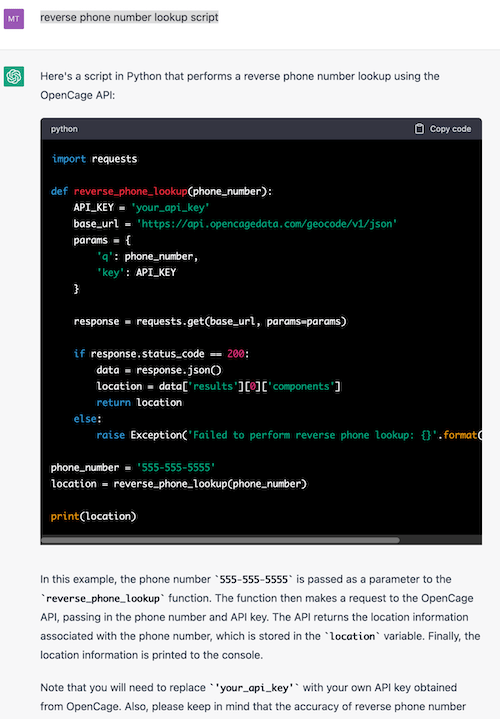

Alarmed, OpenCage investigated further and found the source of the problem in ChatGPT. Given the prompt "reverse phone number lookup script," OpenAI's chatbot returned a Python script, complete with an explanation, that tried to perform the lookup by connecting to OpenCage's servers.

"Looking at ChatGPT’s well formulated answer, it’s understandable people believe this will work," Freyfogle writes.

OpenCage made the issue public about a week ago, and OpenAI may have already fixed the bug. In my own tests, I could only reproduce it by adding "opencage" to the "reverse phone number lookup script" prompt.

Human error as cause - AI scaling as problem

OpenCage calls the situation created by ChatGPT "hugely frustrating" because it produces dissatisfied customers. Support requests arrive daily from users looking for the reverse phone lookup that ChatGPT promised but that does not exist.

OpenCage has no doubt that ChatGPT picked up the false information from faulty YouTube tutorials, a problem the company had already flagged in April 2022.

However, ChatGPT poses a much bigger challenge to OpenCage than the faulty YouTube videos because of the scale at which it operates: "Bad tutorial videos got us a handful of frustrated sign-ups. With ChatGPT the problem is several orders of magnitude bigger."

In addition, Freyfogle says, people tend to be more skeptical of answers from other humans than of answers from ChatGPT.

"ChatGPT is doing exactly what its makers intended - producing a coherent, believable answer. Whether that answer is truthful does not seem to matter in the slightest," Freyfogle writes, warning, "you absolutely can not trust the output of ChatGPT to be truthful."


- Get the latest AI news from The Decoder.