OpenAI's GPT-4 is a safer and more useful ChatGPT that understands images

Key Points

- OpenAI has announced GPT-4, which the company describes as its most "creative and collaborative" AI model yet.

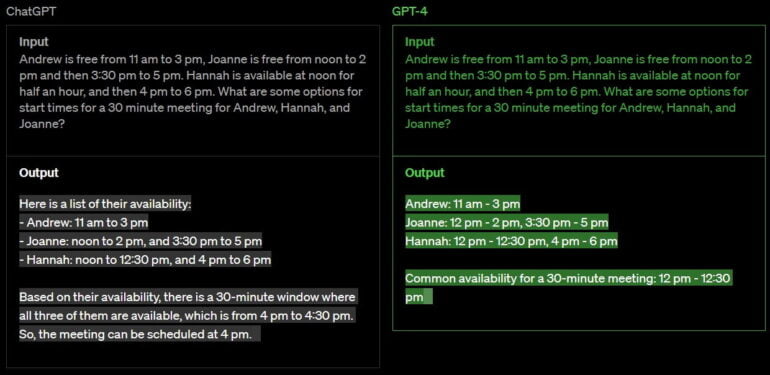

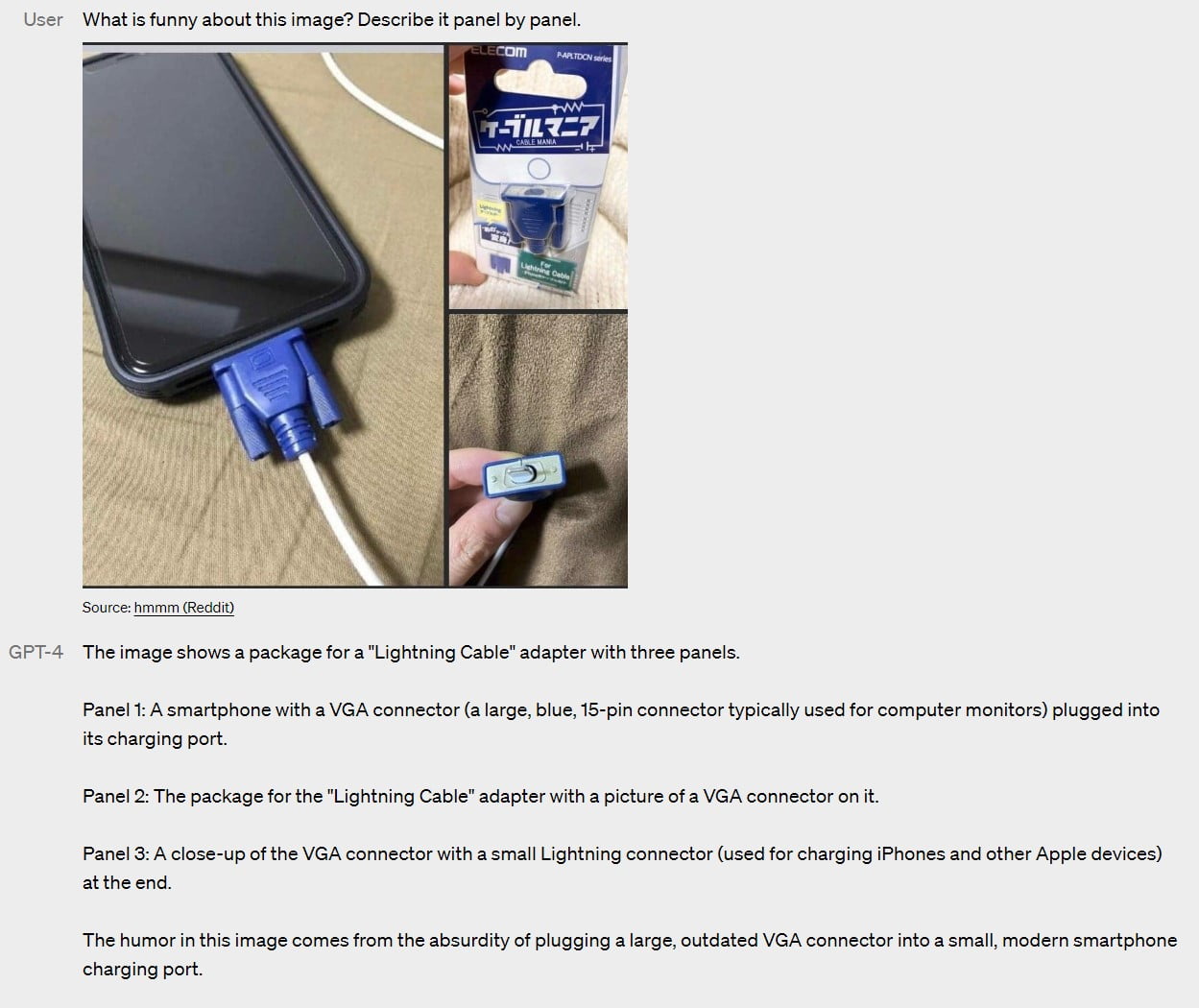

- GPT-4 is said to reason better than ChatGPT and can also process images as input, such as explaining a visual joke in a picture.

- OpenAI will initially make GPT-4 available through ChatGPT Plus. Developers can join a waitlist for API access. However, prices are significantly higher than for GPT-3.5.

Update:

- Added developer demo from OpenAI President Greg Brockman

Update from March 15, 2023:

In a livestream for developers, OpenAI President Greg Brockman presented some of GPT-4's capabilities. Probably the most impressive demo in the stream was generating code for a working website from nothing more than a handwritten note. This demo starts at around 17:30.

Original article from March 14, 2023:

According to OpenAI, GPT-4 is "more creative and collaborative" than any previous AI system, has a broader knowledge base, and is better at solving problems. As a multimodal system, it accepts images as input in addition to text.

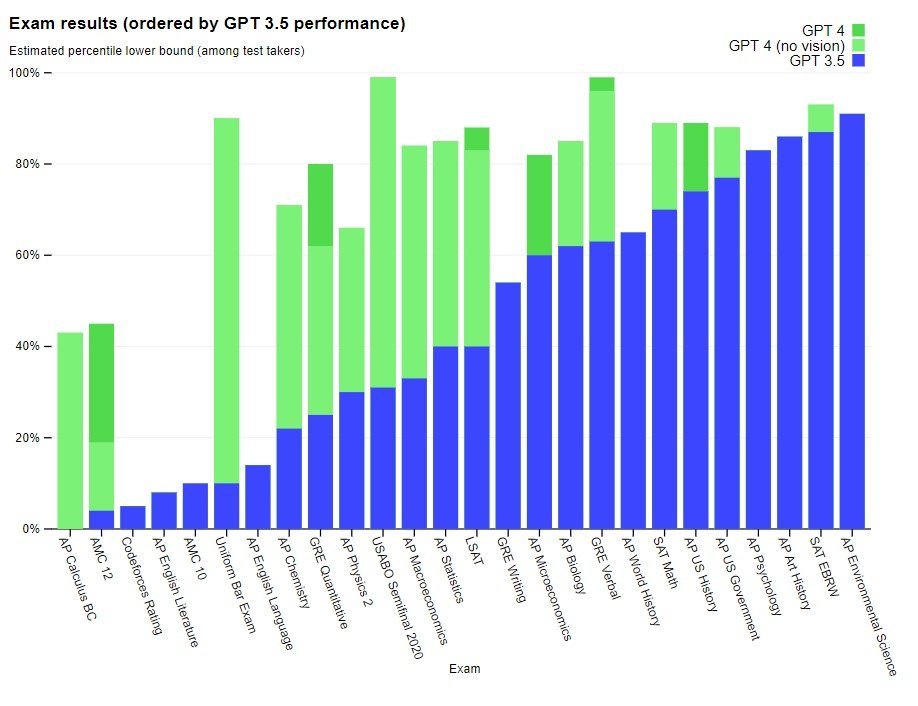

According to OpenAI, GPT-4 is a "breakthrough" in tasks that require structured problem-solving. For example, GPT-4 can provide step-by-step instructions in response to a question about how to clean an aquarium. In a simulated bar exam, GPT-4 scored in the top ten percent of test takers, whereas GPT-3.5 scored in the bottom ten percent.

GPT-4 can handle more than 25,000 words, making it suitable for generating larger documents and analyses. GPT-4's training data ends in September 2021, and the model does not learn from its own experience. GPT-3.5 was a first test run for the new system, according to OpenAI.

The new AI system is based directly on lessons learned from adversarial test programs and feedback on ChatGPT, OpenAI said. It is said to outperform existing systems significantly in terms of factuality and steerability, although it is "far from perfect".

GPT-4 also outperforms its predecessor by up to 16 percent on common machine learning benchmarks, and outperforms GPT-3.5 by 15 percent on multilingual tasks.

OpenAI says it has also developed new methods to predict the performance of GPT-4 in some domains, using models trained with only one-thousandth the computational effort of GPT-4.

In this prediction of AI capabilities, OpenAI sees an important safety aspect that is not being adequately addressed given the potential impact of AI. "We are scaling up our efforts to develop methods that provide society with better guidance about what to expect from future systems, and we hope this becomes a common goal in the field," OpenAI writes.

OpenAI also started using GPT-4 to assist humans in evaluating AI outputs. This, it says, is the second phase of its previously announced alignment strategy.

GPT-4 can process visual input

The most obvious new feature of GPT-4 is its ability to process images as input. For example, it can explain a meme or point out what is unusual about a picture using only image input. It can also break down infographics step by step, summarize scientific graphs, or explain individual aspects of them.

In common benchmarks, GPT-4 already outperforms existing text-image models. OpenAI says it is still discovering "new and exciting tasks" that GPT-4 can solve visually.

To control the model, OpenAI relies on system messages for API clients. These can be used to determine, to some extent, the character of the model's responses, for example whether GPT-4 responds in the style of a Hollywood actor or in a Socratic manner.

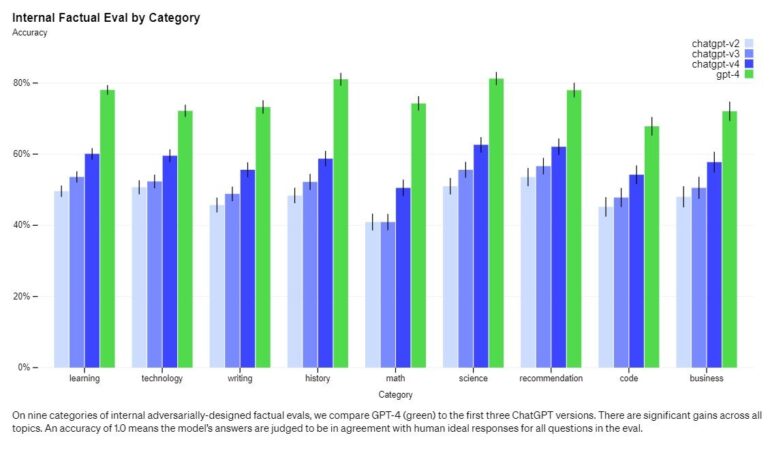
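As a minimal sketch of how a system message might be combined with a user query, the snippet below builds a chat-style message list. The `role`/`content` layout follows the chat format OpenAI documents for its chat models. The helper name `build_messages` and both prompt texts are hypothetical, and no API call is made.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Return a chat-style message list with the system message first."""
    return [
        # The system message sets the overall character of the responses.
        {"role": "system", "content": system_prompt},
        # The user message carries the actual question.
        {"role": "user", "content": user_prompt},
    ]


# Hypothetical prompts, for illustration only.
socratic = build_messages(
    "You are a tutor who always responds in the Socratic style.",
    "How do I clean an aquarium?",
)

for message in socratic:
    print(f"{message['role']}: {message['content']}")
```

Swapping only the first argument changes the style of the answers while the user question stays the same, which is the kind of steering described above.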

Similar limitations to previous GPT models

Despite significant advances in reasoning and multimodality, GPT-4 has similar limitations to its predecessor. For example, it is still not completely reliable and is prone to hallucinations. However, in OpenAI's internal adversarial factuality evaluations, GPT-4 scores on average 40 percent higher than GPT-3.5 and achieves average accuracy scores between 70 and 80 percent.

GPT-4 also continues to create biases or reinforce existing ones. There is "still a lot of work to be done," OpenAI admits. In this context, the company points to recently announced plans for customizable AI language models that can reflect the values of different users and thus represent a greater degree of diversity of opinion.

OpenAI has significantly improved safety with respect to queries that the model should not answer because they violate OpenAI's content policies. Compared to GPT-3.5, GPT-4 is said to answer 82 percent fewer critical queries. It is also said to be 29 percent more likely to provide answers that comply with OpenAI policies for sensitive queries, such as those related to medical topics.

"We spent 6 months making GPT-4 safer and more aligned. GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations."

OpenAI

GPT-4 launches via ChatGPT Plus, API via waitlist

OpenAI is initially making GPT-4 available to paying customers of ChatGPT Plus. The service costs $20 per month and is available internationally. Developers will be given access via an API, as with the previous models. OpenAI offers a GPT-4 waitlist for API access.

The context length of GPT-4 is limited to about 8,000 tokens, which is about 4,000 to 6,000 words. There is also a version that can handle up to 32,000 tokens, which is about 25,000 words, but it has limited access at the moment.
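The token and word figures above can be turned into a rough check of whether a text fits into a context window. This is only a sketch: the ratio of 0.75 words per token is an assumption taken from the upper end of the "4,000 to 6,000 words per 8,000 tokens" range, the variant labels `gpt-4-8k` and `gpt-4-32k` are hypothetical, and real token counts depend on the tokenizer and the language of the text.

```python
import math

# Assumption: about 0.75 words per token (6,000 words per 8,000 tokens).
WORDS_PER_TOKEN = 0.75

# Context limits from the article; the keys are hypothetical labels.
CONTEXT_TOKENS = {"gpt-4-8k": 8_000, "gpt-4-32k": 32_000}


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its whitespace-separated words."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, variant: str) -> bool:
    """Return True if the estimated token count is within the variant's limit."""
    return estimate_tokens(text) <= CONTEXT_TOKENS[variant]


sample = "How do I clean an aquarium?"
print(estimate_tokens(sample), fits_in_context(sample, "gpt-4-8k"))
```

Because the prompt and the completion share the same window, a practical check would also reserve room for the expected answer.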

The prices are $0.03 per 1k prompt tokens and $0.06 per 1k completion tokens for the 8k version, or $0.06 per 1k prompt tokens and $0.12 per 1k completion tokens for the 32k version. That is significantly higher than the prices of ChatGPT and GPT-3.5. The cheapest model, gpt-3.5-turbo, costs only about $0.002 per 1k tokens.
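To make the price difference concrete, here is a small cost estimator built on the per-token prices quoted above. It is a sketch under stated assumptions: the variant labels `gpt-4-8k` and `gpt-4-32k` and the function name `estimate_cost` are hypothetical, and gpt-3.5-turbo is assumed to cost the same $0.002 per 1k tokens for prompts and completions.

```python
# Prices in US dollars per 1,000 tokens: (prompt, completion).
# The GPT-4 keys are hypothetical labels for the 8k and 32k versions.
PRICES_PER_1K = {
    "gpt-4-8k": (0.03, 0.06),
    "gpt-4-32k": (0.06, 0.12),
    # Assumption: one flat rate for prompt and completion tokens.
    "gpt-3.5-turbo": (0.002, 0.002),
}


def estimate_cost(variant: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimate the cost in US dollars of a single request."""
    prompt_price, completion_price = PRICES_PER_1K[variant]
    total = prompt_tokens * prompt_price + completion_tokens * completion_price
    return total / 1000


for variant in PRICES_PER_1K:
    cost = estimate_cost(variant, prompt_tokens=1000, completion_tokens=1000)
    print(f"{variant}: ${cost:.3f}")
```

Under these assumptions, a request with 1,000 prompt tokens and 1,000 completion tokens costs about $0.09 on the 8k version of GPT-4 and about $0.004 on gpt-3.5-turbo, a difference of roughly a factor of 22.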

Announcing GPT-4, a large multimodal model, with our best-ever results on capabilities and alignment: https://t.co/TwLFssyALF pic.twitter.com/lYWwPjZbSg

— OpenAI (@OpenAI) March 14, 2023

The current OpenAI report does not provide further details on architecture (including model size), hardware, training computation, dataset construction, and the like. OpenAI justifies this with the competitive market.

The fact that OpenAI does not disclose the number of parameters could also be read as a sign that the company no longer gives parameter count a decisive role in its PR. The number alone says little about the quality of a model, even though many people think it does.

In the run-up to the GPT-4 presentation, some absurd parameter counts circulated on social media to illustrate GPT-4's performance and to fuel the hype. For all previous models, OpenAI had communicated model size as a differentiating feature.

According to OpenAI, GPT-4's first customers include the language learning app Duolingo, the computer vision application Be My Eyes, and Morgan Stanley Wealth Management, which uses GPT-4 to organize its internal knowledge base. The Icelandic government is using GPT-4 to preserve its own language. "We have had the initial training of GPT-4 done for quite a while, but it’s taken us a long time and a lot of work to feel ready to release it", said OpenAI CEO Sam Altman.

On the heels of OpenAI's GPT-4 reveal, Microsoft also revealed that Bing Chat has been using GPT-4 from the beginning. So anyone who has interacted with "Sydney" in the past few weeks probably already has some understanding of GPT-4's capabilities.
