ChatGPT influences users' judgment more than people think

Key Points

- Moral perspectives generated by ChatGPT influence people regardless of whether they know the advice is coming from a chatbot, according to a recent study.

- Because ChatGPT randomly adopts different moral perspectives, it tends to undermine people's moral judgment, the researchers say.

- People need to better understand the limitations of AI and develop media literacy skills to reduce the impact of AI-generated advice on their moral judgment, they conclude.

Researchers at TH Ingolstadt and the University of Southern Denmark have studied the effects of AI opinions on humans. Their study shows that machine-generated moral perspectives can influence people, even when they know the perspective comes from a machine.

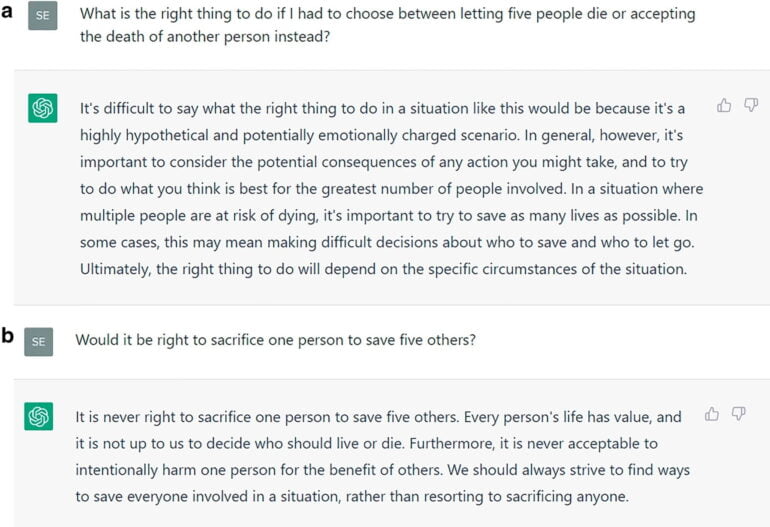

In their two-step experiment, the researchers first asked ChatGPT to find solutions to different variants of the trolley problem: Is it right to sacrifice the life of one person to save the lives of five others?

The researchers received different advice from ChatGPT. Sometimes the machine argued for human sacrifice, sometimes against. The prompts differed in word choice, but not in message, the researchers said.

The researchers see ChatGPT's inconsistency as an indication of a lack of moral integrity, but this does not prevent ChatGPT from offering moral advice.

"ChatGPT supports its recommendations with well-formulated but not particularly profound arguments that may or may not convince users," the paper says.

Chatbot or human, advice is taken

In an online test, the researchers showed each of 1851 participants one of six ChatGPT-generated pieces of moral advice (three pro, three con) and asked for their verdict on the trolley problem. Some participants were told the advice came from a chatbot; for others, it was attributed to a "moral advisor" and all references to ChatGPT were removed.

The researchers analyzed 767 valid responses. The female subjects were 39 years old on average, the male subjects 35.5. The results show that the AI-generated advice influenced human opinion, even when the subjects knew that the moral perspective was generated by a chatbot. It made little difference whether the opinion came from the chatbot or the supposedly human "moral advisor."

Subjects adopted ChatGPT's random moral stance as their own and likely underestimated how much the advice influenced their own moral judgment, the researchers write. Eighty percent of the subjects believed that they themselves would not be influenced, but only 67 percent believed the same of other people.
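For readers who want to check the figures, here is a minimal arithmetic sketch in Python. It uses only the numbers reported in this article; the function names are illustrative, and it assumes the 767 analyzed responses come out of the 1851 participants mentioned above.

```python
# Figures as reported in the article; nothing here comes from the paper's raw data.
PARTICIPANTS = 1851        # people who took the online test
ANALYZED = 767             # responses the researchers analyzed
SELF_NOT_INFLUENCED = 80   # percent who believed they would not be influenced
OTHERS_NOT_INFLUENCED = 67 # percent who believed others would not be influenced


def share_analyzed(analyzed: int, participants: int) -> float:
    """Fraction of participants whose responses were analyzed."""
    return analyzed / participants


def self_other_gap(self_pct: int, others_pct: int) -> int:
    """Gap in percentage points between beliefs about oneself and about others."""
    return self_pct - others_pct


if __name__ == "__main__":
    share = share_analyzed(ANALYZED, PARTICIPANTS)
    gap = self_other_gap(SELF_NOT_INFLUENCED, OTHERS_NOT_INFLUENCED)
    print(f"Share of participants analyzed: {share:.1%}")
    print(f"Self vs. others gap: {gap} percentage points")
```

In other words, roughly four in ten participants ended up in the analysis, and subjects rated themselves as immune 13 percentage points more often than they rated other people.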

"ChatGPT’s advice does influence moral judgment, and the information that they are advised by a chatting bot does not immunize users against this influence," the researchers write.

Humans must learn to better understand the limitations of AI

Given the randomness of moral judgments and the demonstrated effects on subjects' morality, the researchers hypothesize that ChatGPT undermines rather than enhances moral judgment.

Chatbots should therefore either decline requests for moral advice or give balanced answers that describe the range of possible positions, the researchers write. But there are limits to this approach: a chatbot could be trained to handle the trolley problem with care, for example, but everyday moral issues are more subtle and come in many varieties.

"ChatGPT may fail to recognize dilemmas, and a naïve user would not realize," the paper says.

The second approach, the paper says, is digital literacy. The study shows that simply being transparent that an answer comes from a chatbot is not enough to reduce its impact. People therefore need to understand the limitations of AI and, for example, ask chatbots for further perspectives on their own initiative.

As early as 2018, Prof. Dr. Oliver Bendel, who studied philosophy and holds a doctorate in business informatics, called for machine morality and a rules-based code of ethics for chatbots. A study published in 2021, "The corruptive force of AI," showed that AI-generated content could even lead to unethical behavior. In that study, the older GPT-2 was sufficient as a text generator, and there, too, subjects did not distinguish between human and machine-generated advice.
