No foundational LLM currently complies with EU AI Act

Key Points

- Researchers from Stanford have examined the extent to which large language models already comply with the EU AI Act. Open-source models performed best.

- Although commercial providers currently fall short of the new law's requirements, the researchers believe they could improve their scores with reasonable and plausible measures.

- The researchers see the EU AI Act as a pioneering step for the further use of AI worldwide.

Researchers at Stanford University have studied which foundational AI language models can be used under the EU AI Act. The results speak for or against the EU AI Act, depending on your point of view.

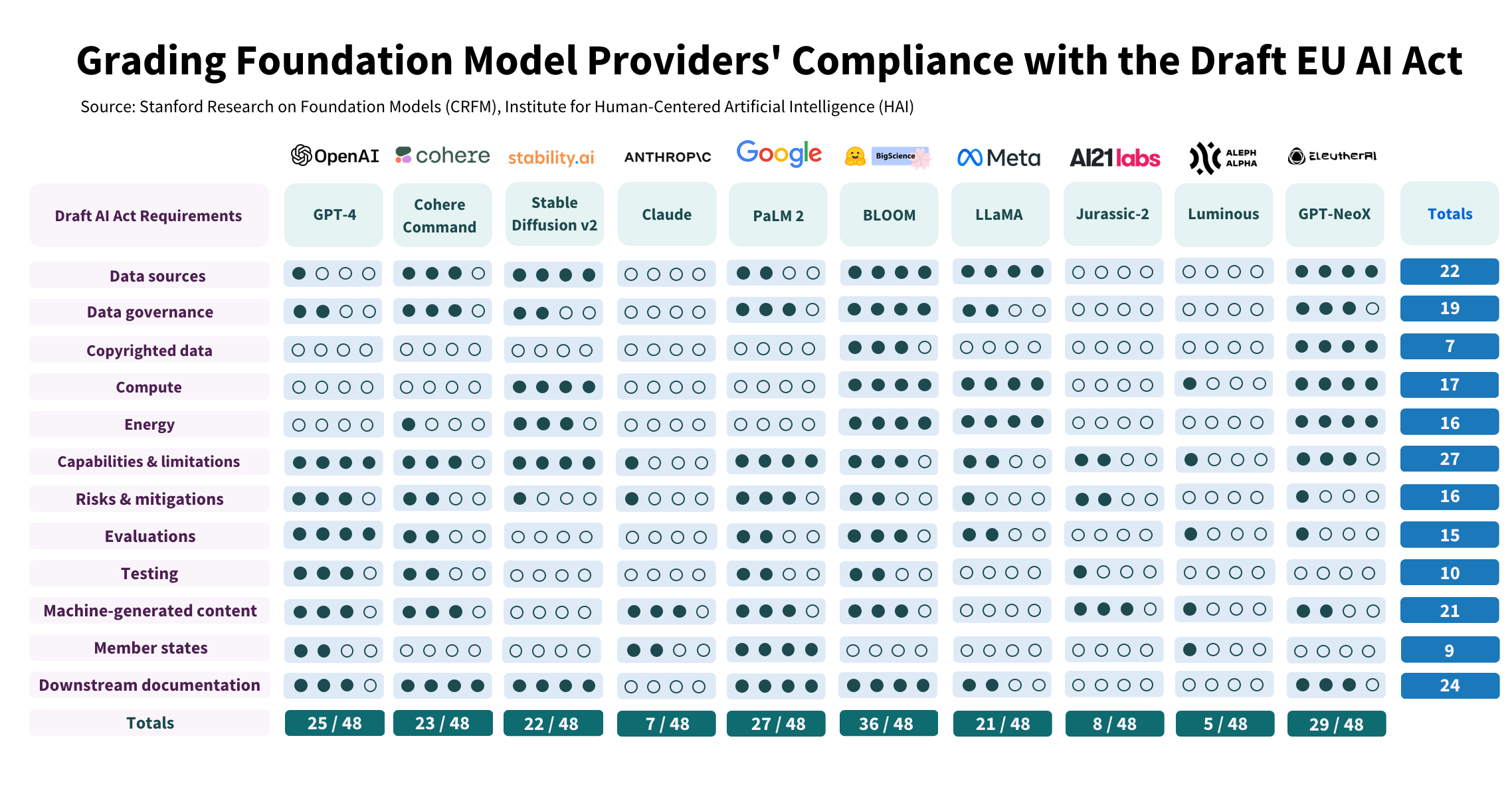

None of the ten language models examined achieved full compliance with the EU AI Act, which would be equivalent to 48 points. The researchers evaluated the language models in a total of twelve categories, each worth a maximum of four points.

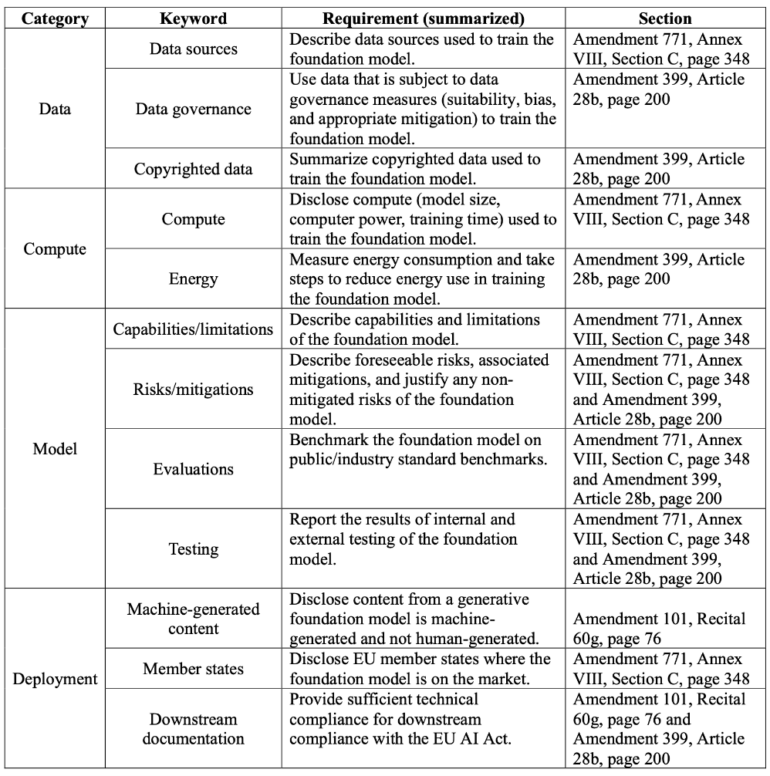

The categories include transparency of data sources, handling of proprietary data, risk mitigation, and computational and energy requirements. Two lead authors performed the initial scoring according to a predetermined methodology. Their scores were then discussed and voted on by all authors.
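The arithmetic behind the scale can be sketched in a few lines of Python. This is only an illustration of the rubric as described here (twelve categories, up to four points each), not the researchers' own tooling. The function names and the assumption that each category is scored from 0 to 4 are hypothetical; the two totals are the ones reported in this article.

```python
# Rubric as described in the article: 12 categories, max. 4 points each.
NUM_CATEGORIES = 12
MAX_PER_CATEGORY = 4
MAX_TOTAL = NUM_CATEGORIES * MAX_PER_CATEGORY  # 48 points = full compliance


def total_score(category_scores):
    """Sum one model's per-category scores after basic sanity checks.

    Assumption: each category is scored on a 0-4 scale (the article only
    states the maximum of four points).
    """
    if len(category_scores) != NUM_CATEGORIES:
        raise ValueError(f"expected {NUM_CATEGORIES} category scores")
    for score in category_scores:
        if not 0 <= score <= MAX_PER_CATEGORY:
            raise ValueError(f"score {score} is outside 0-{MAX_PER_CATEGORY}")
    return sum(category_scores)


def share_of_max(total):
    """Express a total as a fraction of the 48-point maximum."""
    return total / MAX_TOTAL


# Totals reported in the article for the two top-ranked models.
reported_totals = {"Bloom": 36, "GPT-NeoX": 29}

for name, total in reported_totals.items():
    print(f"{name}: {total}/{MAX_TOTAL} = {share_of_max(total):.0%}")
# Bloom: 36/48 = 75%
# GPT-NeoX: 29/48 = 60%
```

Read this way, even the best-scoring model reaches three quarters of the maximum.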

Open Source at the top of the list

The two most EU-compliant models are Bloom (36 points) from BigScience and GPT-NeoX (29 points) from EleutherAI. Both are open source and therefore more transparently documented than the models of commercial providers, which disclose less with competition in mind. But while open-source models are generally improving, they still lag behind in performance and are likely to continue to do so, according to OpenAI CEO Sam Altman.

Persistent challenges on which most model providers score poorly include the use of copyrighted material in training data, unclear information about computational and energy requirements, unclear information about risk mitigation measures, and a lack of standards for evaluating model performance, particularly regarding adverse effects.

Because of the Brussels effect, the Stanford researchers consider the EU AI Act the most important AI regulatory initiative at present: lawmakers around the world will look to it for guidance, and multinational companies will seek consistent AI development processes. This, in turn, will shape the digital supply chain and the societal impact of AI, the researchers said.

AI Act compliance "within reach"

Despite the low compliance of many providers, the authors of the study believe that an overall score in the 30 to 40 range would be achievable for many through "meaningful, but plausible changes." Incentives such as fines for non-compliance may be sufficient here, without much regulatory pressure.

"We believe sufficient transparency to satisfy the Act’s requirements related to data, compute and other factors should be commercially feasible if foundation model providers collectively take action as the result of industry standards or regulation," the study states.

Implementing the Act's 12 requirements would lead to "significant positive change in the foundation model ecosystem" and is within reach for most providers, despite poor results at first glance. However, the current trend is toward less transparency.

"Overall, our analysis speaks to a broader trend of waning transparency: providers should take action to collectively set industry standards that improve transparency, and policymakers should take action to ensure adequate transparency underlies this general-purpose technology."
